Performance testing is a type of testing that evaluates how a system performs under various conditions. It focuses on the speed, response time, stability, reliability, scalability, and resource usage of a software application under a particular workload. The main goal is to identify and eliminate performance bottlenecks in the software application. During performance test executions, several parameters are monitored, including:

- Processor Usage

- Memory Use

- Disk Time

- Bandwidth

- Private Bytes

- Committed Memory

- Memory Pages/Second

- Page Faults/Second

- CPU Interrupts Per Second

- Disk Queue Length

- Network Output Queue Length

- Network Bytes Total Per Second

- Response Time

- Throughput

- Amount of Connection Pooling

- Maximum Active Sessions

- Hit Ratios

- Hits Per Second

- Rollback Segment

- Database Locks

- Top Waits

- Thread Counts

- Garbage Collection

These parameters provide insights into the performance of the system and help identify areas for improvement.

CPU, memory and disk usage

Performance testing focuses on how a system performs under a particular load. Its purpose is not to find functional bugs or defects but to give developers the diagnostic information they need to eliminate bottlenecks.

During performance test executions, it is important to monitor CPU, memory, and disk usage to identify performance issues and symptoms. This can be done using various tools such as Windows Performance Monitor, which can generate detailed reports with diagnostics and performance summaries. These reports include general performance summaries of the CPU, disk, network, and memory.

CPU, memory, and disk usage can be monitored using tools like OpManager, which can scrutinize system resources on Windows and Unix-based servers, helping to spot performance bottlenecks early on. It provides detailed utilization reports for each CPU instance, allowing for fine-tuning of monitoring configurations.

Other tools like Cacti offer web-based graphing, allowing users to graph CPU, memory, disk space, and more. Performance Monitor (perfmon), mentioned above, can also log the CPU and memory counters that Task Manager displays, so that usage can be reviewed over time.

Performance testing involves identifying the testing environment, relevant metrics, and test scenarios, then configuring and implementing the test design while capturing the data generated. This data includes measurements and metrics that define the quality of results, such as average response time and peak response time.

By monitoring CPU, memory, and disk usage during performance test executions, developers can identify issues like insufficient hardware resources, memory leaks, and bottlenecks, ensuring efficient software performance.
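As a rough illustration of capturing these three resource types around a test run, here is a minimal Python sketch that uses only the standard library. The names `measure_resources` and `sample_workload` are hypothetical, and the memory figure covers only allocations made by the Python process itself, so it is not a substitute for system-wide counters from a tool such as Performance Monitor.

```python
import shutil
import time
import tracemalloc


def measure_resources(workload, path="."):
    """Run workload() once and report CPU time, peak Python memory, and disk usage."""
    disk_before = shutil.disk_usage(path)
    tracemalloc.start()
    wall_start = time.perf_counter()
    cpu_start = time.process_time()

    workload()

    cpu_seconds = time.process_time() - cpu_start
    wall_seconds = time.perf_counter() - wall_start
    _, peak_bytes = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    disk_after = shutil.disk_usage(path)

    return {
        "wall_seconds": wall_seconds,
        "cpu_seconds": cpu_seconds,
        "peak_python_memory_bytes": peak_bytes,
        # Change in used space on the volume that holds `path`.
        "disk_used_delta_bytes": disk_after.used - disk_before.used,
        "disk_free_bytes": disk_after.free,
    }


def sample_workload():
    # Hypothetical stand-in for the code under test.
    data = [i * i for i in range(200_000)]
    return sum(data)


if __name__ == "__main__":
    for name, value in measure_resources(sample_workload).items():
        print(f"{name}: {value}")
```

CPU time well below wall-clock time suggests the workload spent most of its time waiting (for example on disk or network) rather than computing.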

Network performance

Key Network Performance Metrics:

- Bandwidth: The maximum data transmission rate of a network connection within a specific time frame, typically measured in bits per second (bps) or bytes per second (Bps). Bandwidth utilisation, or network utilisation, refers to the percentage of available bandwidth being used.

- Throughput: The amount of data passing through the network from point A to point B in a given amount of time. Throughput measures the actual data transmission rate and can vary depending on factors such as bandwidth, latency, packet loss, and network congestion.

- Latency: The time it takes for data to reach its destination across a network, usually measured as a round-trip delay in milliseconds. Consistent delays or spikes in latency indicate performance issues.

- Packet Loss: The number of data packets lost during transmission, usually expressed as a percentage of packets sent. Lost packets delay or prevent the fulfilment of data requests, which degrades end-user services.

- Retransmission: The process of resending lost or dropped data packets to complete a data request. A high retransmission rate often indicates network congestion.

- Network Availability: The amount of time a network is accessible and operational, typically expressed as a percentage of uptime over a specific period. The goal is to get as close to 100% availability as practical to ensure consistent service delivery.

- Connectivity: The functionality of connections between devices and nodes on the network. Improper or malfunctioning connections can lead to performance issues and downtime.
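The arithmetic behind several of the metrics above can be sketched in a few lines of Python. The function names and all sample figures are illustrative assumptions, not measurements from a real network.

```python
def throughput_bps(bytes_transferred, seconds):
    """Actual data rate in bits per second."""
    return bytes_transferred * 8 / seconds


def utilisation_percent(throughput, bandwidth):
    """Share of the available bandwidth in use (both values in bps)."""
    return 100.0 * throughput / bandwidth


def packet_loss_percent(packets_sent, packets_received):
    """Percentage of sent packets that never arrived."""
    return 100.0 * (packets_sent - packets_received) / packets_sent


def availability_percent(uptime_seconds, total_seconds):
    """Uptime as a percentage of the observation period."""
    return 100.0 * uptime_seconds / total_seconds


if __name__ == "__main__":
    # Hypothetical sample: 125 MB moved in 10 s over a 1 Gbps link.
    rate = throughput_bps(125_000_000, 10)
    print(f"throughput: {rate / 1e6:.1f} Mbps")
    print(f"utilisation: {utilisation_percent(rate, 1_000_000_000):.1f}%")
    print(f"packet loss: {packet_loss_percent(10_000, 9_950):.2f}%")
    # 2,592 s of downtime in a 30-day period (2,592,000 s).
    print(f"availability: {availability_percent(2_589_408, 2_592_000):.2f}%")
```

With these sample figures the link carries 100 Mbps, which is 10% of its bandwidth, loses 0.5% of its packets, and is available 99.9% of the time.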

Tools for Monitoring Network Performance:

- Network Performance Monitoring (NPM) Solutions: These tools help identify factors affecting network performance, such as bandwidth utilisation, latency, packet loss, and retransmission rates. They provide real-time data and alerts, helping IT teams gain insights and make informed decisions.

- Windows Performance Monitor: A tool that generates detailed reports on system performance, including CPU, disk, network, and memory diagnostics. It helps identify hardware or software changes needed for optimal performance.

- TestComplete: A tool that can track performance metrics such as CPU load, memory and disk usage, and error counts in real time during test runs. It helps identify performance bottlenecks in applications.

Response time

When conducting performance tests, it is essential to assess how the application handles requests and how the response time varies with the load. This helps identify potential bottlenecks and optimise the system's performance. Response time testing involves sending a request to the application and measuring the duration until a response is received.

A commonly cited rule of thumb is that a response within 0.1 seconds feels instantaneous to users. Up to about 1.0 second, users may notice a slight delay, but their flow is not interrupted. Response times beyond 1 second start to degrade the user experience, and at around 8 seconds or more, many users are likely to abandon the site or application.

To measure response time accurately, it is important to consider the entire process, including the time taken to send the request and receive the response. This is often referred to as the "round-trip" time. By monitoring response time during performance tests, developers can identify areas where improvements can be made to enhance the user experience and ensure efficient system performance.
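The round-trip measurement described above can be sketched as follows. This is a minimal example, assuming a hypothetical `fake_request` function in place of a real call to the application; the summary reports the average and peak response times plus a nearest-rank 95th percentile.

```python
import math
import statistics
import time


def time_request(send_request):
    """Return the round-trip time of one request in seconds."""
    start = time.perf_counter()
    send_request()
    return time.perf_counter() - start


def summarize(samples):
    """Average, peak, and nearest-rank 95th percentile of response times."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(0.95 * len(ordered)))
    return {
        "average": statistics.mean(ordered),
        "peak": ordered[-1],
        "p95": ordered[rank - 1],
    }


def fake_request():
    # Hypothetical stand-in for sending a request and waiting for the response.
    time.sleep(0.01)


if __name__ == "__main__":
    samples = [time_request(fake_request) for _ in range(20)]
    for name, value in summarize(samples).items():
        print(f"{name}: {value * 1000:.1f} ms")
```

Reporting a percentile alongside the average helps because a handful of slow requests can hide behind a healthy-looking mean.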

Additionally, response time testing can be performed using various tools, such as LoadRunner, JMeter, and k6, which help measure load times and evaluate the system's performance under different load conditions. These tools enable developers to simulate and analyse response times, identify potential issues, and optimise the application's performance to meet user expectations.

System stability

Error Handling and System Behaviour:

Stability testing focuses on how the system handles errors and its behaviour under stress. This includes tracking the number of errors and exceptions that occur during the test. By monitoring these aspects, testers can identify potential issues and ensure the system can recover from failures.

Resource Utilization:

Monitoring resource utilization is crucial for system stability. This includes tracking CPU load, memory usage, disk usage, and network load. By monitoring these metrics, testers can identify bottlenecks and ensure the system can handle the anticipated load without overloading its resources.

Performance Metrics:

Performance metrics such as response time, throughput, and transaction rates should be closely monitored. These metrics show whether the system's performance stays within acceptable limits. For example, transaction response times indicate whether the server meets the defined minimum and maximum transaction times.

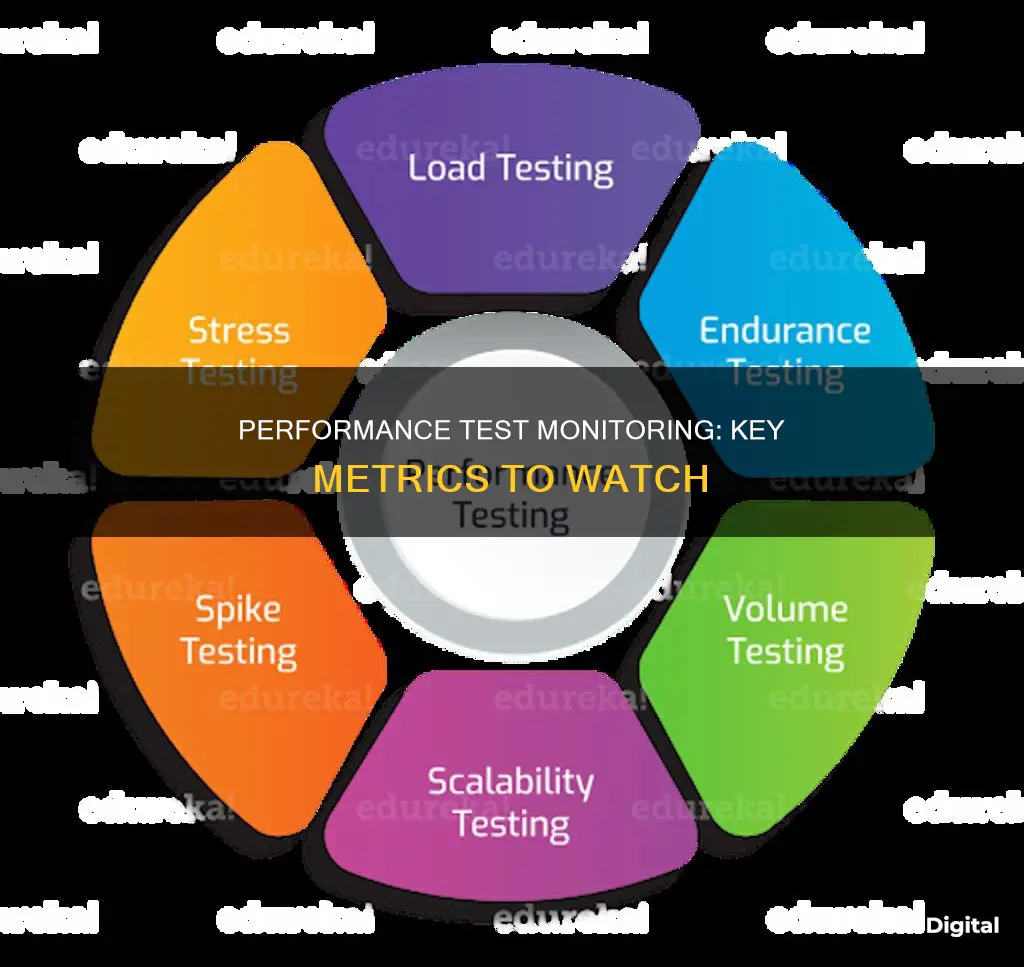

Load Testing:

Load testing applies the anticipated workload to the system, usually by gradually increasing the number of simulated virtual users or transactions, to evaluate its stability. It shows whether the system can handle expected and peak load levels and at what point performance begins to degrade.
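A gradual ramp of virtual users can be sketched with Python's standard thread pool. This is only an illustration: `simulated_request` is a hypothetical stand-in that sleeps instead of calling a real system, and dedicated tools such as JMeter or k6 handle pacing, think time, and reporting far more thoroughly.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def simulated_request():
    """Hypothetical stand-in for one call to the system under test."""
    start = time.perf_counter()
    time.sleep(0.005)
    return time.perf_counter() - start


def run_step(virtual_users, requests_per_user, request=simulated_request):
    """Run one load level; return (throughput in requests/s, mean latency in s)."""
    total = virtual_users * requests_per_user
    started = time.perf_counter()
    # One worker thread per virtual user; the requests are shared among workers.
    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        latencies = list(pool.map(lambda _: request(), range(total)))
    elapsed = time.perf_counter() - started
    return total / elapsed, sum(latencies) / len(latencies)


def ramp(levels=(1, 2, 4, 8), requests_per_user=5):
    """Step through increasing user counts and collect results per level."""
    results = []
    for users in levels:
        throughput, mean_latency = run_step(users, requests_per_user)
        results.append((users, throughput, mean_latency))
    return results


if __name__ == "__main__":
    for users, throughput, mean_latency in ramp():
        print(f"{users:>2} users: {throughput:7.1f} req/s, "
              f"mean latency {mean_latency * 1000:.1f} ms")
```

Against a real system, the level at which throughput stops rising while latency keeps climbing is a first hint of where a bottleneck sits.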

Stress Testing:

Stress testing involves pushing the system beyond its normal capacity to identify its limits. This type of testing helps determine the system's robustness and how it recovers from failures. It also reveals the system's behaviour under extreme conditions, such as sharp increases in user activity or data volume.

Endurance Testing:

Endurance testing, also known as soak testing, evaluates the system's performance over an extended period. It helps identify issues that only emerge with prolonged use, such as memory leaks or other problems that build up gradually. Running the system under sustained load for an extended period, typically hours or days rather than minutes, lets testers confirm its stability and reliability.
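One way to picture leak detection during a soak run is to sample memory after each iteration and check whether it keeps growing. The sketch below uses Python's `tracemalloc`. The functions `leaky` and `steady`, the iteration count, and the 100,000-byte threshold are illustrative assumptions, and a real endurance test would run far longer against the actual system.

```python
import tracemalloc


def memory_growth(operation, iterations=50):
    """Return the traced memory in bytes after each call to operation()."""
    tracemalloc.start()
    samples = []
    for _ in range(iterations):
        operation()
        current, _peak = tracemalloc.get_traced_memory()
        samples.append(current)
    tracemalloc.stop()
    return samples


def looks_like_leak(samples, threshold_bytes=100_000):
    """Compare average memory in the second half of the run with the first half."""
    if len(samples) < 2:
        return False
    half = len(samples) // 2
    first = sum(samples[:half]) / half
    second = sum(samples[half:]) / (len(samples) - half)
    return second - first > threshold_bytes


_cache = []


def leaky():
    # Hypothetical defect: every call keeps 10 kB alive forever.
    _cache.append(bytearray(10_000))


def steady():
    # Allocates the same amount, but the buffer is released right away.
    bytearray(10_000)


if __name__ == "__main__":
    print("leaky :", looks_like_leak(memory_growth(leaky)))
    print("steady:", looks_like_leak(memory_growth(steady)))
```

Comparing the two halves of the run, rather than single samples, keeps short-lived allocation spikes from being mistaken for a leak.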

By monitoring these aspects during performance test executions, developers can ensure system stability, identify potential issues, and optimize the system's performance and resilience.

Scalability

During scalability testing, the performance of hardware, network resources, and databases is evaluated as the number of simultaneous requests varies. This type of testing is particularly important in containerised environments, where the system's ability to scale effectively is critical.

To conduct scalability testing, you can gradually increase the user load or data volume while monitoring system performance. Alternatively, you can maintain the workload at a constant level while modifying the resources, such as CPUs and memory, to observe how the system adapts.
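Either approach produces pairs of a load or resource level and the measured throughput. A simple way to read such results is to compare each level with perfectly linear scaling from the first one. The sketch below assumes hypothetical measurements, and the names `scaling_efficiency` and `first_level_below` are made up for illustration.

```python
def scaling_efficiency(measurements):
    """Compare each (level, throughput) pair with linear scaling from the first pair.

    Returns (level, efficiency) pairs, where 1.0 means perfectly linear scaling.
    """
    base_level, base_throughput = measurements[0]
    per_unit = base_throughput / base_level
    return [
        (level, throughput / (per_unit * level))
        for level, throughput in measurements
    ]


def first_level_below(efficiencies, threshold=0.7):
    """Return the first level whose efficiency drops below threshold, or None."""
    for level, efficiency in efficiencies:
        if efficiency < threshold:
            return level
    return None


if __name__ == "__main__":
    # Hypothetical results: (number of CPUs, requests per second).
    measured = [(1, 100), (2, 190), (4, 320), (8, 400)]
    efficiencies = scaling_efficiency(measured)
    for level, efficiency in efficiencies:
        print(f"{level} CPUs: {efficiency:.0%} of linear scaling")
    print("efficiency drops below 70% at:", first_level_below(efficiencies))
```

In this made-up data, doubling from 4 to 8 CPUs adds little throughput, which is the kind of pattern that points to a bottleneck outside the resource being scaled.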

By performing scalability testing, you can identify potential bottlenecks and ensure that your system can handle an increasing number of users or transactions effectively. It helps to optimise the system's performance and provide a seamless user experience, even as demand fluctuates.

Additionally, scalability testing can reveal issues related to operating system limitations, network configuration, software configuration, and insufficient hardware resources. Addressing these issues is essential for maintaining system stability and responsiveness, which are crucial factors in user experience.

Frequently asked questions

Most performance problems revolve around speed, response time, load time, and poor scalability. Speed is often one of the most important attributes of an application. A slow-running application will lose potential users.

Key objectives of performance testing include:

- Measure the time it takes for a system to respond to a request.

- Assess the number of transactions a system can handle within a specific time frame.

- Monitor CPU, memory, disk, and network usage during testing.

- Determine how well the system scales with an increasing number of users or transactions.

- Ensure the system remains stable under sustained loads.