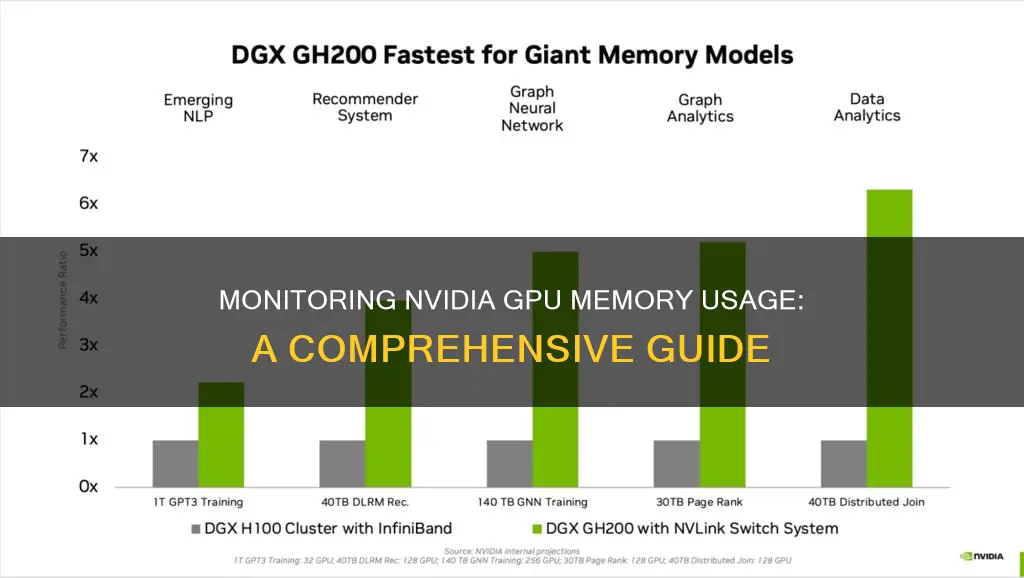

Monitoring the memory usage of an NVIDIA GPU helps developers and users understand how the GPU is performing and how fully it is being utilised, and it helps identify potential issues or bottlenecks. Popular tools for this include the NVIDIA System Management Interface (nvidia-smi), nvtop, atop, and nvidia-settings. These tools provide detailed insights into GPU memory utilisation, compute processes, temperature, and more. On Windows, the NVIDIA Control Panel also offers a user-friendly interface for viewing and managing GPU utilisation, including a graphical representation of GPU utilisation over time.

| Task | Command |
|---|---|
| Check GPU memory usage (nvidia-settings) | `nvidia-settings -q all \| grep Memory` |
| Check GPU memory usage (nvidia-smi) | `nvidia-smi` |
| Refresh every second | `watch -n 1 nvidia-smi` |
| Refresh every 500 milliseconds | `nvidia-smi --loop-ms=500` |
| Refresh GPU 1 only, every 2 seconds | `watch -n 2 nvidia-smi --id=1` |
| Query memory used, total memory, and GPU utilisation | `nvidia-smi --query-gpu=memory.used,memory.total,utilization.gpu --format=csv` |
| Log timestamp, GPU name, GPU and memory utilisation, and total/free/used memory every second | `nvidia-smi --query-gpu=timestamp,name,utilization.gpu,utilization.memory,memory.total,memory.free,memory.used --format=csv --loop=1` |


Using the NVIDIA Control Panel

To monitor your NVIDIA GPU memory usage using the NVIDIA Control Panel, follow these steps:

- Open the NVIDIA Control Panel: Right-click on your desktop and select "NVIDIA Control Panel" from the menu, or search for it in your Start menu.

- Navigate to the 'Manage GPU Utilization' Page: Once the NVIDIA Control Panel is open, go to the "Select a Task" pane under the "Workstation" section. From there, click on "Manage GPU Utilization."

- View GPU Utilization Information: On the "Manage GPU Utilization" page, you will find detailed information about your GPU setup, including:

  - The high-end Quadro and Tesla GPUs installed in your system.

  - The utilization of each Quadro and Tesla GPU.

  - Whether ECC is enabled for each GPU.

- View GPU Utilization Graphs: To see a visual representation of your GPU utilization over time, click on the "GPU Utilization Graphs" option. This will provide you with a graph showing GPU utilization trends.

- Change the Usage Mode of a GPU (Optional): If you need to change the usage mode of a specific GPU, you can do so from the "Manage GPU Utilization" page. Under the Usage Mode section, select the appropriate option for each Quadro card. You can choose between handling professional graphics and compute (CUDA) needs or professional graphics needs only. Please note that Tesla cards are automatically dedicated to compute needs and cannot be changed.

- Apply Changes (Optional): After making any changes to the usage mode, click "Apply" to save your settings.

By following these steps, you can monitor GPU utilization through the NVIDIA Control Panel and adjust the usage modes of your GPUs as needed. Note that this page reports utilization rather than memory usage; for per-GPU memory figures, use a command-line tool such as nvidia-smi, described below.


Using the nvidia-smi command

The NVIDIA System Management Interface (nvidia-smi) is a command-line utility for monitoring the performance of NVIDIA GPU devices. It ships with the NVIDIA driver on Linux and 64-bit Windows, and it can report query information in either XML or human-readable plain text format.

To monitor GPU memory usage using the nvidia-smi command, you can use the following queries:

- To get an overview of GPU memory usage, utilization, and temperature, simply run the `nvidia-smi` command.

- To list the name, PCI bus ID, and VBIOS version of each device, use the following query:

$ nvidia-smi --query-gpu=gpu_name,gpu_bus_id,vbios_version --format=csv

To monitor GPU metrics for host-side logging, which works for both ESXi and XenServer, use this query:

$ nvidia-smi --query-gpu=timestamp,name,pci.bus_id,driver_version,pstate,pcie.link.gen.max,pcie.link.gen.current,temperature.gpu,utilization.gpu,utilization.memory,memory.total,memory.free,memory.used --format=csv -l 5

To continuously monitor GPU power and clock details, use this query:

$ nvidia-smi --query-gpu=index,timestamp,power.draw,clocks.sm,clocks.mem,clocks.gr --format=csv -l 1

To monitor GPU usage in real time, with details such as timestamp, GPU name, GPU utilization, memory utilization, total memory, free memory, and used memory, run the following command:

$ nvidia-smi --query-gpu=timestamp,name,utilization.gpu,utilization.memory,memory.total,memory.free,memory.used --format=csv --loop=1

You can adjust the refresh interval, in seconds, by changing the value after `--loop`.
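Because the query output is plain CSV, it is easy to post-process. The following is a minimal sketch, not an NVIDIA-provided tool: `mem_pct` is a hypothetical shell function that assumes rows produced with `--format=csv,noheader,nounits` and the columns index, memory.used, and memory.total. It prints each GPU's memory use as a percentage and flags any GPU at or above a threshold (90% by default).

```bash
# Hypothetical helper (not part of nvidia-smi): reads rows of
# "index, memory.used, memory.total" in csv,noheader,nounits form on stdin
# and prints each GPU's memory use as a percentage of its total memory.
# $1 = alert threshold in percent (defaults to 90).
mem_pct() {
    local threshold=${1:-90}
    # Split on a comma plus any following spaces, as nvidia-smi emits ", ".
    # Rows whose total is not a positive number (e.g. "[N/A]") are skipped.
    awk -F', *' -v t="$threshold" '
        NF >= 3 && $3 + 0 > 0 {
            pct = 100 * $2 / $3
            flag = (pct >= t + 0) ? " [HIGH]" : ""
            printf "GPU %s: %.1f%% of %s MiB used%s\n", $1, pct, $3, flag
        }'
}
```

For example, `nvidia-smi --query-gpu=index,memory.used,memory.total --format=csv,noheader,nounits | mem_pct 80` prints one line per GPU and marks any GPU using 80% or more of its memory.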

To output GPU utilization, memory utilization, memory in use, and free memory with a timestamp every 5 seconds, use this command:

$ nvidia-smi --query-gpu=timestamp,name,utilization.gpu,utilization.memory,memory.used,memory.free --format=csv -l 5

By default, the output is written to standard output, but you can use the `-f filename` argument to redirect it to a file.

Note that, unless you add a loop option such as `-l` or `--loop`, the nvidia-smi command provides only a snapshot of the GPU at a single moment. For a more comprehensive understanding of GPU memory usage, monitor the GPU over a longer period or over multiple iterations of your code.
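To study usage over a longer period, you can combine `-l` with `-f` to log samples to a file and then summarise the log. The sketch below assumes a log written by `nvidia-smi --query-gpu=timestamp,index,memory.used --format=csv,nounits -l 5 -f gpu_log.csv` (the file name is only an example). `peak_mem` is a hypothetical helper that reports the highest memory.used value seen for each GPU and when it occurred.

```bash
# Hypothetical helper: report the peak memory.used value per GPU from a CSV
# log with columns "timestamp, index, memory.used" (written with nounits, so
# memory values are plain numbers in MiB). $1 = path to the log file.
peak_mem() {
    awk -F', *' '
        $1 == "timestamp" || NF < 3 { next }    # skip header and blank lines
        {
            gpu = $2; used = $3 + 0
            if (!(gpu in peak) || used > peak[gpu]) {
                peak[gpu] = used; when[gpu] = $1
            }
        }
        END { for (g in peak) printf "GPU %s peak: %d MiB at %s\n", g, peak[g], when[g] }
    ' "$1" | LC_ALL=C sort
}
```

Run it after logging has finished, for example `peak_mem gpu_log.csv`. Sorting with `LC_ALL=C` keeps the per-GPU lines in a stable order regardless of locale.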


Using nvtop

Nvtop is a GPU and accelerator process-monitoring tool that works with NVIDIA GPUs. Similar to the widely used htop, it can handle multiple GPUs and displays information about them in a familiar, htop-like layout.

Nvtop is available on Ubuntu:

$ sudo apt install nvtop

Nvtop has a built-in setup utility that allows you to customise the interface to your needs. Simply press F2 and select the options that are best for you. You can save your preferences by pressing F12, and they will be loaded the next time you run nvtop.

Nvtop also comes with a manual page and command-line options. For quick help on the command-line arguments, use either of the following:

$ nvtop -h

$ nvtop --help

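To monitor GPU usage in real time, simply run `nvtop`. It refreshes its display on its own, so it does not need to be wrapped in `watch`. To change the refresh interval, use the `-d` (`--delay`) option, which is measured in tenths of a second. For example, to update every two seconds:

$ nvtop -d 20

This provides updated measures of GPU utilisation and memory every two seconds. To quit nvtop, press q or F10.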
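If you want the same two-second refresh on machines where nvtop may not be installed, a small wrapper can prefer nvtop and fall back to the `watch -n 2 nvidia-smi` approach listed in the table at the top of this article. This is a hypothetical convenience function (`gpu_monitor` is not a standard command), and it only accepts whole seconds.

```bash
# Hypothetical helper: show live GPU stats, refreshing every $1 seconds
# (whole seconds, default 2). Uses nvtop when it is installed, otherwise
# falls back to re-running nvidia-smi under watch.
gpu_monitor() {
    local secs=${1:-2}
    if command -v nvtop >/dev/null 2>&1; then
        # nvtop's -d/--delay option is measured in tenths of a second.
        nvtop -d "$(( secs * 10 ))"
    else
        watch -n "$secs" nvidia-smi
    fi
}
```

Running `gpu_monitor 5`, for instance, starts nvtop with `-d 50`, or `watch -n 5 nvidia-smi` if nvtop is missing.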

Nvtop also provides an easy-to-read graphical display of the state of the GPU devices. In its process list, the GPU and GPU MEM columns show the GPU utilisation, GPU memory usage, and GPU memory utilisation for your process.
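If you need per-process GPU memory figures like nvtop's GPU MEM column inside a script, nvidia-smi can report similar data through its `--query-compute-apps` option. The sketch below assumes rows from `nvidia-smi --query-compute-apps=pid,process_name,used_memory --format=csv,noheader,nounits`. `mem_by_process` is a hypothetical helper that totals the memory used by each process name.

```bash
# Hypothetical helper: total GPU memory per process name from rows of
# "pid, process_name, used_memory" (csv,noheader,nounits, values in MiB).
# Rows reporting "[N/A]" for used_memory contribute 0 to the total.
mem_by_process() {
    awk -F', *' '
        NF >= 3 { total[$2] += $3 }
        END { for (p in total) printf "%s %d MiB\n", p, total[p] }
    ' | LC_ALL=C sort
}
```

Pipe the query into it, for example `nvidia-smi --query-compute-apps=pid,process_name,used_memory --format=csv,noheader,nounits | mem_by_process`. Unlike nvtop, this produces a one-off snapshot.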


Using atop

The atop command is a powerful Linux command-line utility for monitoring system resources and performance. It provides real-time information about the CPU, memory, disk, and network usage of the system, and it can also report GPU statistics when the atopgpu service is running.

To use atop to monitor NVIDIA GPU memory usage, follow these steps:

- Determine the node and GPU device number: On a Slurm cluster, use the squeue command to find the node your job is running on, and the scontrol show jobid command to get the GPU device number.

- Generate a report with atopsar: Use the following command structure to generate a report of GPU statistics for a specific node and date:

$ ssh <node> atopsar -g -r /var/log/atop/<logfile> | less

Replace `<node>` with the node your job is running on, and `<logfile>` with the relevant log file, which is named according to the date.

- Interpret the report: The report displays time stamps (in 10-minute intervals), the GPU device number, GPU utilisation, and GPU memory utilisation. Use the spacebar to scroll through the report and examine specific time intervals.

Note: The atop log files are typically retained for approximately one month, although retention depends on the system's configuration. If a timestamp has empty fields, the GPU was not utilised during that interval. If a report fails to generate, the atopgpu service was not running during the logging period.
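On a Slurm cluster, the lookup and report steps above can be combined into one function. This is a minimal sketch under several assumptions: `atop_gpu_report` is a hypothetical name, you can ssh to compute nodes without a password, the job is still listed by squeue and runs on a single node, and you pass in the log file name yourself because it depends on the date.

```bash
# Hypothetical helper: print atopsar's GPU report for the node running a
# Slurm job. $1 = job ID, $2 = atop log file name under /var/log/atop/.
# Assumes a single-node job that squeue still lists, and ssh access to it.
atop_gpu_report() {
    local jobid=$1 logfile=$2 node
    # -h drops the header line; -o '%N' prints only the job's node list.
    node=$(squeue -h -j "$jobid" -o '%N') || return 1
    if [ -z "$node" ]; then
        echo "job $jobid is not listed by squeue" >&2
        return 1
    fi
    ssh "$node" atopsar -g -r "/var/log/atop/$logfile"
}
```

As with the manual command, pipe the result into a pager: `atop_gpu_report <jobid> <logfile> | less`. Jobs spanning several nodes return a node list rather than a single host name, so they would need extra handling.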


Using nvidia-settings

To monitor your Nvidia GPU memory usage, you can use the nvidia-settings command-line utility. Here is a step-by-step guide on how to use it:

- Open a terminal or command prompt on your system.

- Type the following command: `nvidia-settings -q all | grep Memory`. This command displays all the memory-related attributes reported for your Nvidia GPU.

- To query a specific aspect of memory usage, use the -q flag followed by the attribute name. For example, to check the amount of dedicated GPU memory in use: `nvidia-settings -q useddedicatedgpumemory`.

- To monitor GPU memory usage continuously in real time, combine the watch command with `nvidia-settings`. For example, to update the output every 0.1 seconds: `watch -n0.1 "nvidia-settings -q GPUUtilization -q useddedicatedgpumemory"`.

- You can also pipe the output of `nvidia-settings` to other tools for further analysis or formatting. For example, you can use grep to filter the output for specific memory-related information.

- Additionally, if you have multiple Nvidia GPUs in your system, you can target a specific GPU by prefixing the attribute with a target specification, for example `nvidia-settings -q [gpu:1]/UsedDedicatedGPUMemory`.

- Keep in mind that `nvidia-settings` may require an active X11 display server to function properly. If you encounter issues, ensure that your display server is running and try again.
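The queries above can be wrapped in a small function that reports used and total dedicated memory for one GPU. This sketch assumes a running X server and a driver that exposes the `UsedDedicatedGPUMemory` and `TotalDedicatedGPUMemory` attributes (reported in MB). It uses the `-t` (terse) option so that only the values are printed; `gpu_mem_summary` is a hypothetical name.

```bash
# Hypothetical helper: print dedicated memory use for one GPU as
# "GPU n: used/total MB (pct%)". $1 = GPU index (defaults to 0).
# Assumes an X server is running and both attributes are available.
gpu_mem_summary() {
    local gpu=${1:-0} used total
    # "[gpu:N]/Attribute" targets a single GPU; -t prints only the value.
    used=$(nvidia-settings -t -q "[gpu:$gpu]/UsedDedicatedGPUMemory") || return 1
    total=$(nvidia-settings -t -q "[gpu:$gpu]/TotalDedicatedGPUMemory") || return 1
    # Refuse to divide by a missing, zero, or non-numeric total.
    [ "$total" -gt 0 ] 2>/dev/null || return 1
    # Shell arithmetic is integer-only, so the percentage is truncated.
    echo "GPU $gpu: ${used}/${total} MB ($(( used * 100 / total ))%)"
}
```

Because it is a shell function, calling it from `watch` requires defining it inside the command that watch runs; for occasional checks, simply call `gpu_mem_summary 1`.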

By following these steps, you can use `nvidia-settings` to monitor your Nvidia GPU memory usage from the command line and pass the results to other tools for further analysis.


Frequently asked questions

You can use the nvidia-smi command-line utility to monitor the performance of Nvidia GPU devices. To do this, run the nvidia-smi command in your terminal. You can also use the --id option to target a specific GPU. For example, if your code is running on device 1, you can use nvidia-smi --id=1.

To view the utilization of GPUs on Windows, open the Nvidia Control Panel. From the Select a Task pane under Workstation, click on "Manage GPU Utilization". This will provide information such as the utilization of each GPU in the system and whether ECC is enabled for each GPU.

There are also several third-party tools for monitoring Nvidia GPU memory usage. Popular options include nvtop, nvitop, and GPU Dashboards in JupyterLab. These tools often provide additional features and customisation options compared with the built-in Nvidia tools.