Monitoring the performance of machine learning models is a critical task that ensures their ongoing accuracy, reliability, and performance. Here are some key reasons why monitoring is essential:

- Performance Tracking: Monitoring helps track key performance metrics such as accuracy, precision, recall, and F1 score, allowing for the detection of deviations from expected standards.

- Concept Drift Detection: Monitoring helps identify when the distribution of input data changes over time, potentially affecting the model's accuracy.

- Data Quality Assurance: Monitoring ensures that the input data fed into the model remains of high quality, as inconsistent or noisy data can negatively impact performance.

- Label Quality: In supervised learning, monitoring helps identify issues with mislabeled data, which is crucial for model accuracy.

- Compliance and Fairness: Monitoring can reveal biases in model predictions, ensuring the model does not exhibit discriminatory behaviour across different demographic groups.

- User Experience and Business Impact: Monitoring allows organisations to track user satisfaction and ensure that the model meets user expectations, contributing positively to the organisation's goals.

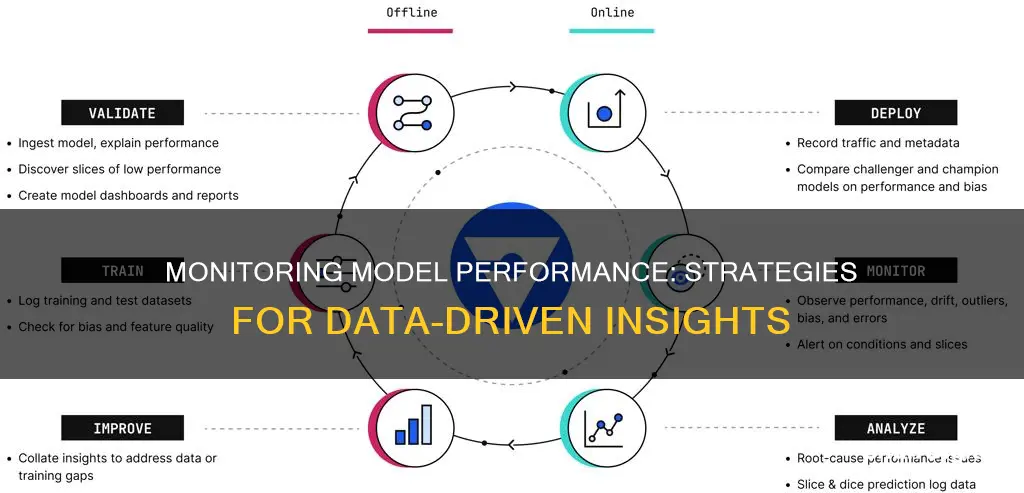

To effectively monitor machine learning models, it is recommended to adopt both functional and operational monitoring approaches:

- Functional Monitoring: This focuses on assessing the model's predictive accuracy and its ability to adapt to changing data. It involves monitoring model metrics such as accuracy, precision, recall, and detecting shifts in data distribution.

- Operational Monitoring: This focuses on the model's real-time performance and interactions within the production environment, including resource utilisation, response time, error rates, and throughput.

By implementing these monitoring strategies, organisations can maintain robust and reliable machine learning systems throughout their lifecycle.

What You'll Learn

- Monitor data quality and integrity to ensure the model is fed data it can handle

- Track data and target drift to detect when the model needs updating

- Measure model performance against actual values to identify issues

- Monitor performance by segment to understand the model's quality on specific slices of data

- Monitor for biases and fairness issues to ensure the model does not exhibit discriminatory behaviour

Monitor data quality and integrity to ensure the model is fed data it can handle

Monitoring data quality and integrity is a critical aspect of ensuring that your machine learning (ML) model performs effectively and generates accurate predictions. Here are some strategies to ensure data quality and integrity for your ML model:

Data Validation and Quality Control:

- Implement automated and manual quality checks at various stages of the data pipeline, including data ingestion, processing, and storage.

- Perform statistical tests to identify outliers or anomalies and conduct visual inspections to uncover inconsistencies or errors.

- Ensure that the data conforms to a predefined schema or structure by validating incoming data against a defined schema.

- Examine the data to understand its characteristics, properties, and relationships, using data profiling techniques to identify anomalies, inconsistencies, and errors.

- Correct inaccuracies, duplicate entries, and inconsistencies in the data through data cleansing processes.

- Establish and enforce validation rules to prohibit the introduction of invalid data into the system, maintaining data integrity.

- Formulate data quality metrics to assess data quality and monitor improvements over time, providing insights into the effectiveness of data validation efforts.

Robust Data Pipelines and Data Engineering Best Practices:

- Design and implement scalable, reliable, and fault-tolerant data pipelines to ensure precise ingestion, processing, and storage of data.

- Utilize automated tools for data ingestion, minimizing manual intervention and reducing potential errors.

- Clean, normalize, and preprocess data to ensure it conforms to the appropriate format required by the ML model, promoting consistency and compatibility.

- Implement error handling mechanisms to gracefully manage data pipeline failures and maintain data integrity during unforeseen events.

- Continuously monitor data pipeline performance, log errors, and generate alerts for swift issue resolution, preserving data reliability.

- Adopt standardized formats for data storage to enhance consistency and ease of access across different systems.

- Apply data caching and partitioning techniques to optimize data retrieval and processing performance.

- Utilize distributed computing technologies, such as Apache Spark, to efficiently process large volumes of data.

Data Governance and Cataloging:

- Appoint data stewards responsible for overseeing data quality and adherence to data governance policies, ensuring data integrity and trustworthiness.

- Develop a centralized data catalog that records information about data sources, attributes, and metadata, streamlining data lineage tracking and managing data dependencies effectively.

- Establish role-based access controls to ensure that only authorized users can access and modify data, preserving data integrity and preventing unauthorized changes.

- Create and enforce well-defined data governance policies that provide clear guidance on data handling, storage, and usage practices, promoting a uniform approach to data management.

- Regularly monitor compliance with data governance policies and regulatory standards, swiftly identifying and addressing any discrepancies or issues.

Data Versioning and Lineage Tracking:

- Employ data versioning tools to track and manage dataset modifications, storing historical snapshots to provide a comprehensive view of data evolution over time.

- Implement lineage tracking solutions to follow data from its source through various transformations, simplifying debugging processes and impact analysis for data-related concerns.

- Maintain detailed metadata for each dataset version, documenting information about data quality, transformations, and dependencies, which can be leveraged for informed decision-making and problem-solving.

- Ensure that data versioning and lineage tracking practices comply with auditing requirements, promoting transparency and accountability in data management processes.

- Develop a robust change management process to address data modifications and understand their effects on the overall system, preserving data integrity.

Monitoring Data and Concept Drift:

- Implement automated monitoring systems to detect drifts in data distribution, patterns, and relationships, generating alerts when significant shifts occur.

- Observe alterations in the underlying relationships between features and the target variable, and update or retrain the model as necessary to maintain accuracy and relevance.

- Utilize advanced drift detection techniques, such as statistical tests, to proactively identify changes in data or concepts, enabling timely responses to potential issues.

- Establish a procedure for regular model retraining and updating using up-to-date data to mitigate the negative effects of data and concept drift, ensuring optimal model performance.

- Monitor key performance metrics of ML models to gain insights into model behavior and facilitate the detection of drift-related problems.

Data Privacy and Security:

- Employ data anonymization techniques, such as masking or pseudonymization, to safeguard sensitive data while preserving privacy and utility.

- Utilize encryption methods to secure data at rest and in transit, protecting it from unauthorized access and potential breaches.

- Establish role-based access controls to manage access to data, limiting data exposure to only authorized users.

- Perform regular audits to verify compliance with data privacy and security regulations, identify potential vulnerabilities, and ensure adherence to industry standards.

- Develop and implement an incident response plan to address potential security breaches, minimize damage, and facilitate swift recovery.

Identifying Your ASUS Monitor: A Step-by-Step Guide

You may want to see also

Track data and target drift to detect when the model needs updating

Tracking data and target drift is essential to detect when a machine learning model needs updating. This process involves monitoring changes in the input data and the desired output, also known as the target, over time. Here are some steps to effectively track data and target drift:

- Check the data quality: Ensure that the data is accurate and consistent. Validate the schema of the data in production matches the development data schema. Detect and address any data integrity issues, such as missing values, data type mismatches, or schema changes.

- Investigate the drift: Plot the distributions of the drifted features to understand the nature and extent of the drift. Determine if it is data drift, concept drift, or both. Data drift occurs when the input data distribution changes, while concept drift happens when the relationship between the input and the desired output changes.

- Retrain the model: If labels or ground truth data are available, consider retraining the model on the new data. This involves updating the model with the latest data to improve its performance and adaptability to changing conditions.

- Calibrate or rebuild the model: In cases where retraining is insufficient, consider calibrating or rebuilding the model. This involves making more significant changes to the training pipeline, such as testing new model architectures, hyperparameters, or feature processing approaches.

- Pause the model and use a fallback: If labels are not available or the data volume is insufficient for retraining, consider pausing the model and using alternative decision-making strategies, such as human expert judgment, heuristics, or rules-based systems.

- Find low-performing segments: Identify segments of the data where the model is underperforming and focus on improving its performance for those specific segments. This can be done by defining the segments of low performance and routing predictions accordingly.

- Apply business logic on top of the model: Make adjustments to the model's output or decision thresholds to align with business requirements and mitigate the impact of drift. This approach requires careful consideration and testing to avoid unintended consequences.

- Proactively monitor and intervene: Implement regular monitoring and testing procedures to detect drift early on. Intervene proactively by retraining the model, updating the training data, or addressing specific issues identified through monitoring.

ELMB ASUS Monitors: How Does It Work?

You may want to see also

Measure model performance against actual values to identify issues

Measuring model performance against actual values is essential to identify issues and ensure the model is effective and reliable. This process involves comparing the model's predictions with the known values of the dependent variable in a dataset. By doing so, we can quantify the disagreement between the model's predictions and the actual values, which helps us understand how well the model is performing and whether it meets the desired objectives.

- Goodness-of-fit vs. Goodness-of-prediction: Goodness-of-fit evaluates how well the model's predictions explain or fit the dependent-variable values used for developing the model. On the other hand, goodness-of-prediction focuses on how well the model predicts the value of the dependent variable for new, unseen data. Goodness-of-fit is typically used for explanatory models, while goodness-of-prediction is applied to predictive models.

- Model evaluation metrics: The choice of evaluation metrics depends on the nature of the problem and the type of data. For classification problems, common metrics include accuracy, precision, recall, F1-score, etc. For regression problems, metrics such as mean squared error (MSE), mean absolute error (MAE), R-squared, and others are used.

- Data visualization: Visualizing the model's performance can provide a clearer understanding of its behaviour and uncover patterns or anomalies in the data. Techniques like confusion matrices, precision-recall curves, ROC curves, and scatter plots can help assess the model's performance on classification and regression tasks.

- Case studies and best practices: Studying real-world examples and best practices can provide valuable insights into how models perform in practical scenarios. By learning from the experiences of others, we can avoid common mistakes, make informed decisions, and improve our model evaluation techniques.

- Addressing data integrity issues: It is important to validate the consistency of feature names, data types, and detect any changes or missing values in the production data schema compared to the development data schema. This helps identify potential issues that may impact model performance.

- Detecting data and concept drift: Data drift occurs when the probability distribution of input features changes over time, which can be due to changes in data structure or real-world events. Concept drift, on the other hand, refers to changes in the relationship between features and target labels over time. Monitoring and detecting data and concept drift is crucial for identifying shifts in data distribution and model behaviour.

- Model and prediction monitoring: Continuously evaluating the model's performance on real-world data is essential. Monitoring tools can help automate this process and notify you of significant changes in metrics such as accuracy, precision, or F1-score. This allows for early detection of issues and helps maintain optimal model performance.

- Comparing with baseline models: Comparing the performance of your model with baseline or expert prediction models can provide insights into potential improvements. By training simple models on new data and monitoring drifts in performance or feature importance, you can identify when it's time to retrain or update your production model.

- A/B testing and automated retraining: A/B testing involves running two models simultaneously and comparing their performance, which helps evaluate the effectiveness of a new model without fully deploying it. Automated retraining is important for models with swift data changes, where an online learning approach can be beneficial.

Mounting Monitors: Are Screws Universal for Wall Mounts?

You may want to see also

Monitor performance by segment to understand the model's quality on specific slices of data

Monitoring performance by segment is crucial for understanding a machine learning model's quality on specific slices of data. This technique, known as model slicing, allows for a detailed analysis of the model's performance on specific features or data segments, providing valuable insights into its effectiveness and any potential issues.

Model slicing involves evaluating the model's predictions against the actual target values, then breaking down this comparison into univariate or multivariate analyses. By selecting specific features or segments of the data, practitioners can assess and compare the model's performance on these subsets, identifying areas where the model excels or underperforms.

This approach offers several benefits, including enhanced transparency, interpretability, and focused improvement efforts. It also enables continuous monitoring, allowing for the detection of performance discrepancies that might otherwise be overlooked. Additionally, it facilitates scenario-specific evaluation, ensuring the model's robustness across diverse data subsets and conditions.

When monitoring performance by segment, it is important to track relevant evaluation metrics such as accuracy, precision, recall, and F1-score for each segment. This provides a detailed understanding of the model's performance and can help identify areas that require improvement or refinement.

Furthermore, model slicing can be used to address issues related to fairness and bias in machine learning models. By monitoring performance across different demographic groups, for example, practitioners can identify any disparities in model accuracy or other metrics, ensuring fair treatment and compliance with ethical and legal standards.

In conclusion, monitoring performance by segment is a powerful technique for understanding a model's quality on specific slices of data. It provides nuanced insights, enhances interpretability, and facilitates continuous improvement and governance of machine learning models.

HP Envy Monitor Sizes: What Options Are Available?

You may want to see also

Monitor for biases and fairness issues to ensure the model does not exhibit discriminatory behaviour

Monitoring for biases and fairness issues is a critical aspect of machine learning model development and deployment. Models can inadvertently perpetuate biases present in the training data, which, if not addressed, can have serious ethical and legal implications, especially if the model operates in sensitive areas such as hiring, lending, or healthcare. Therefore, it is essential to ensure that the model's decisions are fair and unbiased. Here are some strategies to monitor for biases and fairness issues:

- Identify Potential Sources of Bias: Bias can arise from missing feature values, unexpected feature values, or skewed data representation in your dataset. For example, missing temperament data for certain breeds of dogs in a rescue dog adoptability model could lead to less accurate predictions for those breeds.

- Evaluate Model Performance: Break down model performance by subgroup to ensure fairness. For instance, in a rescue dog adoptability model, evaluate performance separately for each dog breed, age group, and size group to ensure the model performs equally well for all types of dogs.

- Use Fairness Metrics: Fairness metrics provide a systematic way to measure and address biases. Examples include statistical parity (demographic parity), equal opportunity, predictive parity, and treatment equality. These metrics help identify and quantify bias, evaluate the trade-offs between accuracy and fairness, and guide model development to create more equitable outcomes.

- Monitor Key Performance Indicators (KPIs): Define relevant KPIs that align with your model's objectives and higher-level business objectives. Continuously monitor these KPIs to detect significant changes or deviations from established benchmarks.

- Detect and Address Model Drift: Model drift occurs when the relationship between input data and model predictions changes over time, leading to performance degradation. Monitor for data drift, concept drift, and prediction drift to ensure the model remains accurate and relevant as the environment or data evolves.

- Establish a Cross-Functional Monitoring Strategy: Involve stakeholders from various teams, such as engineering, data science, customer success, product, and legal, in your monitoring program. This ensures a comprehensive monitoring approach and enables quick and efficient responses to issues.

- Automate Monitoring and Alerts: Leverage monitoring tools and platforms to automate data collection, analysis, and alerting. Set up notifications for relevant stakeholders when performance metrics deviate from defined thresholds.

- Conduct Regular Reviews: Periodically evaluate the model's effectiveness, alignment with business objectives, and fairness. Adjust monitoring strategies as needed based on changes in data, requirements, or model performance.

- Document and Share Findings: Document monitoring results, analyses, and corrective actions. Share findings with relevant stakeholders to facilitate informed decision-making and improve transparency and accountability.

Hooking Up a Third Monitor: Unlocking the Power of 1060

You may want to see also

Frequently asked questions

Some key metrics to monitor include accuracy, F1-score, recall, precision, nDCG, mean squared error, and AUC-ROC.

There are several methods to monitor machine learning models in real-time, including the use of coarse functional monitors, non-linear latency histogramming, normalizing user response data, and comparing score distributions to reference distributions and canary models.

ML model monitoring provides insights into model behaviour and performance in production, alerts when issues arise, and provides actionable information to investigate and remediate these issues.

Good and bad model performance depends on the underlying application. For example, a 1% accuracy decrease for pneumonia detection from X-ray images in a hospital system has very different implications from a 1% accuracy decrease for animal classification in an Android app.

When choosing the right cloud-based HPC resource for AI and machine learning workloads, consider factors such as the specific requirements of your workloads, the performance and scalability of the resources, and the cost and availability of the resources.