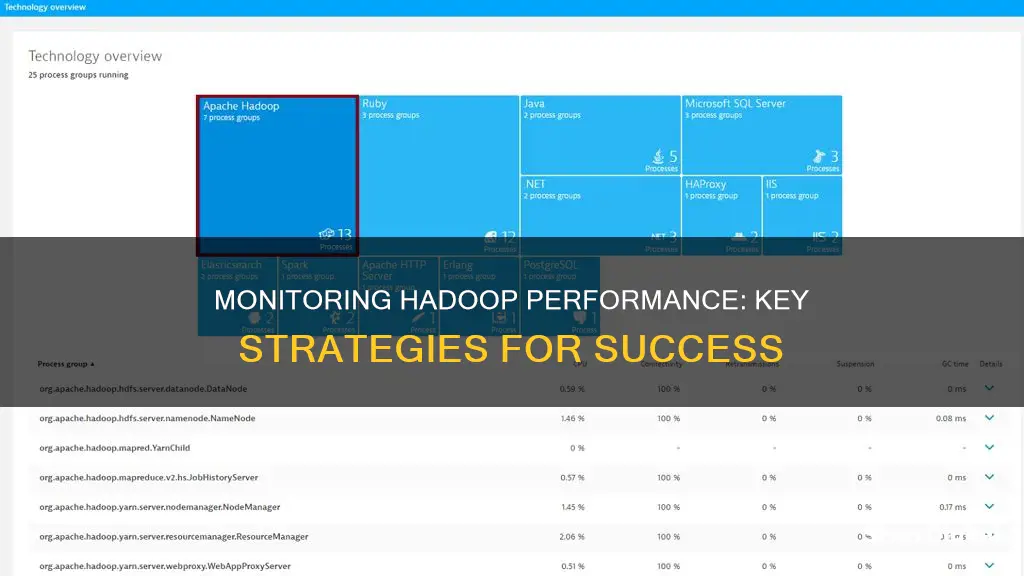

Monitoring Hadoop performance is essential to ensure efficient data processing and storage. The Hadoop Distributed File System (HDFS) is a critical component, providing reliable and scalable data storage. Effective monitoring of HDFS performance metrics helps identify bottlenecks and optimise the system's overall performance. Various tools are available for monitoring Hadoop, including cluster monitoring tools and application performance monitoring solutions. Cluster monitoring tools notify you of failures, whereas application performance monitoring solutions also show where and why something failed and alert the responsible team.

| Category | Metrics or tools |
|---|---|
| NameNode metrics | DFS Capacity Utilization; Heap Memory Statistics; Non-Heap Memory Statistics; Number of concurrent file access connections across DataNodes; Number of under-replicated blocks; Number of blocks pending for replication; Number of scheduled replications; Number of fatal, error and warning logs; Number of new, running, blocked, waiting and terminated threads; Total number of allocated blocks, missing blocks and blocks with corrupted replicas; CPU Utilization; Memory Utilization; Disk Utilization; Availability |
| DataNode metrics | DFS space used by the DataNode; Number of blocks cached; Heap Memory Statistics; Non-Heap Memory Statistics; Failed Cache Blocks; Failed Uncache Blocks; Number of fatal, error and warning logs; Number of new, running, blocked, waiting and terminated threads; Number of failed volumes |
| YARN metrics | Number of completed applications; Number of running and pending applications; Number of failed and killed applications; Number of unhealthy, lost, active, decommissioned and rebooted node managers; Total amount of reserved, allocated and available memory; Number of reserved and allocated virtual cores; Number of allocated and reserved containers |
| Cluster monitoring tools | Nagios, OpsView, Sensu, StatsD, CollectD, MetricBeat, Prometheus, Netdata, Datadog, JMX, System monitoring tools, Check_mk, Elastic Beats, WinLog, Hortonworks Data Platform, Cloudera Data Analytics, Site24x7, LabEx Monitoring, Dynatrace |
| Application performance monitoring solutions | Pepperdata, Cloudera Manager, Apache Ambari, Driven |

Monitoring NameNode metrics

NameNode-emitted metrics:

- DFS Capacity Utilization: This includes the used and free space in the DFS cluster, as well as the total number of files tracked by the NameNode.

- Heap Memory Statistics: This covers the current heap memory used and committed in GB.

- Non-Heap Memory Statistics: This includes the current non-heap memory used and committed in GB.

- File Access Connections: The number of concurrent file access connections across DataNodes is monitored.

- Block Replication: The number of under-replicated blocks, blocks pending for replication, and those scheduled for replication are tracked.

- Log Information: The number of fatal, error, and warning logs is recorded.

- Thread Information: The number of new, running, blocked, waiting, and terminated threads is counted.

- Allocated Blocks: The total number of allocated blocks, along with any missing or corrupted blocks, is monitored.

- Node Information: All the nodes associated with the cluster are listed.

- Resource Utilization: The CPU, memory, and disk utilization of the NameNode are tracked.

- NameNode Availability: The availability status of the NameNode, either "Up" or "Down," is monitored.
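Most of the NameNode metrics above can be read as JSON from the NameNode's `/jmx` HTTP endpoint. The sketch below assumes a Hadoop 3 NameNode with its web UI on the default port 9870 and reads the `FSNamesystem` bean. The host name is hypothetical, and the attribute names (`CapacityTotal`, `CapacityUsed`, `CapacityRemaining`, `UnderReplicatedBlocks`, `MissingBlocks`, `CorruptBlocks`, and so on) should be checked against the `/jmx` output of your own Hadoop version.

```python
import json
import urllib.request

# Hypothetical host name; Hadoop 3 NameNodes serve /jmx on web port 9870 by default.
FSNAMESYSTEM_URL = (
    "http://namenode.example.com:9870/jmx"
    "?qry=Hadoop:service=NameNode,name=FSNamesystem"
)


def fetch_bean(url: str, timeout: float = 10.0) -> dict:
    """Return the first MBean in a Hadoop /jmx JSON response."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)["beans"][0]


def summarize_namesystem(bean: dict) -> dict:
    """Derive capacity and block-health figures from FSNamesystem attributes."""
    total = bean["CapacityTotal"]

    def pct(part: int) -> float:
        # Guard against a cluster that reports zero capacity.
        return round(100.0 * part / total, 1) if total else 0.0

    return {
        "dfs_used_pct": pct(bean["CapacityUsed"]),
        "remaining_pct": pct(bean["CapacityRemaining"]),
        "files": bean["FilesTotal"],
        "blocks": bean["BlocksTotal"],
        "under_replicated": bean["UnderReplicatedBlocks"],
        "pending_replication": bean["PendingReplicationBlocks"],
        "scheduled_replication": bean["ScheduledReplicationBlocks"],
        "missing": bean["MissingBlocks"],
        "corrupt": bean["CorruptBlocks"],
        # Missing or corrupt blocks mean data may be unreadable, so treat them as unhealthy.
        "healthy": bean["MissingBlocks"] == 0 and bean["CorruptBlocks"] == 0,
    }
```

For example, `summarize_namesystem(fetch_bean(FSNAMESYSTEM_URL))` returns a small dictionary that can be logged or passed to an alerting system. Keeping the HTTP call separate from the summary lets the arithmetic be tested without a live cluster.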

NameNode JVM metrics:

- Garbage Collection: The Java Virtual Machine (JVM) relies on garbage collection processes to free up memory. The frequency and duration of these collections can impact the performance of the NameNode.

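- Memory Allocation: The NameNode keeps the entire file system namespace and block map in its JVM heap, so the heap must be sized to match the number of files and blocks in the cluster. Monitoring the memory allocated to the JVM helps ensure the NameNode has enough headroom to operate efficiently.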
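One way to quantify the garbage-collection and memory concerns above is to sample the NameNode's `JvmMetrics` bean (`Hadoop:service=NameNode,name=JvmMetrics` on the same `/jmx` endpoint) twice, a known number of seconds apart. `GcTimeMillis` is cumulative, so GC overhead is its increase divided by the elapsed time; `MemHeapUsedM` and `MemHeapMaxM` are reported in MB. The helper names are hypothetical, and the samples are assumed to be plain dictionaries already parsed from JSON.

```python
def gc_overhead(prev: dict, curr: dict, interval_s: float) -> float:
    """Fraction of the sampling interval spent in garbage collection."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    gc_ms = curr["GcTimeMillis"] - prev["GcTimeMillis"]
    if gc_ms < 0:
        # Counter reset (the process restarted): count only GC time since the restart.
        gc_ms = curr["GcTimeMillis"]
    return gc_ms / (interval_s * 1000.0)


def heap_utilization(sample: dict) -> float:
    """Used heap as a fraction of the maximum heap size (both in MB)."""
    return sample["MemHeapUsedM"] / sample["MemHeapMaxM"]
```

A steadily rising GC overhead together with heap utilization close to 1.0 suggests the NameNode heap is undersized for the current namespace.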

By closely monitoring these NameNode metrics, administrators can gain valuable insights into the health and performance of their Hadoop cluster, enabling them to optimize resource utilization, detect and resolve issues promptly, and maintain high availability and performance for their big data applications.

Monitoring DataNode metrics

DataNode metrics are host-level metrics specific to a particular DataNode.

Remaining Disk Space

Running out of disk space can cause a number of problems in a cluster. If left unrectified, a single DataNode running out of space could quickly cascade into failures across the entire cluster as data is written to an increasingly shrinking pool of available DataNodes. Tracking this metric over time is essential to maintain a healthy cluster; you may want to alert on this metric when the remaining space falls dangerously low (less than 10% remaining).
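A minimal sketch of the 10% rule described above, assuming you already collect each DataNode's total and remaining capacity in bytes (for example from the per-node figures printed by `hdfs dfsadmin -report`). The function name and input format are hypothetical.

```python
from typing import Dict, List, Tuple


def low_space_nodes(
    nodes: Dict[str, Tuple[int, int]], threshold_pct: float = 10.0
) -> List[str]:
    """Return hosts whose remaining space is below threshold_pct of capacity.

    `nodes` maps a DataNode host name to (capacity_bytes, remaining_bytes).
    """
    flagged = []
    for host, (capacity, remaining) in sorted(nodes.items()):
        if capacity <= 0:
            continue  # skip nodes reporting no capacity instead of dividing by zero
        if 100.0 * remaining / capacity < threshold_pct:
            flagged.append(host)
    return flagged
```

Running this on every collection cycle and alerting when the returned list is non-empty gives an early warning before writes start concentrating on the remaining DataNodes.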

Number of Failed Storage Volumes

By default, a single volume failing on a DataNode will cause the entire node to go offline. Depending on your environment, that may not be desirable, especially since most DataNodes operate with multiple disks. If you haven't set `dfs.datanode.failed.volumes.tolerated` in your HDFS configuration, you should definitely track this metric. Remember that when a DataNode goes offline, the NameNode must schedule re-replication of every block that was stored on it from the surviving replicas, causing a burst of network traffic and potential performance degradation.

To prevent a slew of network activity from the failure of a single disk (if your use case permits), set the tolerated level of volume failure in `hdfs-site.xml` by giving the `dfs.datanode.failed.volumes.tolerated` property a value of N, where N is the number of volume failures a DataNode should tolerate before shutting down.
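Whether or not volume failures are tolerated, it helps to alert before a DataNode runs out of headroom. The sketch below compares a DataNode's failed-volume count (for example the `NumFailedVolumes` attribute that the DataNode reports over `/jmx`; verify the name on your version) with the configured `dfs.datanode.failed.volumes.tolerated` value. The status labels are hypothetical, and only non-negative tolerated values are handled.

```python
def volume_failure_status(failed_volumes: int, tolerated: int = 0) -> str:
    """Classify a failed-volume count against dfs.datanode.failed.volumes.tolerated.

    The DataNode stops serving once failures exceed the tolerated value
    (the default of 0 means any single failure takes it offline).
    """
    if tolerated < 0:
        raise ValueError("this sketch only handles non-negative tolerated values")
    if failed_volumes == 0:
        return "ok"
    if failed_volumes > tolerated:
        return "offline"   # more failures than tolerated: the DataNode shuts down
    if failed_volumes == tolerated:
        return "critical"  # one more failure will take the DataNode offline
    return "warning"       # some volumes failed, but headroom remains
```

With N = 2, for instance, one failure returns `"warning"` and a second returns `"critical"`, giving operators a chance to replace disks before the node drops out.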

Bytes Written/Read

BytesWritten and BytesRead are the total number of bytes written to and read from the DataNode.

Blocks Written/Read/Replicated/Verified/Removed

BlocksWritten and BlocksRead are the total number of blocks written to and read from the DataNode. BlocksReplicated is the total number of blocks replicated by the DataNode. BlocksVerified is the total number of blocks verified by the DataNode. BlocksRemoved is the total number of blocks removed from the DataNode.
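Because `BytesWritten`, `BytesRead`, and the `Blocks*` metrics are running totals, throughput has to be derived from two samples taken a known interval apart. A minimal sketch, assuming that a counter which goes backwards means the DataNode restarted and its counters started again from zero; the helper name is hypothetical.

```python
def counter_rate(prev: int, curr: int, interval_s: float) -> float:
    """Per-second rate from two samples of a cumulative counter such as BytesWritten."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    # After a restart the counter begins again at zero, so the current value
    # is the best available estimate of the increase since the restart.
    delta = curr - prev if curr >= prev else curr
    return delta / interval_s
```

For example, `counter_rate(1_000, 61_000, 60)` gives 1000.0 bytes per second.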

Volume Failures

VolumeFailures is the total number of volume failures on the DataNode.

Cache Used/Capacity

CacheUsed is the total amount of cache used by the DataNode. CacheCapacity is the total cache capacity of the DataNode.

Failed Cache Blocks

FailedCacheBlocks is the total number of blocks that failed to be cached by the DataNode.

Failed Uncache Blocks

FailedUncacheBlocks is the total number of blocks that failed to be removed from the cache of the DataNode.

Fatal/Error/Warning Logs

LogFatal, LogError, and LogWarn are the total numbers of fatal, error, and warning log messages, respectively, written by the DataNode.

New/Running/Blocked/Waiting/Terminated Threads

ThreadsNew, ThreadsRunnable, ThreadsBlocked, ThreadsWaiting, and ThreadsTerminated are the current numbers of new, running, blocked, waiting, and terminated threads, respectively, in the DataNode's JVM.
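The log counters are cumulative as well, so an increase between two samples says more than the absolute value. The sketch below assumes two dictionaries of JVM metrics using Hadoop's `LogFatal`, `LogError`, and `ThreadsBlocked` names (verify them in your `/jmx` output). The function name and the threshold of 10 blocked threads are illustrative, not recommended values.

```python
from typing import Dict, List


def jvm_alerts(
    prev: Dict[str, int], curr: Dict[str, int], max_blocked: int = 10
) -> List[str]:
    """Compare two JVM metric samples and describe anything worth alerting on."""
    alerts = []
    for name in ("LogFatal", "LogError"):
        new = curr[name] - prev[name]
        if new > 0:  # only increases matter; a drop means the process restarted
            alerts.append(f"{new} new {name} entries")
    if curr["ThreadsBlocked"] > max_blocked:  # example threshold, tune per cluster
        alerts.append(f"{curr['ThreadsBlocked']} blocked threads")
    return alerts
```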

Failed Volumes

FailedVolumes is the total number of failed volumes on the DataNode. A single failed volume will not halt the Hadoop cluster, but it is important to find out why the failure occurred.

Monitoring YARN metrics

YARN metrics can be grouped into three distinct categories: cluster metrics, application metrics, and NodeManager metrics.

Cluster Metrics

Cluster metrics provide a high-level view of YARN application execution. These metrics are aggregated cluster-wide. Some key cluster metrics to monitor include:

- Number of unhealthy nodes: YARN considers a node unhealthy when its disks fill up: each disk whose utilisation exceeds the value of `yarn.nodemanager.disk-health-checker.max-disk-utilization-per-disk-percentage` in `yarn-site.xml` is marked bad, and the node is reported as unhealthy once too few healthy disks remain. Unhealthy nodes should be investigated and resolved quickly to keep the Hadoop cluster running without interruption.

- Number of active nodes: This metric tracks the current count of active, normally operating nodes. It should remain constant except during planned maintenance. If a NodeManager fails to maintain contact with the ResourceManager, it is marked as "lost" and its resources become unavailable for allocation.

- Number of lost nodes: Nodes can lose contact with the ResourceManager due to network issues, hardware failure, or a hung process.

- Number of failed applications: If the percentage of failed map or reduce tasks exceeds a specific threshold, the application as a whole will fail.

- Total amount of memory/amount of memory allocated: Memory is a critical resource in Hadoop. If you are nearing the ceiling of your memory usage, you can add new NodeManager nodes, tweak the amount of memory reserved for YARN applications, or change the minimum amount of RAM allocated to containers.
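The ResourceManager publishes these cluster-wide figures through its REST API at `/ws/v1/cluster/metrics` (web port 8088 by default), which returns a `clusterMetrics` object with fields such as `unhealthyNodes`, `lostNodes`, `appsFailed`, `allocatedMB`, and `totalMB`. The sketch below checks a parsed response. The host name, the helper names, and the 90% memory threshold are assumptions; note that `appsFailed` is a running total since the ResourceManager started.

```python
import json
import urllib.request
from typing import List

# Hypothetical host name; 8088 is the ResourceManager's default web port.
RM_METRICS_URL = "http://resourcemanager.example.com:8088/ws/v1/cluster/metrics"


def fetch_cluster_metrics(url: str = RM_METRICS_URL, timeout: float = 10.0) -> dict:
    """Return the clusterMetrics object from the ResourceManager REST API."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)["clusterMetrics"]


def cluster_alerts(m: dict, max_mem_pct: float = 90.0) -> List[str]:
    """Turn a clusterMetrics dictionary into a list of human-readable alerts."""
    alerts = []
    if m["unhealthyNodes"] > 0:
        alerts.append(f"{m['unhealthyNodes']} unhealthy node(s)")
    if m["lostNodes"] > 0:
        alerts.append(f"{m['lostNodes']} lost node(s)")
    if m["appsFailed"] > 0:
        alerts.append(f"{m['appsFailed']} failed application(s)")
    # Guard against totalMB == 0 on an empty cluster.
    if m["totalMB"] and 100.0 * m["allocatedMB"] / m["totalMB"] > max_mem_pct:
        alerts.append("memory allocation above threshold")
    return alerts
```

A typical call is `cluster_alerts(fetch_cluster_metrics())`.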

Application Metrics

Application metrics provide detailed information on the execution of individual YARN applications. Some key application metrics include:

- Progress: This metric gives a real-time window into the execution of a YARN application, with a reported value between zero and one (inclusive). Tracking progress alongside other metrics can help determine the cause of any performance degradation.
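To use progress as a signal, one option is to flag running applications whose progress has stopped advancing. A sketch, assuming progress is sampled at a fixed interval on the 0 to 1 scale described above (rescale first if your source reports a percentage); the function name, `window`, and `min_delta` are arbitrary example choices.

```python
from typing import Sequence


def is_stalled(
    progress_samples: Sequence[float], window: int = 5, min_delta: float = 0.001
) -> bool:
    """True if progress advanced less than min_delta over the last `window` samples."""
    if len(progress_samples) < window:
        return False  # not enough history to judge
    recent = progress_samples[-window:]
    if recent[-1] >= 1.0:
        return False  # a finished application is not stalled
    return recent[-1] - recent[0] < min_delta
```

A stalled application can then be correlated with node health or memory metrics to see whether it is waiting on resources.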

NodeManager Metrics

NodeManager metrics provide resource information at the individual node level. Some key NodeManager metrics include:

- Number of containers that failed to launch: Configuration issues and hardware-related problems are common causes of container launch failure.

- Launched containers: The total number of launched containers, along with the numbers of successfully completed, failed, and killed containers.

- Allocated memory: The memory currently allocated, in GB, along with the available memory and virtual cores.
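A per-node summary can turn these counters into ratios that are easier to alert on. The sketch assumes a dictionary holding a NodeManager's `ContainersLaunched`, `ContainersFailed`, `AllocatedGB`, and `AvailableGB` metrics (as exposed, for example, by the `NodeManagerMetrics` bean on the NodeManager's `/jmx` endpoint; check the names on your version). `ContainersFailed` counts every failed container, not only launch failures, so the ratio is a rough proxy; the function name is hypothetical.

```python
def nodemanager_summary(m: dict) -> dict:
    """Summarize one NodeManager's container failures and memory allocation."""
    launched = m["ContainersLaunched"]
    allocated = m["AllocatedGB"]
    total = allocated + m["AvailableGB"]
    return {
        # Share of launched containers that failed; 0.0 if nothing has launched yet.
        "failure_ratio": m["ContainersFailed"] / launched if launched else 0.0,
        # Allocated memory as a percentage of the memory YARN can hand out on this node.
        "memory_allocated_pct": 100.0 * allocated / total if total else 0.0,
    }
```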

Monitoring ZooKeeper metrics

Understanding ZooKeeper's Role

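In high-availability (HA) deployments, ZooKeeper plays a crucial role in ensuring the availability of the HDFS NameNode and YARN's ResourceManager: it is used to elect the active instance and to trigger automatic failover. More generally, it provides coordination services within a distributed system, allowing distributed processes to communicate and synchronise.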

Key Metrics to Monitor

- zk_followers: The number of followers in the ZooKeeper ensemble (reported only by the leader). Changes in this value should be investigated: a change is expected when a node is commissioned or decommissioned, but an unplanned change can mean a server has failed.

- zk_avg_latency: The average time, in milliseconds, that ZooKeeper takes to respond to a request. Latency spikes can slow the failover between servers when a ResourceManager or NameNode fails.

- zk_num_alive_connections: The number of clients connected to the ZooKeeper server, including clients on hosts outside the ensemble. A sudden drop in this value may indicate that clients have lost their connection to ZooKeeper, reducing the cluster's resilience to node loss.
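The checks above can be expressed as simple rules over two consecutive snapshots of these metrics. A sketch, assuming the metrics have already been collected into dictionaries keyed by the names above; the function name and the thresholds (100 ms average latency, a drop of more than half in connections) are example values rather than recommendations. Because `zk_followers` is only reported by the leader, the follower check is skipped when the key is absent.

```python
from typing import Dict, List, Optional


def zk_alerts(
    curr: Dict[str, float],
    prev: Optional[Dict[str, float]] = None,
    max_avg_latency_ms: float = 100.0,
) -> List[str]:
    """Apply simple alert rules to ZooKeeper metrics from one or two snapshots."""
    alerts = []
    if prev is not None:
        # zk_followers only appears in the leader's output.
        if "zk_followers" in curr and "zk_followers" in prev:
            if curr["zk_followers"] != prev["zk_followers"]:
                alerts.append(
                    f"follower count changed {prev['zk_followers']} -> {curr['zk_followers']}"
                )
        if curr["zk_num_alive_connections"] < 0.5 * prev["zk_num_alive_connections"]:
            alerts.append("alive connections dropped by more than half")
    if curr["zk_avg_latency"] > max_avg_latency_ms:
        alerts.append(f"average latency {curr['zk_avg_latency']} ms")
    return alerts
```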

Tools for Monitoring ZooKeeper

There are several tools available for monitoring ZooKeeper metrics:

- JMX and Four-Letter Words: ZooKeeper exposes its metrics as JMX (Java Management Extensions) MBeans, and it also answers short "four-letter word" commands sent to its client port. The ruok command checks whether the server is running (a healthy server replies imok), and mntr returns a list of metrics including zk_followers, zk_avg_latency, and zk_num_alive_connections.

- ZooKeeper Monitoring Software: Tools such as ManageEngine Applications Manager provide detailed insights into the znodes, watchers, and ephemeral nodes in a ZooKeeper cluster. They collect the relevant metrics, display performance graphs, and alert administrators to potential issues.

- Datadog Integration: Datadog's Agent software can be installed on Hadoop nodes to collect, visualise, and monitor ZooKeeper metrics. It offers a comprehensive dashboard and the ability to set up automated alerts for potential issues.
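Four-letter words are plain text sent over a TCP connection to ZooKeeper's client port (2181 by default); the server writes its reply and closes the connection. Recent ZooKeeper releases only answer commands listed in the `4lw.commands.whitelist` setting, so `mntr` and `ruok` may need to be enabled first. The sketch below sends a command and parses `mntr` output, which is one tab-separated key/value pair per line; the host name in the usage note and the helper names are hypothetical.

```python
import socket
from typing import Dict, Union

Value = Union[int, float, str]


def four_letter_word(host: str, cmd: str, port: int = 2181, timeout: float = 5.0) -> str:
    """Send a four-letter word such as 'ruok' or 'mntr' and return the raw reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(cmd.encode("ascii"))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closes the connection after replying
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def _convert(value: str) -> Value:
    """Turn numeric strings into int or float; leave everything else as text."""
    for cast in (int, float):
        try:
            return cast(value)
        except ValueError:
            pass
    return value


def parse_mntr(text: str) -> Dict[str, Value]:
    """Parse 'mntr' output into a dictionary keyed by metric name."""
    metrics: Dict[str, Value] = {}
    for line in text.splitlines():
        key, sep, value = line.partition("\t")
        if sep:  # ignore lines without a tab separator
            metrics[key.strip()] = _convert(value.strip())
    return metrics
```

For example, `four_letter_word("zk1.example.com", "ruok")` should return `imok` from a healthy server, and `parse_mntr(four_letter_word("zk1.example.com", "mntr"))["zk_avg_latency"]` reads the average latency.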

By closely monitoring these ZooKeeper metrics and utilising appropriate tools, administrators can ensure the optimal performance and availability of their Hadoop clusters.

Using a Hadoop monitoring tool

There are several Hadoop monitoring tools available, and these can be used to monitor the performance of the applications running on your cluster(s). These tools can provide insights into the health of the cluster, such as the capacity of the DFS, the space available, the status of blocks, and the optimisation of data storage.

Site24x7

Site24x7 is a cloud-based package that can be expanded with plugins, including one for Hadoop monitoring. It also examines server resource utilisation. It offers a 30-day free trial.

ManageEngine Applications Manager

This package of monitoring systems runs on Windows Server, Linux, AWS, and Azure, and it specialises in examining the performance of servers and the software that runs on top of them. It offers a 30-day free trial.

Datadog

Datadog is a cloud monitoring tool with a customisable Hadoop dashboard, integrations, and alerts. Its Hadoop monitoring is built from a set of integrations for HDFS, MapReduce, YARN, and ZooKeeper, and it also monitors server metrics. It offers a free trial.

LogicMonitor

LogicMonitor is an infrastructure monitoring platform with a Hadoop package that can monitor HDFS NameNode, HDFS DataNode, YARN, and MapReduce metrics. It collects Hadoop metrics through a REST API. It offers a free trial.

Dynatrace

Dynatrace is an application performance management tool with Hadoop monitoring capabilities. It can automatically detect Hadoop components and display performance metrics for HDFS and MapReduce. It offers a 15-day free trial.

Frequently asked questions

Some key metrics to monitor for Hadoop performance include:

- NameNode metrics: Number of active RPC clients, total number of files and directories, total number of data blocks, and percentage of total storage capacity used.

- DataNode metrics: Amount of data cached, amount of storage space used by HDFS, remaining storage space, and total number of data blocks stored.

- Other metrics: Number of failed applications, number of unhealthy nodes, number of active nodes, and number of dead DataNodes.

There are several tools available for monitoring Hadoop performance, including:

- Site24x7

- Datadog

- LabEx Monitoring

- Dynatrace

- Hortonworks Data Platform (HDP)

- Nagios

- OpsView

- Sensu

- Prometheus

- check_mk

- Elastic Beats

- MetricBeat

Some best practices for monitoring Hadoop performance include:

- Monitoring both the health and performance of Hadoop.

- Collecting Hadoop metrics using tools such as JMX queries or command-line tools.

- Adjusting configuration parameters such as block size, replication factor, and buffer sizes to optimise performance.

- Identifying and addressing bottlenecks, such as high CPU utilisation, network congestion, or disk I/O issues.