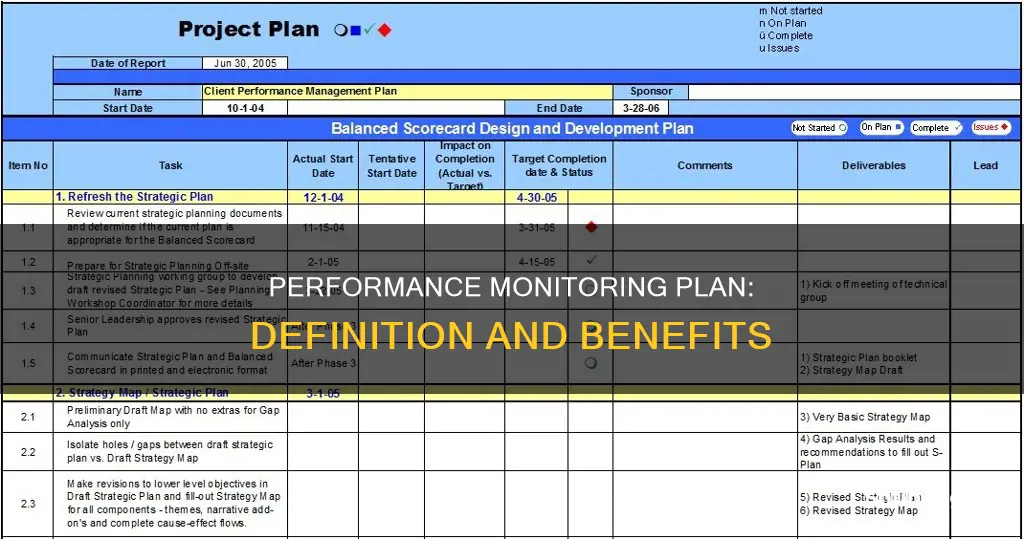

A performance monitoring plan (PMP) is a reference document that outlines the targets and indicators that will be used to monitor and evaluate the performance of a project or program. It includes information on data collection methods, sources, frequency, and responsibilities, as well as planned analysis and reporting procedures. The purpose of a PMP is to help organisations set up and manage the process of monitoring, analysing, evaluating, and reporting progress towards their objectives. It is designed to ensure that comparable data is collected consistently over time and that data is used efficiently to inform decision-making and improve programs.

| Characteristic | Description |
|---|---|
| Purpose | To track and assess the results of interventions throughout the life of a program |
| Type of document | Reference document; living document |
| Frequency of use | Regularly updated |
| Contents | Definition of each indicator, baselines and targets, methods and frequency of data collection, responsible person/office, data quality assessment procedures, data limitations, data analysis issues |
| Common elements | Data collection method, data source, frequency of collection, person(s) responsible, baseline and target values, planned analysis/use, frequency/type of reporting, dates of data quality reviews |
| Development | Developed by a research team or by staff with research experience, with input from the program staff who design and implement the program |
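
One way to keep the common elements in the table consistent across indicators is to hold each PMP row as a structured record. The sketch below is a minimal illustration, not a standard format: the class name `IndicatorEntry`, its field names, and the example values are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class IndicatorEntry:
    """One hypothetical PMP row holding the common elements of an indicator."""
    name: str
    definition: str
    unit: str
    data_source: str
    collection_method: str
    frequency: str
    responsible: str
    baseline: Optional[float] = None
    target: Optional[float] = None
    disaggregation: list[str] = field(default_factory=list)
    planned_analysis: str = ""
    reporting: str = ""
    last_dqa: Optional[str] = None  # date of the latest data quality review, e.g. "2024-06-30"

    def missing_fields(self) -> list[str]:
        """Names of required elements that are still empty."""
        required = ["name", "definition", "unit", "data_source",
                    "collection_method", "frequency", "responsible"]
        missing = [f for f in required if not getattr(self, f).strip()]
        if self.baseline is None:
            missing.append("baseline")
        if self.target is None:
            missing.append("target")
        return missing


# Illustrative entry; all values are made up.
entry = IndicatorEntry(
    name="Condom use at first intercourse",
    definition="Percentage of surveyed youth who report using a condom at first intercourse",
    unit="percentage",
    data_source="Annual youth survey",
    collection_method="Structured questionnaire",
    frequency="Annual",
    responsible="M&E officer",
    baseline=32.0,
    disaggregation=["gender", "age group"],
)
print(entry.missing_fields())  # ['target']
```

A completeness check like `missing_fields` makes gaps in the plan visible before data collection starts.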


Performance indicators

Outcome indicators measure the success of program activities in achieving the defined objectives. They address the question, "Have program activities made a difference?" These indicators evaluate impact and assess whether the interventions have led to the desired changes or improvements. Examples of outcome indicators include the percentage of youth using condoms during their first intercourse, the number and percentage of trained health providers offering family planning services, and the number and percentage of youth with a new sexually transmitted infection (STI).

When selecting performance indicators, consider their relevance, their measurability, and their ability to provide meaningful insights. Each indicator should be clearly defined and assigned a specific unit of measurement that quantifies the change or achievement. For instance, if the indicator is "% increase in household income", the unit of measurement is "percentage". Indicator data should also be disaggregated, that is, broken down by factors such as geography, gender, age, or education level. Disaggregation gives a more nuanced picture of how the project affects different groups.
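
To make disaggregation concrete, the sketch below computes a percentage indicator overall and per group from respondent-level records. It is a minimal illustration under assumptions: the record layout and the field names `gender`, `age_group`, and `used_condom` are hypothetical, and the four survey rows are invented.

```python
from collections import defaultdict


def disaggregated_percentage(records, indicator_key, by):
    """Percentage of records where `indicator_key` is True, overall and per group.

    records: list of dicts, one per respondent (hypothetical survey rows)
    indicator_key: name of a boolean field, e.g. "used_condom"
    by: name of the disaggregation field, e.g. "gender"
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [numerator, denominator]
    for row in records:
        group = row[by]
        counts[group][1] += 1
        if row[indicator_key]:
            counts[group][0] += 1
    total_num = sum(num for num, _ in counts.values())
    total_den = sum(den for _, den in counts.values())
    result = {"overall": 100 * total_num / total_den if total_den else None}
    for group, (num, den) in sorted(counts.items()):
        result[group] = 100 * num / den
    return result


survey = [
    {"gender": "female", "age_group": "15-19", "used_condom": True},
    {"gender": "female", "age_group": "20-24", "used_condom": False},
    {"gender": "male", "age_group": "15-19", "used_condom": True},
    {"gender": "male", "age_group": "20-24", "used_condom": True},
]
print(disaggregated_percentage(survey, "used_condom", "gender"))
# {'overall': 75.0, 'female': 50.0, 'male': 100.0}
```

The same function can be rerun with `by="age_group"` to disaggregate along another dimension.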

Selecting performance indicators involves weighing various considerations and trade-offs, and the optimal indicator is not always the most cost-effective one. Whatever the choice, document the rationale behind it. This documentation helps new staff members understand how decisions were made and supports audits.


Data collection methods

Qualitative Methodologies:

Qualitative data collection methods offer valuable insights into the perspectives, experiences, and behaviours of individuals or groups. These methods include:

- Interviews: In-depth discussions with key informants or members of target groups provide nuanced information and context. Key informant interviews (KIIs) are a common example.

- Focus Groups: Group discussions facilitate the exploration of attitudes, beliefs, and experiences related to the project.

- Observations: Direct observation of behaviours and interactions in specific contexts can offer insights that complement other data sources.

Quantitative Methodologies:

Quantitative data collection methods focus on gathering numerical data and measuring specific indicators. These methods are essential for tracking progress and identifying trends:

- Surveys and Questionnaires: Structured sets of questions administered to a defined population provide standardised data that can be easily compared over time.

- Secondary Data Analysis: Examining existing data sets, reports, or studies can provide additional context or support for primary data collection.

- Performance Data: Collecting metrics and logs on workload performance, including throughput, latency, and completion times, enables the identification of bottlenecks and optimisation opportunities.

- Resource Performance Data: Monitoring resource usage and capacity can inform planning and allocation decisions.

- Connector Performance Data: Measuring how long operations on integrated services take helps evaluate the efficiency of the workload's design.

- Database and Storage Performance Data: Collecting data on database operations, responsiveness, and capacity utilisation supports the identification of bottlenecks and reliability improvements.
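
For the workload performance data described above, throughput and latency can be derived from request timestamps. The sketch below is illustrative only: the `(start_ms, end_ms)` log format is an assumption, and it uses the nearest-rank method for the 95th percentile, which is one of several percentile definitions.

```python
import math
from statistics import mean


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list; pct is between 0 and 100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarise_requests(log_ms):
    """Summarise (start_ms, end_ms) timestamps of completed requests."""
    latencies = [end - start for start, end in log_ms]
    window_ms = max(end for _, end in log_ms) - min(start for start, _ in log_ms)
    return {
        "requests": len(log_ms),
        "throughput_per_s": len(log_ms) / (window_ms / 1000) if window_ms else float("inf"),
        "mean_latency_ms": mean(latencies),
        "p95_latency_ms": percentile(latencies, 95),
    }


# Hypothetical request log: (start, end) in milliseconds.
log_ms = [(0, 200), (500, 900), (1000, 1100), (1500, 3000)]
print(summarise_requests(log_ms))
```

Tracking a high percentile alongside the mean helps surface bottlenecks that an average can hide.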

Data Collection Tools:

To facilitate effective data collection, various tools can be employed, including:

- Survey Software: Online survey tools enable the efficient distribution and collection of survey data.

- Data Management Solutions: Centralised databases allow for secure management and analysis of large data sets.

- Application Performance Monitoring (APM) Tools: APM tools specifically focus on monitoring and reporting application performance, including metrics, traces, and logs.

- Code Instrumentation: Embedding code snippets into the application allows for the capture of performance metrics and the identification of bottlenecks.

- Logic Models: Graphic illustrations that explain how a project's activities lead to desired results, helping establish data collection methods and clarify assumptions.

By employing a combination of these data collection methods and tools, organisations can ensure they gather accurate and comprehensive data to support their performance monitoring and evaluation efforts.
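
As an illustration of the code instrumentation listed among the tools, a decorator can record how long each call to a function takes. This is a minimal sketch: the in-memory `TIMINGS` store and the `load_survey_batch` workload are hypothetical stand-ins, and a real setup would typically export these measurements to an APM or metrics backend instead.

```python
import functools
import time
from collections import defaultdict

# Hypothetical in-memory store of call durations (seconds), keyed by function name.
TIMINGS = defaultdict(list)


def instrumented(func):
    """Record the wall-clock duration of every call to `func`."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            # Recorded even if the call raises, so failures are timed too.
            TIMINGS[func.__name__].append(time.perf_counter() - start)
    return wrapper


@instrumented
def load_survey_batch(n):
    # Hypothetical workload standing in for real application code.
    return sum(i * i for i in range(n))


for size in (10_000, 20_000, 40_000):
    load_survey_batch(size)

durations = TIMINGS["load_survey_batch"]
print(f"{len(durations)} calls, slowest {max(durations) * 1000:.2f} ms")
```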


Data quality assessment

Data Quality Assessment (DQA) is one of the critical components of a Performance Monitoring Plan (PMP), the reference document that sets out a project's targets, indicator definitions, data collection methods, and the procedures for monitoring, analysing, evaluating, and reporting progress towards its objectives.

DQA typically begins when the PMP is developed and continues throughout the project's lifecycle. Organisations are encouraged to conduct DQAs themselves rather than outsource them to third-party experts. Doing so gives staff a deeper understanding of their own data and supports continuous improvement.

Several tools and methodologies are available to support a DQA. For instance, the USAID Data Quality Assessment Checklist assesses performance data against five quality standards: validity, integrity, precision, reliability, and timeliness. The MEASURE Evaluation project also offers a Data Quality Audit tool and a Routine Data Quality Assessment (RDQA) tool. The audit tool is designed for external audit teams conducting comprehensive data quality audits, while the RDQA tool is designed for capacity building and self-assessment.

When conducting a DQA, it is essential to have a clear understanding of the indicators and their precise definitions. The methodology for data collection should be well-defined and consistently applied across all partners involved in the project. Documented evidence of data verification is also crucial, ensuring the accuracy and reliability of the reported data.
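
Documented data verification often takes the form of recounting an indicator from source documents and comparing the recount with what was reported. The sketch below computes a recounted-to-reported ratio per site in that spirit; it is not the official logic of any named tool. The 5% tolerance, the site names, and the counts are all assumptions for illustration.

```python
def verification_factors(reported, recounted):
    """Ratio of recounted to reported values per site.

    reported, recounted: dicts mapping site name -> count.
    1.0 means the report matches the source documents; below 1.0 suggests
    over-reporting and above 1.0 suggests under-reporting. Sites with no
    recount get None because there is no documented verification.
    """
    factors = {}
    for site, rep in reported.items():
        if site not in recounted:
            factors[site] = None
        elif rep == 0:
            factors[site] = 1.0 if recounted[site] == 0 else float("inf")
        else:
            factors[site] = recounted[site] / rep
    return factors


def flag_sites(factors, tolerance=0.05):
    """Sites that were not verified or whose factor differs from 1.0 by more than `tolerance`."""
    return sorted(site for site, f in factors.items()
                  if f is None or abs(f - 1.0) > tolerance)


# Hypothetical monthly totals for one indicator.
reported = {"Clinic A": 120, "Clinic B": 80, "Clinic C": 50}
recounted = {"Clinic A": 120, "Clinic B": 68}
factors = verification_factors(reported, recounted)
print(factors)
print(flag_sites(factors))  # ['Clinic B', 'Clinic C']
```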

In conclusion, Data Quality Assessment is a critical aspect of a Performance Monitoring Plan. By conducting thorough DQAs, organisations can ensure the accuracy, reliability, and timeliness of their data, leading to more effective decision-making and improved project outcomes. Regular DQAs help identify limitations and address data quality issues promptly, contributing to the overall success of the project.


Data limitations

One common data limitation is the availability and accessibility of data. In some cases, the required data may not be easily accessible due to factors such as data sensitivity, privacy concerns, or logistical challenges. For example, data related to individuals' personal information or health records may be restricted to protect confidentiality. In such cases, alternative data sources or methods should be considered, such as anonymised data or aggregated statistics.
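
Aggregated statistics can be produced so that identifying fields never leave the source data and small counts are hidden. The sketch below assumes a minimum cell size of 5, a common but not universal convention; the threshold, the field names, and the records are hypothetical.

```python
from collections import Counter


def aggregate_with_suppression(records, by, min_cell=5):
    """Count records per group, hiding counts smaller than `min_cell`.

    Only the grouping fields in `by` are read, so identifying fields such as
    names never reach the output. Small counts are replaced with None because
    they could allow individuals to be re-identified.
    """
    counts = Counter(tuple(row[key] for key in by) for row in records)
    return {group: (n if n >= min_cell else None)
            for group, n in sorted(counts.items())}


# Hypothetical client-level records (names included only to show they are dropped).
records = (
    [{"name": f"client{i}", "district": "North", "sex": "F"} for i in range(6)]
    + [{"name": f"client{i}", "district": "North", "sex": "M"} for i in range(6, 8)]
)
print(aggregate_with_suppression(records, by=("district", "sex")))
# {('North', 'F'): 6, ('North', 'M'): None}
```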

Another limitation could be the quality and consistency of the data. Data quality issues may arise due to factors such as human error, equipment malfunction, or inconsistencies in data collection methods. It is important to establish data quality assessment procedures to identify and address these issues. This may involve implementing data validation checks, cross-referencing data sources, or utilising advanced data cleaning techniques to identify and rectify inaccuracies or outliers in the data.
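
Two simple checks of the kind mentioned above are a range check for impossible or missing values and an outlier screen. The sketch below uses Tukey's fences (1.5 times the interquartile range) as one possible outlier rule; the field name, the valid range, and the data are assumptions. Flagged values should be investigated rather than deleted automatically.

```python
from statistics import quantiles


def out_of_range(rows, field, low, high):
    """Indices of rows where `field` is missing or outside [low, high]."""
    return [i for i, row in enumerate(rows)
            if row.get(field) is None or not (low <= row[field] <= high)]


def iqr_outliers(values, k=1.5):
    """Values outside Tukey's fences: below Q1 - k*IQR or above Q3 + k*IQR."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    return [v for v in values if v < q1 - k * iqr or v > q3 + k * iqr]


# Hypothetical records and weekly counts used for illustration.
rows = [{"age": 17}, {"age": -3}, {}, {"age": 130}]
print(out_of_range(rows, "age", 0, 120))   # [1, 2, 3]
weekly_visits = [12, 14, 15, 15, 16, 17, 18, 95]
print(iqr_outliers(weekly_visits))         # [95]
```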

Additionally, the timeliness and frequency of data collection can also pose limitations. In some situations, real-time or up-to-date data may not be readily available, leading to delays in monitoring and evaluation processes. This could be due to the inherent nature of the data collection process, such as the time required for surveys or interviews to be conducted and compiled, or the periodic nature of certain data sources, such as annual reports or statistical releases. To mitigate this, it is crucial to define the frequency and timing of data collection, ensuring that it aligns with the monitoring requirements while being feasible and cost-effective.
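
Once a frequency is chosen, the due dates for collection can be generated in advance so that timing is explicit in the plan. The sketch below is one way to do this; the frequency labels and their month intervals are assumptions, and each date is offset from the start date so that month-end dates do not drift.

```python
import calendar
from datetime import date

# Assumed mapping from PMP frequency labels to months between collections.
MONTHS_BETWEEN = {"monthly": 1, "quarterly": 3, "semi-annual": 6, "annual": 12}


def add_months(d, months):
    """Shift `d` by whole months, clamping the day to the length of the target month."""
    total = d.month - 1 + months
    year, month = d.year + total // 12, total % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def collection_schedule(start, end, frequency):
    """Due dates from `start` to `end` inclusive for the given collection frequency."""
    step = MONTHS_BETWEEN[frequency]
    dates = []
    i = 0
    due = start
    while due <= end:
        dates.append(due)
        i += 1
        due = add_months(start, i * step)  # always offset from start to avoid drift
    return dates


print(collection_schedule(date(2024, 1, 31), date(2024, 12, 31), "quarterly"))
```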

Furthermore, data limitations may arise due to changes in the project context or environment. Over time, the dynamics of the project area may evolve, leading to new challenges that impact data collection. For instance, a natural disaster or a shift in government policies could affect the availability and accessibility of data sources. It is important to anticipate and plan for such changes, developing strategies to address potential limitations and ensure the continuity of data collection.

To address data limitations effectively, it is crucial to identify potential challenges in advance, assess their impact, and develop appropriate strategies. This may involve seeking alternative data sources, implementing data quality assurance measures, optimising the frequency and timing of data collection, or establishing contingency plans to adapt to changing circumstances. By proactively addressing data limitations, organisations can enhance the robustness and reliability of their performance monitoring plans.


Data analysis

A PMP outlines the methods of data collection, the frequency of data collection, and the responsible personnel for each indicator being monitored. This structured approach ensures that data is gathered consistently and can be compared over time. The analysis of this data is a complex process that involves multiple steps and stakeholders.

Firstly, the data collected needs to be compiled and organised in a meaningful way. This may involve using spreadsheets, databases, or specialised software, depending on the nature and volume of the data. The compilation process ensures that data from various sources is centralised and easily accessible for further analysis.
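
The compilation step can be as simple as merging exports from several sources into one table while recording where each row came from. The sketch below assumes each source is CSV text with `site`, `period`, and `value` columns; those column names, the source names, and the figures are hypothetical.

```python
import csv
import io


def compile_sources(sources):
    """Merge CSV exports from several sources into one list of rows.

    sources: dict mapping source name -> CSV text with the (assumed) columns
    site, period, value. Each output row records which source it came from.
    """
    combined = []
    for source_name, text in sources.items():
        for row in csv.DictReader(io.StringIO(text)):
            combined.append({
                "source": source_name,
                "site": row["site"].strip(),
                "period": row["period"].strip(),
                "value": float(row["value"]),
            })
    # Sort so that each site's values appear in period order.
    combined.sort(key=lambda r: (r["site"], r["period"]))
    return combined


sources = {
    "clinic_register": "site,period,value\nClinic A,2024-Q2,46\nClinic A,2024-Q1,40\n",
    "district_report": "site,period,value\nClinic B,2024-Q1,31\n",
}
for row in compile_sources(sources):
    print(row)
```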

Next, the compiled data is scrutinised to identify patterns, trends, and deviations from expected outcomes. This analysis draws on statistical techniques, performance metrics, and domain knowledge to interpret the data accurately. Analysts examine the data within the context of the specific project or program to derive insights and conclusions.
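
A basic version of this scrutiny estimates the trend with an ordinary least-squares slope and flags periods that deviate from the expected trajectory. In the sketch below, the 10% relative tolerance, the quarters, and the values are assumptions for illustration; real analyses may need methods that account for seasonality or small samples.

```python
def ols_slope(xs, ys):
    """Least-squares slope of ys against xs (average change per period)."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return sxy / sxx


def deviations(periods, actual, expected, tolerance=0.10):
    """Periods where actual differs from expected by more than `tolerance` (relative)."""
    return [p for p, a, e in zip(periods, actual, expected)
            if e != 0 and abs(a - e) / abs(e) > tolerance]


periods = [1, 2, 3, 4]              # hypothetical quarters
actual = [40, 44, 47, 45]           # hypothetical observed values
expected = [40, 43, 46, 52]         # hypothetical expected trajectory
print(ols_slope(periods, actual))   # 1.8
print(deviations(periods, actual, expected))  # [4]
```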

The insights gained from data analysis are then documented and communicated to relevant stakeholders. This typically involves creating reports, visual representations, or dashboards that convey the findings clearly and concisely. Effective communication of analytical results is essential to ensure that decision-makers across the organisation can interpret the data and take appropriate actions.

Moreover, data analysis in the context of a PMP often involves comparing actual performance against predefined targets and baselines. By establishing key performance indicators (KPIs) and setting measurable targets, organisations can assess whether they are on track to achieve their objectives. This comparative analysis highlights areas of success, as well as areas that require improvement or course correction.
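
A common way to compare actual performance with baselines and targets is to express progress as the share of the planned change achieved: 100 × (actual − baseline) / (target − baseline). This formula works whether the indicator is meant to rise or fall. In the sketch below, the status labels, the comparison with elapsed time, and the 75% warning ratio are assumptions rather than a standard rule, and the example values are invented.

```python
def progress_to_target(baseline, target, actual):
    """Percent of the planned change (baseline -> target) achieved so far.

    Works for indicators meant to rise and for indicators meant to fall,
    because the sign of (target - baseline) cancels out.
    """
    if target == baseline:
        raise ValueError("target must differ from baseline")
    return 100 * (actual - baseline) / (target - baseline)


def status(progress_pct, elapsed_fraction, warn_ratio=0.75):
    """Compare progress with the share of the program period that has elapsed."""
    expected = 100 * elapsed_fraction
    if progress_pct >= expected:
        return "on track"
    if progress_pct >= warn_ratio * expected:
        return "needs attention"
    return "off track"


# Hypothetical indicator meant to rise (e.g. % of youth using condoms).
rise = progress_to_target(baseline=32, target=50, actual=41)
# Hypothetical indicator meant to fall (e.g. % of youth with a new STI).
fall = progress_to_target(baseline=12, target=8, actual=10)
print(rise, status(rise, elapsed_fraction=0.5))   # 50.0 on track
print(fall, status(fall, elapsed_fraction=0.75))  # 50.0 off track
```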

Finally, the results of data analysis inform decision-making and strategic planning. Based on the insights gained, organisations can adjust their strategies, refine their processes, and optimise their interventions. This feedback loop ensures that the performance monitoring plan is dynamic and adaptive, enabling organisations to continuously improve their operations and work towards their goals more effectively.


Frequently asked questions

What is a performance monitoring plan?

A performance monitoring plan (PMP) is a reference document that contains targets, definitions of indicators, methods of data collection, and the frequency of data collection. It is a tool to help set up and manage the process of monitoring, analysing, evaluating, and reporting progress towards achieving objectives.

What does a performance monitoring plan include?

A performance monitoring plan includes the following:

- Performance indicators

- Unit of measurement

- Data disaggregation

- Responsible office/person

- Frequency and timing

- Data collection methods

- Data quality assessment procedures

- Data limitations and actions to address them

- Data analysis issues

- Baselines and targets

Why is a performance monitoring plan important?

A performance monitoring plan is important because it provides a clear structure for monitoring and evaluating the results of interventions throughout a program's life. It ensures that data is used efficiently and enables reporting on results at the end of the program.