Depth mode in cameras is a setting that helps determine the distance between the camera and the subject of an image. It is often implemented with Time-of-Flight (ToF) sensors, which measure this distance using light from lasers or LEDs. Depth cameras are designed to calculate the distance from the device to an object or the distance between two objects. This enables machines to have a 3D perspective of their surroundings and is used in applications such as autonomous vehicles, robotics, and facial recognition.

What You'll Learn

- Depth cameras are sensors that determine the distance between the camera and the subject of an image

- Time-of-flight technology is used in robotics and autonomous vehicles to help them navigate their surroundings

- Depth cameras on smartphones are used in conjunction with other lenses to create a depth effect

- Depth-sensing cameras enable machines to have a 3D perspective of their surroundings

- Depth of field (DoF) is the zone within a photo that appears acceptably sharp and in focus

Depth cameras are sensors that determine the distance between the camera and the subject of an image

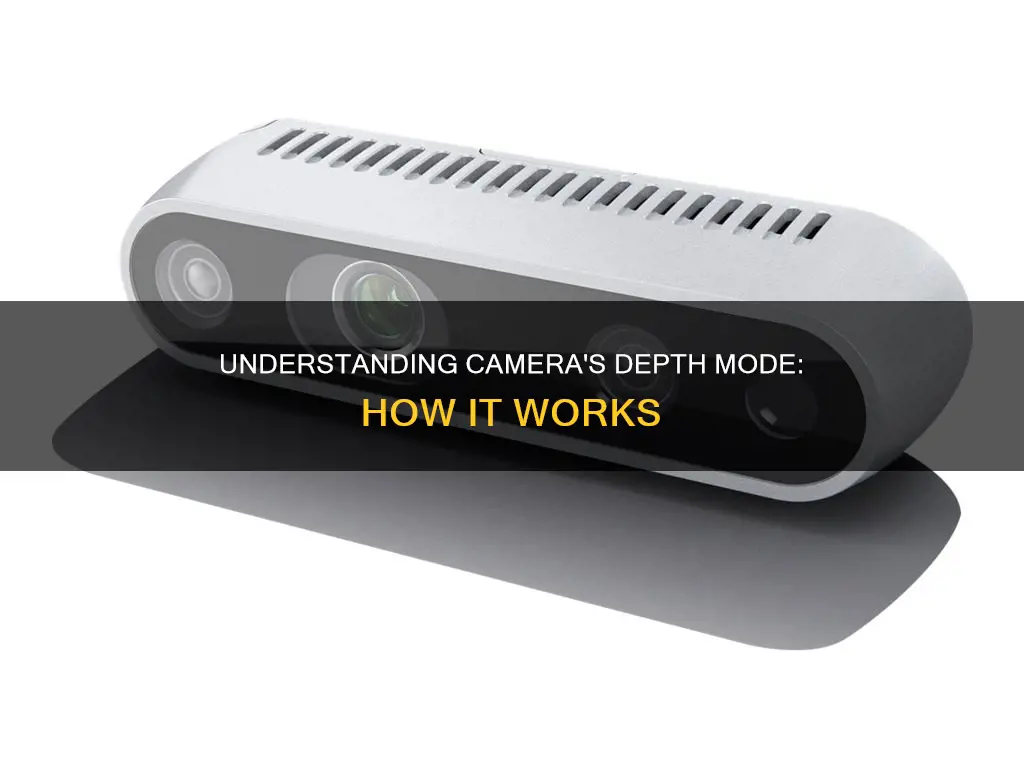

Depth cameras, of which Time-of-Flight (ToF) cameras are a common type, are sensors designed to determine the distance between the camera and the subject of an image. This process is known as depth sensing.

Depth sensing is the measurement of the distance from a device to an object, or of the distance between two objects. A 3D depth-sensing camera does this by detecting nearby objects and measuring the distance to them in real time. This helps the device or equipment integrated with the depth-sensing camera move autonomously and make intelligent decisions.

ToF depth cameras work by flooding the scene with light and measuring the time it takes for the light to reach the object and reflect back to the sensor. This is known as the Time-of-Flight (ToF) principle, which refers to the time taken by light to travel a given distance. Because the light makes a round trip, the distance to the object is half the measured travel time multiplied by the speed of light. The sensor collects the light reflected from the target object and converts it into raw pixel data, which is then processed to extract depth information.
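The round-trip timing idea can be illustrated with a minimal Python sketch. The function name and the 10-nanosecond reading are hypothetical, chosen only for illustration; a real ToF sensor performs this measurement in hardware for every pixel.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Return the one-way distance for a measured round-trip time.

    The light travels to the object and back, so the path is halved.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


if __name__ == "__main__":
    # Hypothetical reading: the reflected light returns after 10 nanoseconds.
    print(f"{tof_distance_m(10e-9):.3f} m")  # prints "1.499 m"
```

The example also shows why timing precision matters: light covers roughly 30 cm per nanosecond, so small timing errors translate directly into distance errors.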

Depth cameras have a wide range of applications, including robotics, autonomous vehicles, facial recognition, and object recognition. They enable machines to have a 3D perspective of their surroundings, allowing them to understand spatial relationships, detect obstacles, and navigate complex environments with precision.

Besides ToF cameras, there are other types of depth sensors, including stereo sensors, structured light sensors, and LiDAR sensors. Stereo sensors use two or more lenses to capture different views of a scene and calculate depth information based on the disparity between the images. Structured light sensors project a known pattern of light onto a scene and analyse the distortions in this pattern to deduce depth information. LiDAR, or Light Detection and Ranging, uses laser beams to measure distances and is known for its high accuracy and long-range capabilities.
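For the stereo case, the link between disparity and depth can be sketched in Python as follows. This assumes an idealised, rectified pair of identical cameras with parallel optical axes; the function name and the example values (focal length, baseline, disparity) are illustrative and do not describe any particular device.

```python
def stereo_depth_m(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Estimate depth from stereo disparity for a rectified camera pair.

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centres, in metres
    disparity_px:    horizontal shift of the same point between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero means the point is at infinity")
    # Similar triangles: depth is inversely proportional to disparity.
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical setup: 700 px focal length, 12 cm baseline, 42 px disparity.
    print(f"{stereo_depth_m(700.0, 0.12, 42.0):.2f} m")  # prints "2.00 m"
```

Because depth is inversely proportional to disparity, distant objects produce very small disparities, which is one reason stereo accuracy tends to fall off with range.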

Time-of-flight technology is used in robotics and autonomous vehicles to help them navigate their surroundings

Time-of-flight technology is a depth-sensing method that uses light to calculate the distance to an object. It is a popular solution for robotics and autonomous vehicles, enabling them to navigate their surroundings seamlessly.

Time-of-flight (ToF) cameras work by measuring the time it takes for light to travel from the camera to an object and back. This is achieved by emitting pulses of invisible infrared laser light and analysing the light reflected by objects. By measuring the interval between emitting a pulse and detecting its reflection, the camera can determine the distance to the object. ToF cameras consist of a sensor, a lighting unit, and a depth processing unit, providing robots with 3D depth data of their environment.

In robotics, ToF technology is used to enable robots to detect objects and avoid obstacles. This is particularly useful for applications such as warehouse automation, where robots need to navigate through narrow spaces and avoid collisions. With ToF cameras, robots can create 3D maps of their surroundings, identify their position within a known map, and plan their path accordingly. This technology also assists in object recognition, allowing robots to match 3D images of objects to predefined parameters.
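One common first step towards such a 3D map is converting a depth image into 3D points. The Python sketch below assumes a simple pinhole camera model; the intrinsic parameters (`fx`, `fy`, `cx`, `cy`) and the tiny depth grid are hypothetical values for illustration, not taken from any specific camera.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def depth_map_to_points(
    depth_m: List[List[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point3D]:
    """Back-project a depth map into 3D points in the camera frame.

    depth_m holds one depth value in metres per pixel (row-major).
    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth_m):
        for u, z in enumerate(row):
            if z <= 0:
                # Treat zero or negative depth as "no measurement".
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # Hypothetical 2x2 depth image; one pixel has no valid reading.
    depth = [[1.0, 0.0], [2.0, 2.0]]
    for point in depth_map_to_points(depth, fx=1.0, fy=1.0, cx=0.0, cy=0.0):
        print(point)
```

A mapping or obstacle-avoidance pipeline would then work on these points, for example by accumulating them over time as the robot moves.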

In autonomous vehicles, ToF cameras play a crucial role in enabling them to navigate without human intervention. These vehicles can use ToF technology to detect obstacles, create maps of unknown environments, and localise themselves within a map. This information helps autonomous vehicles make real-time decisions and adjust their routes accordingly, ensuring safe and efficient navigation.

ToF cameras offer several advantages for robotics and autonomous vehicles. They are compact, lightweight, and relatively inexpensive. They can operate in low-light conditions or complete darkness since they provide their own illumination. ToF cameras also provide depth data with an accuracy of roughly 1 mm to 1 cm, depending on the operating range. Additionally, they can scan an entire scene in a single frame, providing depth-sensing data at high speeds.

However, ToF cameras also have some limitations. In brightly lit conditions or outdoors, ambient light can swamp the reflected signal that the sensor is trying to detect, reducing the camera's effectiveness. Highly reflective surfaces or retroreflective materials can also confuse the cameras. Despite these challenges, advancements in ToF technology are being made to improve its robustness and flexibility.

Depth cameras on smartphones are used in conjunction with other lenses to create a depth effect

Depth cameras, often built on Time-of-Flight (ToF) technology, are sensors designed to determine the distance between the camera and the subject of an image. ToF technology is also used in applications such as robotics and autonomous vehicles, where tracking objects is crucial.

Depth cameras on smartphones work in conjunction with other lenses to enhance the camera's capabilities. While a depth camera cannot be used independently to capture photos, it assists the other lenses in judging distances. Its distance data helps the phone outline the subject, so that software algorithms can blur the rest of the image and create a depth effect.
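The idea of keeping the subject sharp and blurring everything behind it can be sketched in a few lines of Python. This is a deliberately simplified illustration, assuming a greyscale image and a matching depth map stored as nested lists; the function names and the threshold are hypothetical, and real smartphone pipelines are considerably more sophisticated.

```python
from typing import List

Grid = List[List[float]]


def box_blur_at(image: Grid, row: int, col: int) -> float:
    """Average the pixel at (row, col) with its in-bounds 3x3 neighbours."""
    height, width = len(image), len(image[0])
    total, count = 0.0, 0
    for r in range(max(0, row - 1), min(height, row + 2)):
        for c in range(max(0, col - 1), min(width, col + 2)):
            total += image[r][c]
            count += 1
    return total / count


def portrait_effect(image: Grid, depth_m: Grid, subject_max_depth_m: float) -> Grid:
    """Keep near pixels sharp and blur pixels farther than the threshold."""
    result: Grid = []
    for r, row in enumerate(image):
        out_row = []
        for c, value in enumerate(row):
            if depth_m[r][c] <= subject_max_depth_m:
                out_row.append(value)  # subject: leave untouched
            else:
                out_row.append(box_blur_at(image, r, c))  # background: blur
        result.append(out_row)
    return result


if __name__ == "__main__":
    # Hypothetical 2x2 image: left column is the subject (1 m), right is background (5 m).
    image = [[10.0, 20.0], [30.0, 40.0]]
    depth = [[1.0, 5.0], [1.0, 5.0]]
    print(portrait_effect(image, depth, subject_max_depth_m=2.0))
```

The depth map acts as a mask here: it decides where the blur is applied, while the blur itself is ordinary image processing.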

For instance, some iPhone models feature a "TrueDepth" camera, primarily used for Face ID facial recognition. The TrueDepth camera accurately maps the geometry of the user's face for secure authentication. Additionally, it is used for photography when shooting in Portrait Mode with the front-facing camera.

It is worth noting that dedicated depth cameras are not essential for flagship smartphones, as similar depth effects can be achieved using other hardware and software solutions. However, some smartphone models do incorporate depth cameras, contributing to the multi-camera setups often seen in modern devices.

Depth cameras in smartphones provide distance data, aiding other lenses in producing depth effects. They typically sit alongside ultra-wide or telephoto lenses in a multi-camera setup, where those lenses handle wide-angle and zoomed shots.

In summary, depth cameras on smartphones work in conjunction with other lenses to create depth effects in images. While not a necessity, they can enhance the camera's functionality and provide users with additional creative options for their photography.

Depth-sensing cameras enable machines to have a 3D perspective of their surroundings

Depth-sensing cameras are an essential component of modern innovations in robotics, automation, and autonomous vehicles, as they provide machines with the ability to perceive and interact with their surroundings in three dimensions. This technology is crucial for machines to navigate and understand their environment with minimal human intervention.

Depth-sensing cameras work by measuring the distance between the camera and an object or between two objects. This process, known as depth-sensing or depth mapping, allows machines to create a 3D perspective of their surroundings. One of the key applications of this technology is in autonomous vehicles, where depth-sensing cameras help the vehicle seamlessly navigate its environment.

Time-of-Flight (ToF) cameras are a commonly used type of depth-sensing technology. ToF cameras work by emitting pulses of invisible infrared laser light and measuring the time it takes for the light to reflect back to the sensor. This technology can provide depth information with an accuracy of roughly 1 mm to 1 cm, depending on the operating range, and is relatively inexpensive. ToF cameras are also compact and can be integrated into small devices, including smartphones.

Another type of depth-sensing technology is Structured Light cameras, which use a projector to illuminate the scene with patterns of light. By observing distortions in the reflected pattern, these cameras can compute the depth and contours of objects. Structured Light cameras produce highly accurate depth data but are typically effective only at short operating ranges.

Depth-sensing cameras are also used in various other applications, such as people counting and facial recognition systems, remote patient monitoring, and robotics. In robotics, depth-sensing cameras enable machines to detect obstacles and navigate their environment more effectively.

In summary, depth-sensing cameras are crucial for machines to gain a 3D perspective of their surroundings and enable them to operate with greater autonomy and accuracy. With advancements in machine learning and artificial intelligence, depth-sensing technology will continue to play a vital role in the development of autonomous machines and systems.

Depth of field (DoF) is the zone within a photo that appears acceptably sharp and in focus

Depth of field (DoF) is a fundamental concept in photography that can elevate your images from snapshots to artistic masterpieces. It is the zone within a photo that appears acceptably sharp and in focus. Every image has a point of focus, which is the distance at which the lens is focused. However, there is also an area in front of and behind this point that remains sharp, and the extent of this area is the depth of field.

The size of the zone of sharpness, or depth of field, will vary across photos due to factors such as lens aperture setting, distance to the subject, and focal length. By adjusting your camera settings and composition, you can control how much of your image is sharp and how much is blurry. This creative control is essential to expanding your photographic horizons and capturing stunning artistic images.

Deep vs. Shallow Depth of Field:

When a photo has a large zone of acceptable sharpness, it is said to have a deep depth of field. Landscapes, for example, often utilise deep DoF to showcase every detail in the scene, from the foreground to the background. On the other hand, images with very small zones of focus are said to have a shallow depth of field. Shallow DoF is commonly used in portrait photography to emphasise the subject while blurring the background, preventing any distractions.

Factors Affecting Depth of Field:

Three main factors influence the depth of field in a photo:

- Aperture (f-stop): Aperture refers to the hole in your lens that lets light into the camera. Larger apertures (smaller f-stop numbers) result in shallower depth of field, while smaller apertures (larger f-stop numbers) produce deeper depth of field.

- Distance to the subject: The closer your subject is to the camera, the shallower the depth of field becomes. Moving farther away from your subject will increase the depth of field.

- Focal length of the lens: Longer focal lengths tend to produce shallower depth of field, while shorter focal lengths yield deeper depth of field. However, this relationship only holds if the camera-subject distance stays the same. If you instead change the distance to keep the subject the same size in the frame, the depth of field stays roughly the same across focal lengths.
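To see how these three factors interact, the near and far limits of acceptable sharpness can be estimated with the standard thin-lens approximation. The Python sketch below is an illustration under stated assumptions: the function name is made up for this example, and the default circle of confusion of 0.03 mm is a commonly quoted value for full-frame sensors rather than something specified in this article.

```python
import math
from typing import Tuple


def depth_of_field_mm(
    focal_length_mm: float,
    f_number: float,
    subject_distance_mm: float,
    circle_of_confusion_mm: float = 0.03,  # assumed value, not from the article
) -> Tuple[float, float]:
    """Return the (near, far) limits of acceptable sharpness in millimetres."""
    # Hyperfocal distance: focusing here keeps everything from about half
    # this distance out to infinity acceptably sharp.
    hyperfocal = focal_length_mm ** 2 / (f_number * circle_of_confusion_mm) + focal_length_mm
    numerator = subject_distance_mm * (hyperfocal - focal_length_mm)
    near = numerator / (hyperfocal + subject_distance_mm - 2 * focal_length_mm)
    if subject_distance_mm >= hyperfocal:
        # Beyond the hyperfocal distance the far limit extends to infinity.
        return near, math.inf
    far = numerator / (hyperfocal - subject_distance_mm)
    return near, far


if __name__ == "__main__":
    # Hypothetical comparison: 50 mm lens, subject at 3 m, two apertures.
    for f_number in (2.8, 8.0):
        near, far = depth_of_field_mm(50.0, f_number, 3000.0)
        print(f"f/{f_number}: sharp from {near / 1000:.2f} m to {far / 1000:.2f} m")
```

Running the comparison with different apertures, distances, or focal lengths shows the trends described above: a larger f-number or a more distant subject widens the sharp zone, while a longer focal length at the same distance narrows it.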

By understanding these factors and how they interact, photographers can master the art of depth of field to achieve their desired effects in their images.

Frequently asked questions

Depth mode in a camera refers to the camera's ability to sense and calculate the distance between the camera and the subject of the image. This is usually measured with lasers or LEDs.

There are several depth-sensing technologies available, including Time-of-Flight (ToF), Structured Light Cameras, Stereo Depth Cameras, and LiDAR. Each technology has its advantages and disadvantages, and the choice depends on the specific application.

On smartphones, depth mode allows for more creative control over the image, because the depth data lets software simulate a shallow depth of field that emphasises the subject and blurs the background. Depth sensing also assists in applications such as facial recognition, robotics, and autonomous vehicles.