Camera calibration is a critical process in computer vision that enables the recovery of three-dimensional (3D) structure from 2D images. It involves determining the internal and external parameters of a camera, which relate pixel positions in an image to positions in the real world. This process is essential for applications such as 3D reconstruction, object tracking, augmented reality, and image analysis. Accurate camera calibration makes precise measurements and reliable analysis possible, and it improves the performance of computer vision algorithms.

The camera calibration process typically includes the use of a calibration pattern or template, such as a checkerboard, with known dimensions. By capturing images of this pattern, the intrinsic and extrinsic parameters of the camera can be estimated. Intrinsic parameters refer to the focal length, optical centre, and lens distortion coefficients, while extrinsic parameters describe the orientation and location of the camera.
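The pattern's known dimensions are what turn its corners into 3D reference points. Below is a minimal NumPy sketch. It assumes the common convention, used in OpenCV's calibration tutorial, that the board itself defines the world frame, so every corner lies in the plane Z = 0. The function name and the 9x6 board with 25 mm squares are hypothetical.

```python
import numpy as np

# Hypothetical helper (not a library function): 3D corner coordinates of a flat checkerboard.
def checkerboard_object_points(cols: int, rows: int, square_size: float) -> np.ndarray:
    """cols, rows: number of *inner* corners along each board direction.
    square_size: edge length of one square, in the unit you want results in (e.g. mm).
    The board defines the world frame, so every corner has Z = 0."""
    # (cols*rows, 2) integer grid indices, x varying fastest: (0,0), (1,0), ..., (0,1), ...
    grid = np.mgrid[0:cols, 0:rows].T.reshape(-1, 2)
    points = np.zeros((cols * rows, 3), dtype=np.float32)
    points[:, :2] = grid * square_size  # scale indices to physical units; Z stays 0
    return points

obj = checkerboard_object_points(9, 6, 25.0)  # hypothetical 9x6 inner corners, 25 mm squares
print(obj.shape)  # (54, 3)
```

The corner order must match the order in which the detector reports image points. OpenCV documents the output of findChessboardCorners() as row by row, left to right.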

Camera calibration bridges the gap between image coordinates and real-world coordinates, allowing computer vision systems to extract meaningful measurements from images. It also makes it possible to correct the distortions introduced by the lens and the camera's construction, so that downstream algorithms, including AI models, work with geometrically accurate images.

Correcting lens distortion

To correct lens distortion, three fundamental steps are necessary:

- Calibrate the camera to obtain intrinsic camera parameters, including lens distortion characteristics.

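- Refine the camera matrix to control how many invalid (black) pixels appear at the borders of the undistorted image. In OpenCV this is the alpha parameter of getOptimalNewCameraMatrix(): 0 keeps only valid pixels by cropping, and 1 keeps every source pixel.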

- Use the refined camera matrix to remove distortion from the image.
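The three steps can be sketched for individual points with NumPy. The sketch assumes that step 1 has already produced an intrinsic matrix K and distortion coefficients in OpenCV's (k1, k2, p1, p2, k3) order. That is one common parametrisation, not the only one. All numbers and helper names are made up, and step 2 is skipped by reusing K as the new camera matrix. For whole images, OpenCV's getOptimalNewCameraMatrix() and undistort() cover steps 2 and 3.

```python
import numpy as np

# Hypothetical helpers; distortion coefficients use OpenCV's (k1, k2, p1, p2, k3) order.
def distort(xy, k1, k2, p1, p2, k3=0.0):
    """Apply radial + tangential distortion to normalised image coordinates, shape (N, 2)."""
    x, y = xy[:, 0], xy[:, 1]
    r2 = x * x + y * y
    radial = 1 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    x_d = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    y_d = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return np.stack([x_d, y_d], axis=1)

def undistort(xy_d, k1, k2, p1, p2, k3=0.0, iterations=20):
    """Invert distort() by fixed-point iteration, since the model has no closed-form inverse."""
    xy = xy_d.copy()  # initial guess: the distorted coordinates themselves
    for _ in range(iterations):
        x, y = xy[:, 0], xy[:, 1]
        r2 = x * x + y * y
        radial = 1 + k1 * r2 + k2 * r2**2 + k3 * r2**3
        dx = 2 * p1 * x * y + p2 * (r2 + 2 * x * x)  # tangential terms at the current guess
        dy = p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
        xy = np.stack([(xy_d[:, 0] - dx) / radial, (xy_d[:, 1] - dy) / radial], axis=1)
    return xy

def undistort_pixels(uv, K, dist, new_K=None):
    """Step 3 for pixel coordinates (N, 2); new_K defaults to K (step 2 skipped). Skew is ignored."""
    new_K = K if new_K is None else new_K
    xy_d = np.stack([(uv[:, 0] - K[0, 2]) / K[0, 0], (uv[:, 1] - K[1, 2]) / K[1, 1]], axis=1)
    xy = undistort(xy_d, *dist)  # normalised, distortion-free coordinates
    return np.stack([xy[:, 0] * new_K[0, 0] + new_K[0, 2],
                     xy[:, 1] * new_K[1, 1] + new_K[1, 2]], axis=1)

K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])  # made-up intrinsics
dist = (-0.2, 0.05, 0.001, -0.0005, 0.0)                                   # made-up coefficients
print(undistort_pixels(np.array([[600.0, 80.0]]), K, dist))
```

The fixed-point loop converges quickly for moderate distortion. Strong distortion or points far from the centre may need more iterations or a different solver.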

Photo-editing software such as Adobe Lightroom and Photoshop, as well as OpenCV and MATLAB, offers tools to correct lens distortion. In Lightroom, the Lens Corrections tab allows automatic and manual adjustments for barrel and pincushion distortion. Photoshop's Lens Correction filter fixes the same distortions and overlays a grid to help you align the image. For calibration itself, OpenCV's findChessboardCorners() function automatically detects the inner corners of a chessboard pattern.

It is worth noting that some lenses, like fisheye lenses, are designed to distort images for creative purposes. However, for accurate measurements and analysis, correcting lens distortion is crucial.

Mapping pixels to real-world coordinates

Camera calibration is a crucial process in computer vision, enabling accurate measurements and analysis for various applications. One of the key aspects of camera calibration is mapping pixels to real-world coordinates, which involves determining the intrinsic and extrinsic parameters of the camera.

The intrinsic parameters describe the internal geometry of the camera: the focal length, the optical centre (principal point), the skew coefficient, and the radial distortion coefficients. These parameters map between camera coordinates and pixel coordinates within the image frame. For example, the focal length scales how large an object at a given distance appears in the image. The principal point, where the optical axis meets the image plane, is the reference point from which those image offsets are measured.
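Below is a minimal sketch of the intrinsic mapping from camera coordinates to pixels. It assumes the usual upper-triangular intrinsic matrix K, with focal lengths fx, fy and principal point (cx, cy) in pixels and an optional skew term, and it leaves out lens distortion. The helper names and numbers are illustrative.

```python
import numpy as np

# Hypothetical helpers illustrating the intrinsic matrix; distortion is ignored.
def intrinsic_matrix(fx, fy, cx, cy, skew=0.0):
    """3x3 intrinsic matrix K: focal lengths and principal point in pixels."""
    return np.array([[fx, skew, cx],
                     [0.0, fy, cy],
                     [0.0, 0.0, 1.0]])

def camera_to_pixel(K, X_cam):
    """Project camera-frame points (N, 3) with Z > 0 to pixel coordinates (N, 2)."""
    uvw = X_cam @ K.T                 # homogeneous pixel coordinates, one row per point
    return uvw[:, :2] / uvw[:, 2:3]   # perspective division by depth

K = intrinsic_matrix(800.0, 800.0, 320.0, 240.0)
print(camera_to_pixel(K, np.array([[0.0, 0.0, 5.0], [0.5, 0.25, 1.0]])))
# pixels (320, 240) and (720, 440): a point on the optical axis lands on the principal point
```

Doubling fx and fy doubles every point's offset from the principal point, which is the sense in which focal length sets the size of an object in the image.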

On the other hand, extrinsic parameters describe the orientation and location of the camera in the real world. This includes the rotation (R) and translation (t) of the camera with respect to a chosen world coordinate system. By knowing the extrinsic parameters, we can transform world points into the camera's coordinate system.
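The extrinsic step can be sketched as follows, assuming the convention X_cam = R X_world + t used by OpenCV and most textbooks. The helper names are hypothetical. The sketch also shows that the camera's own position in world coordinates is -R^T t, not t, which is a common source of confusion.

```python
import numpy as np

# Hypothetical helpers for the extrinsic (world -> camera) transform.
def rotation_z(angle_rad):
    """Rotation matrix about the Z axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def world_to_camera(R, t, X_world):
    """X_cam = R @ X_world + t, applied to each row of an (N, 3) array."""
    return X_world @ R.T + t

def camera_center(R, t):
    """World position of the camera: the point that maps to the camera origin."""
    return -R.T @ t

R = rotation_z(np.pi / 2)
t = np.array([0.0, 0.0, 5.0])
print(world_to_camera(R, t, np.array([[1.0, 0.0, 0.0]])))  # approximately (0, 1, 5)
print(camera_center(R, t))                                  # approximately (0, 0, -5)
```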

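To relate pixels to real-world coordinates, we use the camera matrix, which combines the intrinsic and extrinsic parameters. It is a 3x4 matrix that maps points in the 3D world scene onto the 2D image plane. Conventions that multiply row vectors, as some MATLAB functions do, use its 4x3 transpose instead. Because this projection discards depth, a single pixel corresponds to a ray of possible 3D points rather than to one point. Recovering actual real-world coordinates therefore needs an extra constraint, such as a known plane, a known object size, or a second view.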
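In the column-vector convention, the projection of a world point (X, Y, Z) to a pixel (u, v) is usually written as shown below. The symbols f_x, f_y (focal lengths in pixels), c_x, c_y (principal point), γ (skew), and s (a scale factor equal to the point's depth in camera coordinates) are standard notation rather than names used elsewhere in this article.

```latex
s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
  = K \,[\, R \mid t \,] \begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix}
  = P \begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix},
\qquad
K = \begin{bmatrix} f_x & \gamma & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}
```

Here K is 3x3 and [R | t] is 3x4, so P = K[R | t] has 3 rows and 4 columns.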

The process of camera calibration involves capturing images of a calibration pattern, such as a checkerboard or a grid, with known dimensions. By extracting feature points from these images and matching them to corresponding 3D world points, we can solve for the camera matrix and distortion coefficients. This allows us to correct for lens distortion and accurately map pixels to their corresponding real-world locations.
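When distortion is ignored and the 3D points are not all on one plane, one classic way to solve for the camera matrix is the Direct Linear Transform (DLT). It is swapped in here for illustration, and the article does not say which solver it has in mind. A flat checkerboard is a degenerate case for this method and is normally handled through per-image homographies instead. The helper name is hypothetical.

```python
import numpy as np

# Hypothetical helper: Direct Linear Transform (DLT) estimate of the 3x4 camera matrix.
def estimate_projection_matrix(X_world, uv):
    """Estimate P (up to scale) from N >= 6 correspondences between non-coplanar
    3D points (N, 3) and pixels (N, 2). Lens distortion is ignored."""
    rows = []
    for (X, Y, Z), (u, v) in zip(X_world, uv):
        Xh = [X, Y, Z, 1.0]
        # u = (p1 . Xh) / (p3 . Xh)  ->  p1 . Xh - u * (p3 . Xh) = 0; likewise for v with p2
        rows.append([*Xh, 0.0, 0.0, 0.0, 0.0, *(-u * c for c in Xh)])
        rows.append([0.0, 0.0, 0.0, 0.0, *Xh, *(-v * c for c in Xh)])
    A = np.asarray(rows)
    _, _, Vt = np.linalg.svd(A)
    P = Vt[-1].reshape(3, 4)      # right singular vector of the smallest singular value
    return P / np.linalg.norm(P)  # P is only defined up to scale; fix its norm to 1
```

With noisy real measurements, the point coordinates are usually normalised before building A, and the linear estimate is refined by minimising reprojection error.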

The accuracy of camera calibration is crucial, especially for applications such as augmented reality, robotics, and 3D reconstruction. By properly calibrating the camera and mapping pixels to real-world coordinates, we can ensure reliable measurements, object tracking, and accurate analysis in computer vision tasks.

Determining internal camera parameters

Understanding Internal Parameters:

The first step is to understand the different internal or intrinsic parameters that need to be determined. These include the focal length, optical centre or principal point, radial distortion coefficients, skew coefficient, and scale factor. Each of these parameters plays a role in describing how the camera captures and represents the 3D world in 2D images.

Choosing a Calibration Model:

The next step is to choose an appropriate calibration model. The most commonly used model is the pinhole camera model, which assumes a camera without a lens. This model serves as a basic representation of the image formation process and can be adjusted to account for lens distortion in real cameras. Other models, like the fisheye camera model, are also available for cameras with specific characteristics, such as a wide field of view.

Setting Up a Calibration Pattern:

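To determine the internal parameters, you will need a calibration pattern with known dimensions. A common choice is a checkerboard or chessboard pattern, which provides easily identifiable points for calibration. The pattern should be flat and rigid, and it must lie fully within the camera's field of view. Do not capture it only parallel to the camera and centred in the image. The calibration needs views at several different tilts, with the pattern reaching different parts of the frame, including the corners where lens distortion is strongest.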

Capturing Calibration Images:

Take multiple images of the calibration pattern from different positions and orientations. Ensure that the entire pattern is visible in the images and that the camera captures the pattern's corners or dots accurately. These images will be used to extract feature points and establish correspondences between the 3D world and the 2D image.

Solving for Internal Parameters:

Use the captured images and their corresponding 2D and 3D points to solve for the internal camera parameters. This is typically done with calibration software that implements an algorithm such as Zhang's method. Zhang's method first computes a closed-form estimate from the plane-to-image homography of each view. It then refines all parameters, including focal length, principal point, and distortion coefficients, by minimising reprojection error with nonlinear least squares.
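The closed-form first stage of Zhang's method can be sketched in NumPy. The sketch assumes that the homography of each view has already been estimated, and a full intrinsic matrix including skew needs at least three views. It returns only the initial estimate that the method later refines, and distortion is ignored. Rescaling pixel units by `scale` is a conditioning step added for this sketch, and the helper names are hypothetical.

```python
import numpy as np

# Hypothetical helpers: closed-form intrinsic estimate from homographies (Zhang's first stage).
def _v(H, i, j):
    """Zhang's v_ij vector built from columns i and j of a homography H."""
    hi, hj = H[:, i], H[:, j]
    return np.array([hi[0] * hj[0],
                     hi[0] * hj[1] + hi[1] * hj[0],
                     hi[1] * hj[1],
                     hi[2] * hj[0] + hi[0] * hj[2],
                     hi[2] * hj[1] + hi[1] * hj[2],
                     hi[2] * hj[2]])

def intrinsics_from_homographies(Hs, scale=1000.0):
    """Estimate K from >= 3 plane-to-image homographies (exact or noisy)."""
    T = np.diag([1.0 / scale, 1.0 / scale, 1.0])  # rescale pixel units for conditioning
    V = []
    for H in Hs:
        Hn = T @ H
        V.append(_v(Hn, 0, 1))                 # constraint h1^T B h2 = 0
        V.append(_v(Hn, 0, 0) - _v(Hn, 1, 1))  # constraint h1^T B h1 = h2^T B h2
    _, _, Vt = np.linalg.svd(np.asarray(V))
    B11, B12, B22, B13, B23, B33 = Vt[-1]      # B = K^-T K^-1, known only up to scale
    v0 = (B12 * B13 - B11 * B23) / (B11 * B22 - B12**2)
    lam = B33 - (B13**2 + v0 * (B12 * B13 - B11 * B23)) / B11
    alpha = np.sqrt(lam / B11)
    beta = np.sqrt(lam * B11 / (B11 * B22 - B12**2))
    gamma = -B12 * alpha**2 * beta / lam
    u0 = gamma * v0 / beta - B13 * alpha**2 / lam
    K_norm = np.array([[alpha, gamma, u0], [0.0, beta, v0], [0.0, 0.0, 1.0]])
    return np.linalg.inv(T) @ K_norm           # undo the pixel rescaling
```

With real, noisy homographies, this estimate is only the starting point for the nonlinear refinement described above.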

Evaluating Accuracy:

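After determining the internal parameters, evaluate the accuracy of the calibration. You can plot the relative locations of the camera and the calibration pattern to check that the estimated poses look plausible. You can also compute the reprojection error, which is the pixel distance between each detected pattern point and the same point projected back into the image using the estimated parameters. Alternatively, use a camera calibrator tool that reports these diagnostics.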
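The reprojection error is commonly summarised as a root-mean-square value in pixels. OpenCV's calibrateCamera(), for instance, returns an overall RMS reprojection error as its first result. The sketch below computes that figure for a given 3x4 camera matrix, ignores distortion, and uses a hypothetical helper name and made-up points.

```python
import numpy as np

# Hypothetical helper: RMS reprojection error for a 3x4 camera matrix (no distortion).
def rms_reprojection_error(P, X_world, uv_observed):
    """Root-mean-square pixel distance between observed and reprojected points."""
    Xh = np.hstack([X_world, np.ones((len(X_world), 1))])  # homogeneous 3D points
    proj = Xh @ P.T
    uv_proj = proj[:, :2] / proj[:, 2:3]                    # reprojected pixel positions
    residuals = np.linalg.norm(uv_proj - uv_observed, axis=1)  # per-point error in pixels
    return float(np.sqrt(np.mean(residuals**2)))

P = np.array([[800.0, 0.0, 320.0, 0.0],
              [0.0, 800.0, 240.0, 0.0],
              [0.0, 0.0, 1.0, 0.0]])
X = np.array([[0.0, 0.0, 5.0], [1.0, 0.0, 2.0]])           # reproject to (320, 240), (720, 240)
observed = np.array([[323.0, 244.0], [723.0, 244.0]])      # each off by (3, 4) pixels
print(rms_reprojection_error(P, X, observed))              # 5.0
```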

By following these steps and fine-tuning the calibration process, you can optimise the determination of internal camera parameters, leading to improved camera calibration for computer vision tasks.

Understanding camera models

Camera models are an essential aspect of understanding camera calibration in computer vision. A camera model mathematically describes the relationship between a point in 3D space and its projection onto the image plane. The most well-known model is the pinhole camera model, which assumes an ideal camera without lenses, where light rays pass through an aperture and create an inverted image. This model serves as a basic representation and does not account for lens distortions or blurring caused by finite-sized apertures.

In the pinhole camera model, the camera aperture is a point, and the image plane is located parallel to the X1 and X2 axes, at a distance 'f' from the origin in the negative direction of the X3 axis. The projection of a 3D point onto the image plane results in a 2D image with coordinates that depend on the original 3D point's location. This model provides a first-order approximation of mapping a 3D scene to a 2D image and is often used in computer vision and graphics.
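For a point (x1, x2, x3) in the X1X2X3 frame described above, with x3 > 0 in front of the aperture, similar triangles give its coordinates (y1, y2) on the image plane. The minus sign is the image inversion. Many texts place a virtual image plane at +f instead, which removes the sign, and camera matrices use that convention. The worked numbers below (in metres, with a 50 mm focal length) are made up.

```latex
\begin{pmatrix} y_1 \\ y_2 \end{pmatrix}
  = -\frac{f}{x_3} \begin{pmatrix} x_1 \\ x_2 \end{pmatrix},
\qquad
f = 0.05,\ (x_1, x_2, x_3) = (0.2,\ 0.1,\ 2)
\;\Rightarrow\;
(y_1, y_2) = -\frac{0.05}{2}\,(0.2,\ 0.1) = (-0.005,\ -0.0025)
```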

However, real-world cameras introduce distortions and blurring due to lenses and finite-sized apertures. To address this, camera calibration aims to determine the intrinsic and extrinsic parameters of a camera. Intrinsic parameters include focal length, optical centre, and radial distortion coefficients, while extrinsic parameters describe the camera's orientation and location in 3D space.

The process of camera calibration involves capturing images of a calibration pattern, such as a checkerboard, and using these images to extract feature points and match them to corresponding 3D world points. By solving calibration equations, we can estimate the camera parameters, correct distortions, and ensure accurate measurements for various applications.

Another important concept in camera models is the camera matrix, which encodes the parameters of the pinhole camera. This 3-by-4 matrix maps the 3D world scene onto the image plane, taking into account both extrinsic and intrinsic parameters. Conventions that multiply row vectors, as some MATLAB functions do, write it as its 4-by-3 transpose. The extrinsic parameters define the camera's position and orientation in 3D space, while the intrinsic parameters represent the optical centre and focal length.

In summary, understanding camera models, particularly the pinhole camera model, is crucial for camera calibration in computer vision. By mathematically describing the relationship between 3D points and their 2D projections, we can calibrate cameras to correct distortions and enable accurate measurements for a variety of applications.

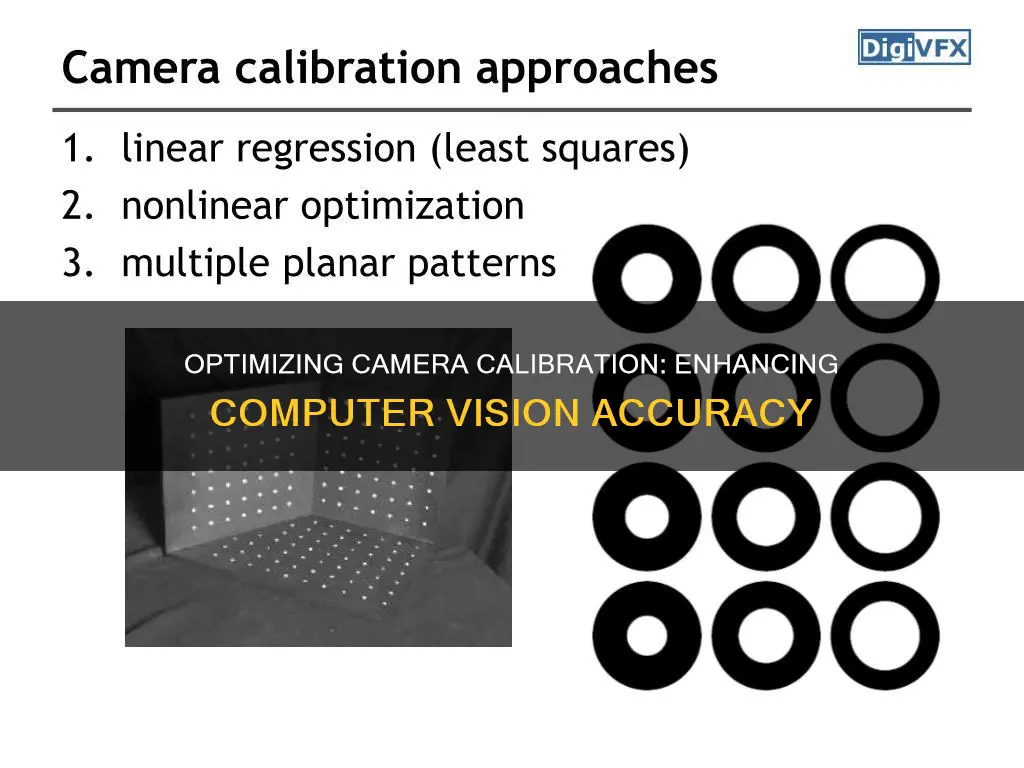

Calibration techniques

Most calibration techniques are built on the pinhole camera model, a basic representation of a camera without a lens. In this model, light rays pass through a small aperture and form an inverted image on the opposite side of the camera. The pinhole camera parameters are represented by a camera matrix that maps the 3D world scene onto the 2D image plane. However, this model does not account for lens distortion.

To address lens distortion, the camera model is combined with radial and tangential distortion coefficients. Radial distortion occurs when light rays bend more at the edges of a lens than at its optical centre, causing straight lines to appear curved in the image. Tangential distortion, on the other hand, happens when the lens and image plane are not parallel, resulting in an image that appears slanted and stretched. By incorporating these distortion coefficients, the camera model can more accurately represent the relationship between the camera image and the real world.
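One common way to write the combined model, used by OpenCV and often called the Brown-Conrady model, acts on normalised image coordinates (x, y). These are camera coordinates divided by depth, before the intrinsic matrix is applied. The names k1, k2, k3 (radial) and p1, p2 (tangential) come from that convention, not from this article. Other tools may order or truncate the coefficients differently.

```latex
\begin{aligned}
r^2 &= x^2 + y^2 \\
x_d &= x\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6) + 2 p_1 x y + p_2\,(r^2 + 2x^2) \\
y_d &= y\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6) + p_1\,(r^2 + 2y^2) + 2 p_2 x y
\end{aligned}
```

With k1 < 0, points far from the centre are pulled inward (barrel distortion). With k1 > 0, they are pushed outward (pincushion distortion).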

Another technique used for camera calibration is the fisheye camera model, which is suitable for cameras with a wide field of view, up to 195 degrees. This model accounts for the high distortion produced by fisheye lenses, which cannot be adequately described by the pinhole model.
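The article does not say which fisheye model it means. The sketch below uses the one in OpenCV's fisheye module, in which the image radius grows with the ray angle θ as θ_d = θ(1 + k1θ² + k2θ⁴ + k3θ⁶ + k4θ⁸). MATLAB's fisheye calibration is based on a different model, by Scaramuzza. OpenCV computes θ from x/z, which assumes z > 0. Using atan2 here is an assumption of this sketch, so that rays at or beyond 90 degrees, which a pinhole projection cannot handle, still land at finite pixel positions. The helper name is hypothetical and skew is ignored.

```python
import numpy as np

# Hypothetical helper: OpenCV-style fisheye projection, generalised with atan2 (sketch assumption).
def fisheye_project(X_cam, fx, fy, cx, cy, k=(0.0, 0.0, 0.0, 0.0)):
    """Project camera-frame points (N, 3) to pixels (N, 2); k = (k1, k2, k3, k4)."""
    x, y, z = X_cam[:, 0], X_cam[:, 1], X_cam[:, 2]
    r = np.hypot(x, y)
    theta = np.arctan2(r, z)            # angle between the ray and the optical axis
    t2 = theta**2
    k1, k2, k3, k4 = k
    theta_d = theta * (1 + k1 * t2 + k2 * t2**2 + k3 * t2**3 + k4 * t2**4)
    safe_r = np.where(r > 0, r, 1.0)    # avoid 0/0 for points on the optical axis
    scale = np.where(r > 0, theta_d / safe_r, 0.0)
    return np.stack([fx * x * scale + cx, fy * y * scale + cy], axis=1)

# A ray 97.5 degrees off-axis (the edge of a 195-degree field of view) still projects.
a = np.radians(97.5)
print(fisheye_project(np.array([[np.sin(a), 0.0, np.cos(a)]]), 300.0, 300.0, 640.0, 480.0))
```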

In practice, camera calibration often involves capturing images of a calibration pattern, such as a checkerboard or a reference array of markers, with known dimensions. By extracting feature points from these images and matching them to corresponding 3D world points, the system can solve for the camera parameters. This process allows for the estimation of intrinsic parameters like focal length and principal point, as well as extrinsic parameters that describe the camera's orientation and location.
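For a flat pattern, solving for the camera parameters typically starts by estimating a homography per image that maps board coordinates to pixels. The sketch below uses the normalised DLT with Hartley-style point normalisation. It is not the exact code of any toolkit, and the helper names are hypothetical. OpenCV exposes cv2.findHomography() for the same job.

```python
import numpy as np

# Hypothetical helper: normalised DLT estimate of a plane-to-image homography.
def estimate_homography(plane_xy, uv):
    """Estimate H with [u, v, 1] ~ H [X, Y, 1] from N >= 4 board (N, 2) / pixel (N, 2) pairs."""
    def normaliser(pts):
        # Translate to the centroid and scale so the mean distance from it is sqrt(2).
        mean = pts.mean(axis=0)
        s = np.sqrt(2) / np.mean(np.linalg.norm(pts - mean, axis=1))
        return np.array([[s, 0.0, -s * mean[0]], [0.0, s, -s * mean[1]], [0.0, 0.0, 1.0]])

    T_src, T_dst = normaliser(plane_xy), normaliser(uv)
    src = np.column_stack([plane_xy, np.ones(len(plane_xy))]) @ T_src.T
    dst = np.column_stack([uv, np.ones(len(uv))]) @ T_dst.T
    rows = []
    for (X, Y, W), (u, v, _) in zip(src, dst):
        rows.append([X, Y, W, 0.0, 0.0, 0.0, -u * X, -u * Y, -u * W])
        rows.append([0.0, 0.0, 0.0, X, Y, W, -v * X, -v * Y, -v * W])
    _, _, Vt = np.linalg.svd(np.asarray(rows))
    H_norm = Vt[-1].reshape(3, 3)                # homography between normalised point sets
    H = np.linalg.inv(T_dst) @ H_norm @ T_src    # undo both normalisations
    return H / H[2, 2]                           # fix the arbitrary scale
```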

Additionally, toolkits such as MATLAB's Computer Vision Toolbox and OpenCV provide functions and algorithms that facilitate camera calibration. Their features include automatic detection of calibration patterns, which makes the process more accessible and efficient.

Frequently asked questions

Camera calibration is a fundamental process in computer vision that involves determining the internal and external parameters of a camera to correct distortions and ensure accurate measurements. This allows us to map pixels in an image to their corresponding points in the real world, enabling various applications such as 3D reconstruction, object tracking, and augmented reality.

Camera calibration typically involves capturing images of a calibration pattern, such as a checkerboard, with known dimensions. By extracting feature points and matching them to corresponding 3D world points, we can solve for the camera parameters, including focal length, principal point, and lens distortion coefficients.

Camera calibration improves the performance of computer vision algorithms by providing undistorted images. It enables accurate measurements, object recognition, and mapping in computer vision applications. Calibration also helps AI models in computer vision to perceive the world more accurately through 2D representations, enhancing their ability to extract meaningful insights from images.