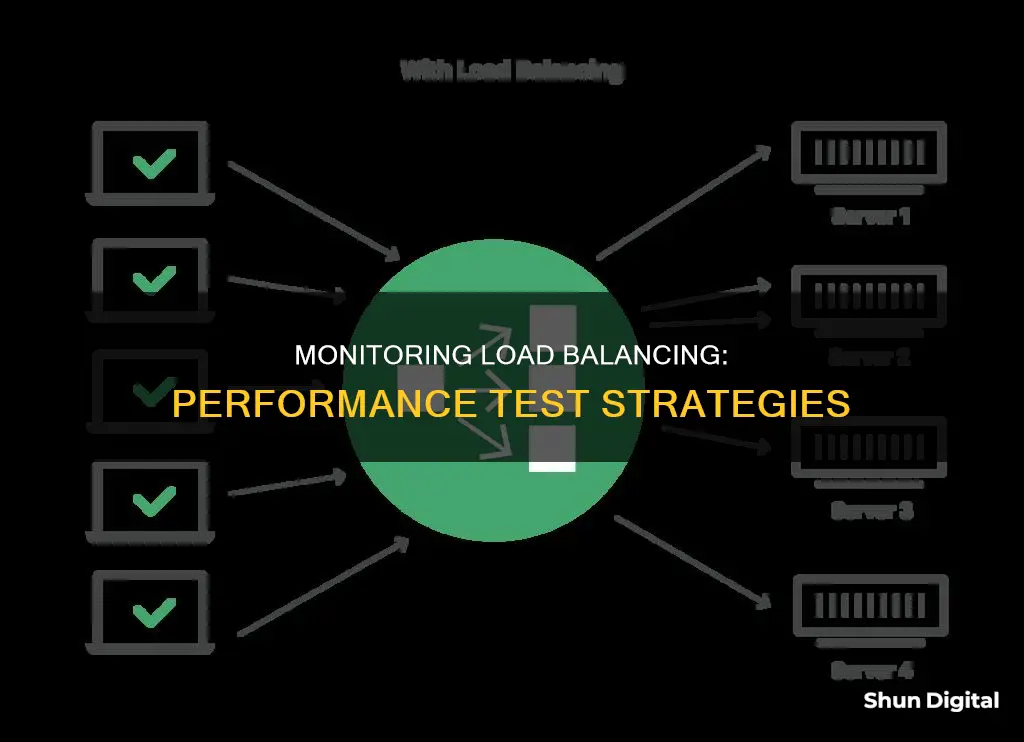

Load balancing is crucial to the performance of high-demand applications. By distributing incoming user requests across multiple servers, a load balancer prevents any single server from being overloaded and improves application performance.

To monitor load balancing effectively during performance test executions, first establish a baseline: gather data on the system during a known healthy period to serve as a reference point for comparison. Tools such as the Server Performance Advisor (SPA) and Performance Monitor can track performance characteristics and trends.

When testing load balancing, measure the backend service's performance independently of the load balancer. Useful dimensions include request throughput, request and connection concurrency, connection throughput, latency, and error rate. Internal service metrics, such as system resource utilization and the use of other bounded resources, show how healthy the server is under load. Load testing tools measure these scaling dimensions and support both capacity planning and overload testing. The table below summarizes what to measure and which metric or tool covers it.

| What is measured | Metric or tool |
|---|---|
| Number of requests served per second | Request throughput |
| Number of requests processed concurrently | Request concurrency |
| Number of connections initiated by clients per second | Connection throughput |
| Number of client connections processed concurrently | Connection concurrency |
| Total elapsed time between the beginning of the request and the end of the response | Request latency |
| How often requests cause errors, such as HTTP 5xx errors and prematurely closed connections | Error rate |
| System resources, such as CPU, RAM, and file handles (sockets) | Use of system resources |
| Non-system resources that could be depleted under load, such as at the application layer | Use of other bounded resources |
| Behaviour under overload | Resilience against resource exhaustion |
| Performance under low load vs high load | Response time |
| Performance characteristics of a new system | Server Performance Advisor |
| Performance trends of an active system | Server Performance Advisor |
| Performance counters | Performance Monitor |

Use the Server Performance Advisor to determine performance characteristics

The Server Performance Advisor (SPA) is a tool for optimising server performance on Windows Server 2008 and Windows Server 2012. It collects performance data from one or more servers and can be used to quickly determine the performance characteristics of a new system and to track the performance trends of an active system.

SPA is composed of two parts: the SPA framework, which collects performance data, and the Advisor Packs (APs), which analyse the data. APs contain a set of performance rules that define what data gets collected from the server and are used to assess the server's behaviour. For example, if data from a server shows a packet retransmit rate of more than 10% for any network adapter, and that network adapter has a lot of send activity, a warning is logged in a report.
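The retransmit example above can be pictured as a simple threshold rule. The following is only an illustrative sketch in Python, not how SPA or its Advisor Packs are implemented: the `AdapterSample` type, the function name, and the `min_segments_sent` cut-off for "a lot of send activity" are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class AdapterSample:
    """Hypothetical per-adapter counters collected over one sampling window."""
    name: str
    segments_sent: int
    segments_retransmitted: int


def retransmit_warnings(samples, max_retransmit_ratio=0.10, min_segments_sent=10_000):
    """Return one warning string per adapter that breaks the rule.

    min_segments_sent is an assumed stand-in for "a lot of send activity";
    the source text does not give a concrete number.
    """
    warnings = []
    for s in samples:
        # Skip idle or lightly used adapters: a ratio computed from a
        # handful of segments is too noisy to act on.
        if s.segments_sent == 0 or s.segments_sent < min_segments_sent:
            continue
        ratio = s.segments_retransmitted / s.segments_sent
        if ratio > max_retransmit_ratio:
            warnings.append(
                f"{s.name}: retransmit rate {ratio:.1%} exceeds {max_retransmit_ratio:.0%}"
            )
    return warnings
```

Requiring both conditions keeps the rule from firing on adapters that barely send any traffic.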

SPA can be used to collect metrics and diagnose performance issues on Windows Server 2012, Windows Server 2008 R2, and Windows Server 2008 for up to 100 servers, without adding software agents or reconfiguring production servers. It generates comprehensive performance reports and historical charts with recommendations.

To use SPA, first set up a data collection session. Choose which APs to use based on the target server's role, then import them into SPA; they define what data is collected and how the server's performance is later assessed. The Core OS AP is a generic pack that covers fundamentals such as I/O and resource utilisation, while the other packs are role-specific.

Next, you define how long you want to collect data for and choose whether you want a one-time collection or to collect data at regular intervals. Keep in mind that longer collections will result in more data to process.

During data collection, SPA gathers data from various sources, including Event Tracing for Windows (ETW) events, Windows Management Instrumentation (WMI), performance counters, configuration files, and registry keys. This data is saved to a file share specified in advance by the administrator.

Once the data collection is complete, SPA stores the data in a SQL database, allowing for historical charting to help with trending performance behaviours over time. Finally, SPA generates a performance report, summarising the findings, identifying issues, and providing possible mitigation strategies.

SPA helps system administrators optimise server performance and troubleshoot issues. Following the steps above gives you a repeatable way to determine the performance characteristics of your servers.

Measure request throughput

Measuring request throughput is a critical step in load testing to ensure application performance and stability. Throughput is a measure of how many requests your application can handle over a period of time and is often measured in transactions per second (TPS). It is a key performance indicator (KPI) in performance testing, helping to evaluate the performance and scalability of a system.

To measure request throughput, you can use tools such as ApacheBench (ab), which is a part of the standard Apache source distribution. ab can generate a single-threaded load by sending a specified number of concurrent requests to a server. For example, the following command will send a total of 500 requests to the specified environment, divided into packs of 10 concurrent requests:

`ab -n 500 -c 10 -g res1.tsv {URL_to_your_env}`

Here, `-n` sets the total number of requests, `-c` sets the number of requests issued concurrently, and `-g` writes all measured values to a gnuplot-format output file. That file uses tab-separated values (TSV), so it can also be opened in a spreadsheet program for analysis.
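As a rough illustration, the TSV file can also be summarised with a few lines of Python. This sketch assumes the file has the header `starttime`, `seconds`, `ctime`, `dtime`, `ttime`, `wait`, with `seconds` holding the request start as a Unix timestamp and `ttime` the total request time in milliseconds; check the header of your own file before relying on it. The function name is hypothetical.

```python
import csv
import io


def summarize_ab_tsv(text):
    """Summarise the contents of an `ab -g` file passed in as a string."""
    rows = list(csv.DictReader(io.StringIO(text), delimiter="\t"))
    if not rows:
        raise ValueError("no data rows found")

    starts = [int(r["seconds"]) for r in rows]    # assumed: epoch seconds
    total_ms = [int(r["ttime"]) for r in rows]    # assumed: milliseconds

    # Approximate the wall-clock span as first start to last completion.
    # Start times have one-second resolution, so this is coarse for short runs.
    end = max(s + t / 1000 for s, t in zip(starts, total_ms))
    elapsed = end - min(starts)

    return {
        "requests": len(rows),
        "mean_ttime_ms": sum(total_ms) / len(rows),
        "approx_rps": len(rows) / elapsed if elapsed > 0 else None,
    }
```

A typical call would be `summarize_ab_tsv(open("res1.tsv").read())`. For precise figures, the summary that ab prints at the end of a run is the better source.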

Another tool you can use is Nighthawk, an open-source tool developed in coordination with the Envoy project. Nighthawk can generate client load, visualize benchmarks, and measure server performance for most load-testing scenarios of HTTPS services. An example command to test HTTP/1 is as follows:

`nighthawk_client http://10.20.30.40:80 --duration 600 --open-loop --no-default-failure-predicates --protocol http1 --request-body-size 5000 --concurrency 16 --rps 500 --connections 200`

In this command, `--duration` specifies the total test run time in seconds, `--request-body-size` is the size of the POST payload in each request, `--concurrency` is the total number of concurrent event loops, `--rps` is the target rate of requests per second per event loop, and `--connections` is the number of concurrent connections per event loop.
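Because `--rps` and `--connections` apply per event loop, the aggregate load is larger than the individual flag values suggest. The short Python sketch below works out the totals for the example command, taking the per-event-loop description above at face value; the function name is made up for illustration.

```python
def aggregate_load(concurrency, rps_per_loop, connections_per_loop, duration_s):
    """Scale per-event-loop settings up to whole-test totals."""
    target_rps = concurrency * rps_per_loop
    return {
        "target_rps": target_rps,
        "max_connections": concurrency * connections_per_loop,
        "target_requests": target_rps * duration_s,
    }


# Values taken from the example command: 16 loops, 500 rps and
# 200 connections per loop, running for 600 seconds.
totals = aggregate_load(16, 500, 200, 600)
print(totals)
# {'target_rps': 8000, 'max_connections': 3200, 'target_requests': 4800000}
```

These are targets rather than guarantees: the achieved rate depends on whether the client machine and the server can keep up.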

By measuring request throughput, you can gain insights into your application's performance and scalability, ensuring it can handle the expected load and providing a smooth and efficient user experience.

Measure request concurrency

Measuring request concurrency is an important aspect of load testing, and it refers to the number of requests processed concurrently. This metric is crucial for understanding the performance of your application and ensuring optimal user experience.

When measuring request concurrency, it's essential to consider the impact of concurrent users on your application's performance. Concurrent usage occurs when multiple users access the same application simultaneously to execute separate transactions. This can put a strain on your system, especially if the number of concurrent users is high.

To calculate the number of concurrent users, you can use the following basic formula:

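> Concurrent users = Daily visits / (Peak hours × (60 / Average visit length in minutes))

In other words, divide the daily visits by the number of peak hours to get visits per hour, then multiply by the average visit length expressed in hours.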
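A small Python sketch makes the arithmetic concrete. It reads the formula as visits per peak hour multiplied by the fraction of an hour that an average visit lasts; the function name and the sample numbers are purely illustrative.

```python
def concurrent_users(daily_visits, peak_hours, avg_visit_minutes):
    """Estimate how many users are active at the same moment."""
    if peak_hours <= 0:
        raise ValueError("peak_hours must be positive")
    visits_per_peak_hour = daily_visits / peak_hours
    # A visit that lasts avg_visit_minutes occupies that fraction of an hour.
    return visits_per_peak_hour * (avg_visit_minutes / 60)


# Illustrative numbers: 12,000 visits spread over 8 peak hours,
# with each visit lasting 4 minutes on average.
print(round(concurrent_users(12_000, 8, 4)))  # 100
```

The estimate assumes visits are spread evenly across the peak hours, so real peaks can be higher.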

Additionally, it's crucial to understand the concept of load balancing. Load balancing is a technique used to distribute network traffic across multiple servers, ensuring efficient handling of user requests. By employing load balancing, you can enhance application responsiveness and availability. One common approach is the Round Robin method, where the load balancer allocates a request to the first server on the list and then pushes that server to the bottom of the list for the next request.
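The Round Robin behaviour described above can be sketched in a few lines of Python. This is a minimal illustration of the rotation idea, not the implementation of any particular load balancer; the class name and server labels are hypothetical.

```python
from collections import deque


class RoundRobinBalancer:
    """Hand out servers in turn, moving each chosen server to the back."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self._servers = deque(servers)

    def next_server(self):
        server = self._servers[0]
        self._servers.rotate(-1)  # the chosen server goes to the end of the list
        return server


balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
print([balancer.next_server() for _ in range(5)])
# ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']
```

Round Robin ignores how busy each server is, which is why it works best when requests cost roughly the same.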

When implementing load balancing, it's important to consider factors such as the number of concurrent users, the type of data being accessed, and the number of servers involved. By balancing the load effectively, you can improve your application's performance and ensure that each user has an equal chance of receiving a timely response.

Furthermore, it's crucial to ensure that your application can handle failures. If one server goes down, there should be another available to take its place to maintain the performance and availability of your application.

In summary, by measuring request concurrency and effectively implementing load balancing, you can optimize your application's performance during concurrent usage. This will result in an enhanced user experience, ensuring that user requests are processed smoothly and efficiently.

Measure connection throughput

Measuring throughput is a crucial aspect of load balancing, as it helps determine how effectively load is distributed across servers. Request throughput is the number of successful requests executed per unit of time, while connection throughput is the number of connections that clients initiate per second. High throughput with stable latency and error rates indicates that the load balancer is distributing traffic well across the infrastructure.

To measure throughput, you can use various tools and techniques. One common approach is to utilise performance monitoring tools such as the Server Performance Advisor (SPA) provided by Microsoft or the built-in Performance Monitor tool in Windows operating systems. These tools allow you to track metrics such as the number of active connections, request counts, and response times, which collectively provide insights into the throughput of your system.

Additionally, you can perform load testing to assess the throughput of your load-balancing setup. This involves simulating a high volume of requests and measuring how well the system handles the load. By using tools like ApacheBench (ab), you can generate a controlled load and analyse the system's response. A typical workflow looks like this:

- Establish Baselines: Before conducting any tests, it's essential to establish baselines by gathering data on the system's performance during a known healthy period. This data will serve as a reference point for comparison during load testing.

- Select Appropriate Metrics: Choose the right metrics to monitor, such as active connections, request counts, latency, error rates, and response times. These metrics will provide insights into the load balancer's performance and help identify potential bottlenecks.

- Utilise Performance Monitoring Tools: Use performance monitoring tools like SPA or the Performance Monitor in Windows. These tools can track various metrics in real time and provide visual representations of the system's performance, helping you identify areas that need improvement.

- Conduct Load Testing: Perform controlled load tests using tools like ApacheBench. Send a defined number of concurrent requests to the load balancer and measure the system's response. Repeat the tests with different load levels to understand how the system behaves under varying loads.

- Analyse Results: Compare the results obtained during load testing with the established baselines. Look for any deviations or bottlenecks that may impact the system's performance. Pay close attention to metrics like request throughput, response times, and error rates, as they indicate the effectiveness of the load distribution.

- Optimise and Refine: Use the insights gained from the load testing to optimise your load-balancing configuration. Adjust the settings, algorithms, or server resources to improve the throughput and overall performance of the system.

- Continuous Monitoring: Load balancing is an ongoing process, and it's essential to continuously monitor the system's performance. Regularly review the metrics and make adjustments as necessary to ensure optimal throughput and system health.
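The baseline comparison in the steps above can be automated with a simple check. The following Python sketch is one possible approach, with assumed metric names and an assumed 10% tolerance; adjust both to whatever your monitoring tools actually report.

```python
def compare_to_baseline(baseline, measured, tolerance=0.10,
                        higher_is_better=("throughput_rps",)):
    """Return metrics that got worse than the baseline by more than tolerance.

    Both arguments map a metric name to a number. The result maps each
    regressed metric to its relative change (0.3 means 30% higher).
    """
    regressions = {}
    for name, base in baseline.items():
        if name not in measured or base == 0:
            continue  # nothing to compare against
        change = (measured[name] - base) / base
        # For throughput a drop is bad; for latency and errors a rise is bad.
        worse_by = -change if name in higher_is_better else change
        if worse_by > tolerance:
            regressions[name] = round(change, 3)
    return regressions


baseline = {"throughput_rps": 1000, "p99_latency_ms": 200, "error_rate": 0.01}
measured = {"throughput_rps": 950, "p99_latency_ms": 260, "error_rate": 0.01}
print(compare_to_baseline(baseline, measured))  # {'p99_latency_ms': 0.3}
```

Here throughput dropped by only 5%, which is within tolerance, while the 99th percentile latency rose by 30% and is flagged.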

By following these steps and continuously measuring connection throughput, you can fine-tune your load-balancing setup to handle high volumes of traffic efficiently, ensuring a seamless and reliable user experience.

Measure connection concurrency

Measuring connection concurrency is a critical aspect of load balancing during performance test executions. It involves evaluating the number of client connections that are actively processed by the server simultaneously. This metric is essential because it directly impacts the user experience, as a higher number of concurrent connections can lead to slower response times and even connection rejections.

To effectively monitor connection concurrency, consider the following strategies:

- Baseline Establishment: Before initiating any load testing, it is crucial to establish a baseline by gathering data on the system's performance during a known healthy period. This baseline will serve as a reference point for comparison during load testing.

- Load Testing Tools: Utilize load testing tools such as ApacheBench (ab) or Nighthawk to generate controlled load tests. These tools can simulate production load by sending requests at a steady rate, helping you understand how the server handles varying levels of connection concurrency.

- Performance Monitoring: Employ performance monitoring tools like the Server Performance Advisor (SPA) or the Performance Monitor to track the server's behaviour during load testing. These tools provide insights into how the system handles concurrent connections, allowing you to identify potential bottlenecks or performance issues.

- Capacity Planning: Load testing helps in capacity planning by determining the load threshold for acceptable performance. Measure the request rate at which the server can maintain a specific latency level (e.g., 99th percentile latency) and ensure that system resource utilisation remains optimal.

- Error Rate Evaluation: Monitor the error rate during load testing. Pay attention to errors such as HTTP 5xx errors and prematurely closed connections, as they indicate that the server is unable to handle the load effectively, potentially due to high connection concurrency.

- Resource Utilisation: Evaluate the utilisation of system resources like CPU, RAM, and file handles during load testing. Applications may experience reduced performance or even crashes when these resources are exhausted. Therefore, it is crucial to understand the availability of resources under heavy load.

- Connection Reuse: Consider that HTTPS clients often reuse connections for multiple requests. This can impact the number of active connections and should be factored into your connection concurrency measurements and capacity planning.

- Health Checks: Implement "health checks" by frequently attempting to connect to backend servers. If a server fails a health check, automatically remove it from the pool and redirect traffic to healthy servers. This ensures that user requests are directed only to responsive and available servers.

- Load Balancer Algorithms: Familiarise yourself with different load balancer algorithms, such as Round Robin, Least Connections, Least Response Time, and Least Bandwidth Method. Each algorithm uses a specific approach to distribute traffic among servers, and understanding them can help you make informed decisions about connection concurrency management.

- Redundancy and Failover: Ensure redundancy by deploying multiple load balancers in a cluster to avoid a single point of failure. If the primary load balancer fails, a secondary load balancer can take over, minimising the impact on users and maintaining connection concurrency.
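The capacity-planning and error-rate checks from the list above can be combined into one pass over the results of a load test run. This Python sketch uses the nearest-rank method for the 99th percentile and counts HTTP 5xx responses as errors; the function names and thresholds are assumptions for illustration.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value with pct% of samples at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def within_capacity(latencies_ms, status_codes, p99_limit_ms, max_error_rate):
    """Check one load level against a latency target and an error budget."""
    if not status_codes:
        raise ValueError("status_codes must not be empty")
    errors = sum(1 for code in status_codes if code >= 500)
    error_rate = errors / len(status_codes)
    p99 = percentile(latencies_ms, 99)
    ok = p99 <= p99_limit_ms and error_rate <= max_error_rate
    return ok, p99, error_rate
```

Running the check at increasing request rates and noting the last rate at which it still passes gives a rough load threshold for the server. Prematurely closed connections would need to be counted separately, since they do not produce a status code.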

By following these strategies and continuously monitoring connection concurrency, you can optimise the performance of your application during load testing and ensure a seamless user experience, even under high load conditions.
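To show how health checks and a connection-aware algorithm fit together, here is a minimal Python sketch of Least Connections selection that skips unhealthy servers. It is a simplified model with hypothetical names; real load balancers track connections and probe health in their own ways.

```python
class LeastConnectionsPool:
    """Pick the healthy server with the fewest active connections."""

    def __init__(self, servers):
        self._active = {server: 0 for server in servers}
        self._healthy = set(servers)

    def mark_health(self, server, healthy):
        """Record the outcome of a health check for one server."""
        if healthy:
            self._healthy.add(server)
        else:
            self._healthy.discard(server)

    def acquire(self):
        """Choose a server for a new connection and count it as active."""
        candidates = [s for s in self._active if s in self._healthy]
        if not candidates:
            raise RuntimeError("no healthy servers available")
        server = min(candidates, key=lambda s: self._active[s])
        self._active[server] += 1
        return server

    def release(self, server):
        """Call when a connection to the server closes."""
        if self._active[server] > 0:
            self._active[server] -= 1
```

A server that fails a health check stops receiving new connections until `mark_health(server, True)` is called again, which mirrors the remove-and-redirect behaviour described in the list.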

Frequently asked questions

Load balancing is a process of distributing incoming requests across multiple servers to avoid overloading a single server. This helps improve application performance and ensures high availability by reducing the risk of app inaccessibility.

You can use tools like LoadComplete to run load tests against your load-balanced servers. In LoadComplete, you can assign IP addresses to virtual users who will simulate requests from those addresses. This helps the load balancer distinguish them as different clients and route them to separate destination servers.

Some important metrics to monitor during load testing include request throughput, request concurrency, connection throughput, connection concurrency, request latency, and error rate. Additionally, it is crucial to track the utilisation of system resources such as CPU, RAM, and file handles.

Create small-scale tests that focus on the performance limits of a single server. Choose open-loop load patterns, which keep sending requests at the target rate regardless of how quickly the server responds, to simulate production load more accurately. Load generators such as Nighthawk or ApacheBench (ab) are suitable for this kind of testing.

By comparing the performance of your application with and without a load balancer, you can determine the effectiveness of load balancing. Graphing tools like gnuplot can help visualise the results, showing how your application handles increasing load and whether the load balancer is distributing requests evenly.
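One simple way to check whether requests are distributed evenly is to record which backend served each request and compare the counts with an even split. The Python sketch below assumes you can obtain that per-request server label, for example from access logs or a response header; how you collect it is outside this example, and the function name is hypothetical.

```python
from collections import Counter


def distribution_skew(served_by, servers):
    """Relative deviation of each server's request count from an even split.

    served_by: one server label per handled request.
    servers:   every server in the pool, so idle servers are included.
    """
    if not served_by or not servers:
        raise ValueError("served_by and servers must not be empty")
    counts = Counter(served_by)
    expected = len(served_by) / len(servers)
    return {s: (counts[s] - expected) / expected for s in servers}
```

A value near 0 means a server received close to its fair share, 0.5 means 50% more than its share, and -1.0 means it received nothing. Some skew is expected with algorithms that account for server load, so interpret the numbers with the configured algorithm in mind.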