Linux is a highly flexible, customisable, and scalable platform. However, monitoring and debugging Linux performance can be challenging for sysadmins. There are several tools available to help monitor application performance in Linux, ranging from command-line utilities to comprehensive monitoring software. Here are some of the best tools to monitor application performance in Linux:

- Top: This command displays real-time active processes based on CPU time consumption and provides insights into CPU utilisation, process details, memory utilisation, and more.

- TCPdump: A network packet analyser that captures and analyses packets transferred/received over the network.

- Netstat: A popular command to print network connections, interface statistics, and troubleshoot network-related issues.

- Htop: An alternative to the top command that offers an interactive user interface and allows you to monitor system processes and storage.

- Acct or psacct: Monitors user and application activity in a multi-user environment, tracking resource consumption and providing insights into running processes.

- Iotop: A Python-based utility that monitors input/output utilisation of system threads and processes, helping identify processes with high disk or memory usage.

- Sematext Server Monitoring: A tool that provides real-time visibility into the performance of Linux servers, collecting and reporting key metrics such as CPU, memory, disk usage, processes, network, and load.

- Zabbix: An open-source monitoring solution that collects performance metrics such as CPU usage, network bandwidth, and available disk space, with customisable alerts and notifications.

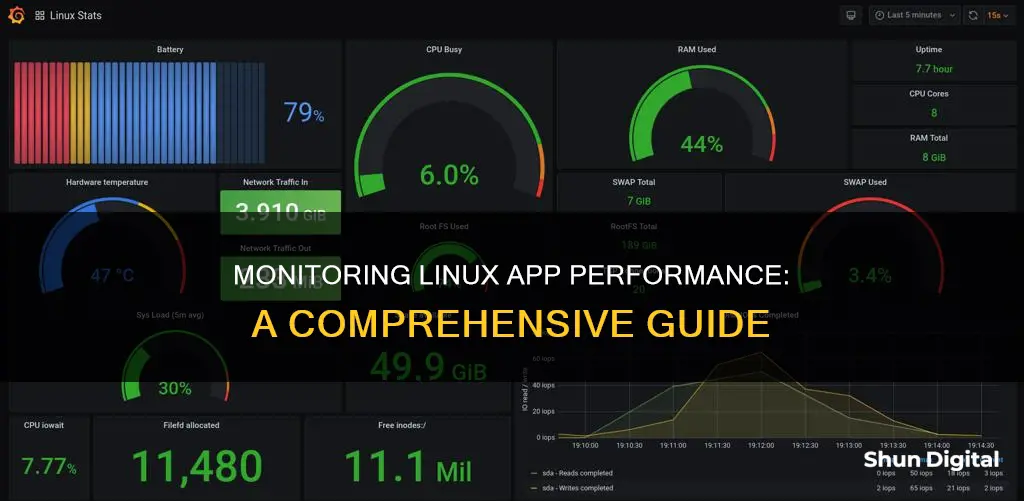

- Prometheus and Grafana: Used together as an open-source monitoring and alerting solution, Prometheus collects hardware and OS metrics, while Grafana provides data visualisation through dashboards.

- Nagios Core: An open-source monitoring and alerting tool that can be extended with custom plugins, offering flexible Linux server monitoring, including OS metrics, CPU, memory, disk usage, and more.

- Elastic Stack (ELK Stack): A popular tool for Linux performance monitoring that includes Elasticsearch, Logstash, Kibana, and Beats, allowing you to monitor system and service metrics, visualise data, and set up alerts.

- Datadog: A SaaS-based monitoring solution that collects standard performance metrics and provides interactive dashboards and alerts for Linux servers.

- ManageEngine OpManager: Offers network and performance monitoring for Linux servers, tracking CPU, memory, disk, server availability, and network traffic, with customisable dashboards and alerts.

- SolarWinds Server & Application Monitor (SAM): Provides automated tools to track application and Linux server performance, including CPU, memory, disk, networking, and process utilisation, with built-in monitoring templates.

- Site24x7: A cloud-based monitoring software that collects performance metrics, monitors services and processes, and provides powerful dashboards and alerts for Linux servers.

- PRTG Network Monitor: Uses sensors to monitor specific metrics in your network, offering built-in support for customised sensors and out-of-the-box auto-discovery, with a dashboard for real-time visibility.

| Characteristics | Values |

|---|---|

| Monitored attributes | Thread count, Process count, Major page faults, Context Switches, Server Time, Server Uptime, CPU utilization, System load, Memory Utilization, Process details, Disk behavior, Inode numbers, Cron jobs, Network interfaces, Hardware metrics |

| Supported distributions | Red Hat Linux, Redhat Enterprise Linux, Mandriva, Fedora, SuSe, Debian, Ubuntu, CentOS, Oracle Linux, IBM Cloud Linux, Microsoft Azure Linux, Google Cloud Platform (GCP) Linux, and more |

| Tools | top, lsof, pidstat, ps, tcpdump, iostat, netstat, free, sar, ipcs, ioping, mpstat, vmstat, pmap, ntop, w, uptime, proc, KDE System Guard, GNOME System Monitor, Conky, Cacti, vnstat, htop, ss, Nagios, Zabbix, Prometheus, Grafana, Nagios Core, Elastic Stack, Datadog, ManageEngine OpManager, SolarWinds Server & Application Monitor (SAM), Site24x7, PRTG Network Monitor |

What You'll Learn

Monitor CPU usage

Monitoring CPU usage is essential for system administrators, developers, and users to ensure optimal performance of their Linux systems. By understanding CPU usage, you can identify performance bottlenecks, detect resource-intensive processes, and optimise system performance. Linux offers various tools and commands to monitor CPU activity and utilisation. Here are some methods to monitor CPU usage in Linux:

Top Command

The `top` command is one of the most commonly used methods to monitor CPU usage in Linux. It provides real-time information about system resource usage, including CPU utilisation. When executed, `top` displays a dynamic list of processes sorted by resource consumption, with CPU utilisation information prominently displayed. The `%CPU` column represents the percentage of CPU usage for each process, while the `%CPU(s)` line shows the overall CPU usage for all processes. The `top` command also offers interactive features, such as sending signals to processes (by pressing `k`) and viewing individual CPU core utilisation (by pressing `1').

Htop Command

The `htop` command is a user-friendly alternative to `top`, providing an interactive and visually appealing interface to monitor system resources, including CPU usage. It displays real-time information about system resources, with CPU utilisation shown in the header section. `htop` represents CPU usage graphically, displaying a bar for each CPU core, with the length of the bar indicating the utilisation percentage. It also provides detailed CPU usage for each process, allowing you to navigate with arrow keys and access additional options with F keys.

Mpstat Command

The `mpstat` command is part of the sysstat software bundle and is commonly available on Linux distributions. It provides an overview of CPU usage, including average values since the system boot. By default, `mpstat` displays overall CPU usage across all cores, but you can specify the `-P ALL` option to view individual CPU core statistics and identify any imbalances in usage. Additionally, the `-I ALL` option displays interrupt statistics related to CPU usage, providing insights into interrupt-driven activity contributing to CPU utilisation.

Sar Command

The `sar` command is a versatile tool for managing system resources, including CPU usage. While it covers various system aspects, you can use the `-u` option to specifically track CPU performance. For example, the command `sar -u 5` will display CPU usage every 5 seconds. To exit the command and stop monitoring, press `Ctrl+C`.

Iostat Command

The `iostat` command provides insights into CPU utilisation and input/output (I/O) load, including disk read/write activity. It displays average CPU usage since the last boot and offers additional options to customise the output. For example, to view CPU utilisation statistics at regular intervals, you can use the command `iostat -c 1` to refresh the CPU statistics every second.

In addition to these command-line tools, Linux also offers graphical utilities like GNOME System Monitor and KDE System Monitor, which provide visual representations of CPU performance through graphs and percentage breakdowns of individual cores. These tools allow for intuitive analysis of CPU usage and system performance.

Resetting Your ASUS Monitor: A Step-by-Step Guide

You may want to see also

Monitor memory usage

Memory usage monitoring is a crucial task for Linux system administrators and users who want to optimise their system's performance. There are several command-line tools available in Linux that allow you to monitor memory usage in real time. Here are some of the most useful ones:

- Cat /proc/meminfo: This command displays a virtual file that provides real-time information about the system's memory usage, including total usable RAM, free memory, cached memory, and the kernel's use of shared memory and buffers.

- Free: This command provides a user-friendly output of memory usage statistics, such as total, used, free, shared, buff/cache, and available memory. It also shows the amount of swap memory consumed and available.

- Vmstat: This command reports virtual memory statistics, giving an overview of processes, memory, paging, block IO, traps, and CPU activity.

- Top: An interactive tool that shows real-time information about system resource utilisation, including CPU and memory usage on a per-process basis. It also provides information on the number of users logged in, CPU utilisation/number of CPUs, and memory/system process swap.

- Htop: Similar to the top command, htop offers an improved user interface and additional features. It supports vertical and horizontal scrolling, colour-coding, and provides complete command lines for processes.

- Sar: A system activity reporter that monitors various aspects of system performance, including memory usage.

In addition to these command-line tools, Linux also offers graphical tools for monitoring memory usage, such as the System Monitor app and the GNOME System Monitor. These tools are useful for users who prefer a visual interface or are less comfortable with the command line.

Best Places to Buy Ultrawide Monitors: A Comprehensive Guide

You may want to see also

Monitor disk usage

Disk usage can be monitored using the `df` command, which stands for "disk filesystems". This utility prints a list of all the filesystems on your system, along with their size, usage, and where they are mounted. The `-h` flag can be used to display byte numbers in KB, MB, and GB, and the `-T` flag shows the type of filesystem. For example:

Bash

Df -hT

The `df` command can also be used to check the usage of a specific filesystem or mount, which is useful for quickly checking the root system:

Bash

Df /

To monitor disk usage over time and be alerted if usage reaches a certain threshold, a cron job can be set up to run the `df` command automatically and send a message if usage exceeds a specified value.

In addition to `df`, there are other commands and utilities available in Linux to monitor disk usage:

- `du`: used to view directory sizes.

- `ls`: used to view the contents of a directory, with the `-lh` flag to see file sizes.

- `find`: used to find all files in a directory tree and their sizes.

- `fdisk`: used to view and manage disk partitions.

- `sfdisk`: similar to `fdisk`, but can display partition sizes in MB and supports more partition tables.

- `cfdisk`: a command-line utility with a user interface for creating, editing, deleting, or modifying partitions.

- `parted`: used to create, edit, and view partitions.

- `pydf`: a Python command-line utility similar to `df`, but with coloured output.

GPS Ankle Monitors: Can They Hear You?

You may want to see also

Monitor network traffic

Monitoring network traffic is crucial for maintaining optimal performance, detecting security breaches, planning resource allocation, and ensuring compliance with regulatory requirements. Linux offers a range of tools and methods to effectively monitor network traffic. Here are some detailed instructions on how to monitor network traffic in a Linux environment:

Command-Line Tools:

- Tcpdump: A versatile command-line packet analyser that captures and displays detailed information about network packets. It allows for packet filtering based on source/destination IP addresses, ports, and protocols.

- Tshark: Part of the Wireshark suite, tshark offers advanced filtering and analysis capabilities, supporting various output formats and real-time packet capturing.

- Iftop: Displays real-time bandwidth usage on an interface, providing an intuitive view of network traffic at the individual connection level, including source/destination IP addresses, ports, and data transfer rates.

- Netstat: A popular command-line tool that prints network connections, interface statistics, and aids in troubleshooting network-related issues. It can display statistics for all protocols and the kernel routing table.

- Nethogs: Groups network traffic by process ID, allowing you to monitor bandwidth consumption of individual processes or applications. It provides an interactive display with information on PID, user, program, interface, and sent/received data.

- Nload: A command-line tool that sums up all network traffic on an interface, providing incoming and outgoing traffic details with basic statistics like average, minimum, and maximum rates.

- Speedometer: Similar to nload, speedometer doesn't differentiate traffic by socket or process. However, it offers more customisable displays, including graphs with weighted averages and simple averages of sampled data.

Graphical User Interface (GUI) Tools:

- Wireshark: A widely-used network protocol analyser with a rich graphical interface for capturing, filtering, and analysing network packets.

- Ntop: A web-based tool that provides real-time and historical traffic analysis with interactive charts, graphs, and tables for visualising network traffic patterns.

- Cacti: A complete network graphing solution that uses SNMP (Simple Network Management Protocol) to monitor and visualise network traffic, allowing custom graph creation.

Network Monitoring Frameworks:

- Nagios: An open-source monitoring tool for various network services, including traffic monitoring. It offers a flexible architecture with plugins for monitoring network devices, services, and performance, along with notification and alerting capabilities.

- Zabbix: A web-based framework that provides real-time monitoring of network devices, services, and performance. It includes customisable dashboards, event correlation, and proactive alerting.

Network Traffic Filtering and Analysis:

- BPF (Berkeley Packet Filter): A mechanism for filtering and capturing network packets based on specific criteria, such as IP addresses, ports, or protocols. BPF expressions can be used with tools like tcpdump and tshark for effective traffic filtering.

- Protocol-specific Analysis: Different protocols require unique analysis techniques. For example, HTTP traffic analysis can be performed using ngrep to capture and filter packets, allowing for the detection of anomalies and security vulnerabilities. DNS and SMTP traffic analysis can be achieved using tools like dnstop, tcpdump, or Wireshark.

Troubleshooting ASUS Monitor Audio Loss: Quick Fixes

You may want to see also

Monitor system logs

Monitoring system logs is crucial for troubleshooting, system maintenance, and ensuring optimal performance. Linux logs provide a timeline of events for the Linux operating system, applications, and system and are a valuable troubleshooting tool when issues arise.

Where are the logs?

Linux log files are stored in plain text and can be found in the /var/log directory and subdirectory. There are logs for everything: system, kernel, package managers, boot processes, Xorg, Apache, MySQL, etc.

How to view the logs

First, change to the /var/log directory using the command cd /var/log. Then, type ls to see the logs stored under this directory.

Using syslog

One of the most important logs to view is the syslog, which logs everything but auth-related messages. Issue the command vi syslog to view everything under syslog. This file can be quite long, so to go to the end, use Shift+G. You can also use dmesg | less to scroll through the output.

Using dmesg

The dmesg command prints the kernel ring buffer and sends you to the end of the file. From there, you can pipe the output to less to scroll through the output: dmesg | less. You can also view log entries for a specific facility, such as the user facility, with dmesg –facility=user.

Using tail

The tail command is handy for viewing log files as it outputs the last part of files. For example, tail /var/log/syslog will print out only the last few lines of the syslog file. You can also use tail -f /var/log/syslog to continue watching the log file and print out the next line written to the file. To escape the tail command, hit Ctrl+X.

You can also instruct tail to only follow a specific amount of lines. For example, to view only the last five lines written to syslog, use tail -f -n 5 /var/log/syslog. This will follow the input to syslog and only print out the most recent five lines. As soon as a new line is written, the oldest line will be removed.

Using Systemd

Systemd, a system and service manager for Linux, includes its logging system called journald. Logs are stored in /var/log/journal/ or in memory, depending on the configuration. To view logs collected by journald, use the journalctl command. Common commands include:

- Journalctl to view all logs

- Journalctl -u [service_name] to view logs specific to a particular service

- Journalctl -b to view logs from the current boot

- Journalctl –since “YYYY-MM-DD HH:MM:SS to filter logs by a specific time range

Troubleshooting G-Sync: Is Your Monitor Broken?

You may want to see also

Frequently asked questions

There are several tools available for monitoring application performance in Linux, including:

- Sematext's Server Monitoring Tool: This tool provides real-time visibility into the performance of your Linux servers by collecting and reporting key metrics such as CPU, memory, disk usage, and network health. It also offers automated discovery of services running on Linux servers and customizable alerts.

- Zabbix: An open-source monitoring solution that can be used to monitor Linux servers and collect performance metrics such as CPU usage, network bandwidth, and disk space. It offers out-of-the-box templates and supports automatic discovery of Linux servers.

- Prometheus and Grafana: Used together, these tools provide an open-source monitoring and alerting solution. Prometheus collects hardware and OS metrics from the Linux kernel and stores them as time-series data, while Grafana provides data visualization through dashboards.

- Nagios Core: An open-source monitoring and alerting tool that can be extended with custom plugins. It remotely executes plugins on your Linux server to collect comprehensive monitoring data, including OS metrics, CPU, memory, disk usage, and more.

- Elastic Stack (ELK Stack): A well-known tool for Linux performance monitoring that consists of Elasticsearch, Logstash, Kibana, and Beats. You can use Metricbeat to collect system and service metrics from your Linux servers and send them to the rest of the ELK Stack for analysis and visualization.

- Datadog: A SaaS-based monitoring solution that offers a Linux agent to collect performance metrics such as CPU and disk usage. It provides real-time monitoring, interactive dashboards, and automated alerts.

Some common command-line tools for monitoring Linux performance include:

- top: Lists real-time active processes based on CPU time consumption, updating every five seconds. It displays general information such as system uptime, load, RAM, and swap space, as well as process lists with PID, memory, and CPU/memory usage.

- tcpdump: A network troubleshooting utility that captures and analyzes TCP/IP packets transferred over the network. It offers various filters and flags to capture specific traffic and store it in pcap files.

- netstat: Provides detailed network configuration and troubleshooting information, including incoming/outgoing connections, interface statistics, listening/open ports, and routing tables.

- htop: A command-line utility that monitors system processes and storage, offering an interactive user interface. It allows you to scroll through processes, kill or reprioritize them, and supports mouse operations.

- acct or psacct: Monitors user and application activity in a multi-user environment, tracking the time duration of user access, commands used, and running processes.

- iotop: A Python-based utility that monitors input/output utilization of system threads and processes, helping to identify processes with high disk or memory usage.

Some key metrics to monitor for Linux performance include:

- CPU Utilization: Monitor individual core utilization and status, including run queue, blocked processes, user time, system time, I/O wait time, idle time, and interrupts per second.

- System Load: Track the number of jobs executed and their duration to identify workload that needs to be moved to other systems.

- Memory Utilization: Trace memory usage to determine server load and identify potential bottlenecks caused by insufficient memory.

- Disk Behavior: Monitor disk usage and I/O to prevent application performance issues and check for signs of hardware failure.

- Inode Usage: Linux systems use inode numbers to identify files/directories. Track inode usage to manage file and directory creation efficiently and prevent issues related to inode limits.

- Cron Jobs: Monitor cron jobs that schedule tasks like backups, emails, and status checks to ensure optimal performance and gain visibility into backend job execution.

Using a Linux monitoring tool offers several benefits, including:

- Proactive Issue Identification: Monitoring allows you to identify issues before they become critical problems, enabling you to optimize resource utilization and prevent performance degradation.

- System Health and Availability: Monitoring tools help track system health and availability, alerting you to service outages, performance issues, or security threats.

- Security: Monitoring system activity, logs, file integrity, and network traffic can help detect suspicious behavior and unauthorized access attempts, enabling you to take swift action to protect sensitive data.

- Historical Data for Troubleshooting: By collecting and analyzing historical data, you can establish performance baselines and pinpoint the root cause of issues when bugs arise.

When choosing a Linux monitoring tool, consider the following:

- Scalability: Ensure the tool can handle growing data volumes, server counts, and future growth.

- Features: Look for tools that offer real-time monitoring, specific metrics (CPU, memory, disk, etc.), alerting systems, visualizations, and collaboration features.

- Alerting Customization: Evaluate how customizable the alerts are, including the ability to define thresholds, notification methods (email, SMS, etc.), and escalation paths.

- Pricing: Consider the cost of the tool and whether it offers transparent pricing and overage protection.

- Ease of Use: Choose a tool with an intuitive interface, clear dashboards, and easy-to-understand reports to ensure a smooth user experience for your team.

- Integrations: Look for tools that integrate with your existing systems and services to provide a centralized monitoring platform.