Camera calibration is a fundamental task in computer vision, used to correct image distortions and estimate camera parameters. It involves determining factors such as focal length, principal point, and lens distortion coefficients. By calibrating a camera, we can relate image coordinates to real-world measurements accurately, enabling precise measurements, object recognition, and mapping in computer vision applications. This process is crucial for various applications, including 3D reconstruction, object tracking, augmented reality, and image analysis. The goal is to enhance the performance of computer vision algorithms by providing undistorted images and ensuring that image features align with the laws of projective geometry.

| Characteristics | Values |
|---|---|
| Purpose | To correct distortions and ensure accurate measurements in computer vision tasks |
| Applications | 3D reconstruction, object tracking, augmented reality, image analysis, machine vision, robotics, navigation systems, SLAM, visual odometry |
| Parameters | Intrinsics, extrinsics, and distortion coefficients |
| Calibration Method | Capturing images of a calibration pattern (e.g. checkerboard) with known dimensions to extract feature points and match them to corresponding 3D world points |
| Calibration Models | Pinhole camera model, fisheye camera model |
| Intrinsic Parameters | Focal length, optical center/principal point, radial distortion coefficients, skew coefficient |
| Extrinsic Parameters | Orientation and location of the camera, rotation, translation |
| Distortion Types | Radial distortion, tangential distortion |

What You'll Learn

- Camera calibration ensures accurate measurements and reliable analysis for applications like 3D reconstruction and object tracking

- It involves determining parameters like focal length, principal point, and lens distortion coefficients

- Calibration is essential to correct lens distortions and enable precise measurements in computer vision tasks

- Camera calibration is crucial for robotics applications, where pixels in an image must be mapped to physical geometry

- Calibration helps improve the performance of computer vision algorithms by providing undistorted images and adhering to projective geometry laws

Camera calibration ensures accurate measurements and reliable analysis for applications like 3D reconstruction and object tracking

Camera calibration is a fundamental process in computer vision, playing a crucial role in ensuring accurate measurements and reliable analysis. By correcting distortions and estimating camera parameters, it enables a range of applications, including 3D reconstruction and object tracking.

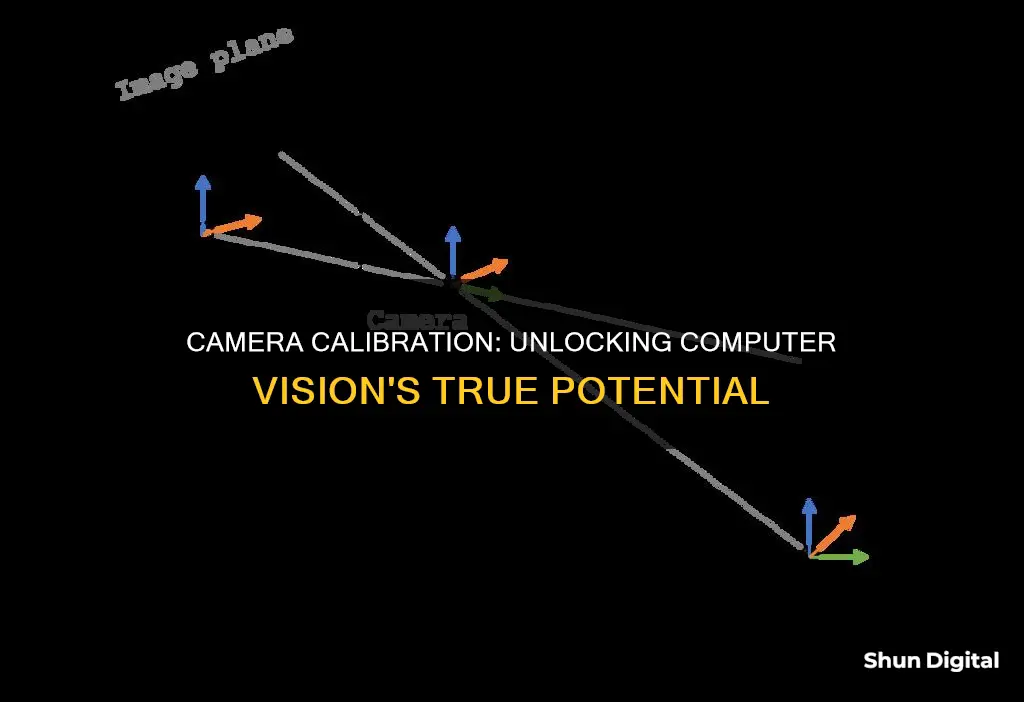

At its core, camera calibration involves determining specific camera parameters to facilitate precise operations. This process establishes an accurate relationship between 3D points in the real world and their corresponding 2D projections in captured images. The calibration technique is based on obtaining intrinsic and extrinsic camera parameters. Intrinsic parameters, such as optical centre, focal length, and lens distortion coefficients, enable mapping between pixel and camera coordinates. On the other hand, extrinsic parameters describe the orientation and location of the camera, including its rotation and translation relative to a world coordinate system.
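
As a minimal sketch of this relationship, the Python snippet below projects a 3D world point into pixel coordinates using an ideal pinhole model with no lens distortion. The function name `project_point`, the convention that extrinsics map world coordinates to camera coordinates as `R X + t`, and all numeric values are assumptions chosen for illustration.

```python
def project_point(point_world, R, t, fx, fy, cx, cy):
    """Project a 3D world point to pixel coordinates with an ideal pinhole model."""
    # Extrinsics: world -> camera coordinates, X_cam = R X_world + t
    X, Y, Z = (
        sum(R[i][j] * point_world[j] for j in range(3)) + t[i]
        for i in range(3)
    )
    if Z <= 0:
        raise ValueError("point is behind the camera")
    # Perspective division gives normalised image coordinates
    x, y = X / Z, Y / Z
    # Intrinsics: normalised coordinates -> pixel coordinates
    return fx * x + cx, fy * y + cy


# Hypothetical camera: identity rotation, world origin 2 units in front of the camera
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 2.0]
u, v = project_point([0.5, -0.25, 0.0], R, t, fx=800.0, fy=800.0, cx=320.0, cy=240.0)
```

With these illustrative values the point lands at pixel (520, 140). Calibration works in the opposite direction: it estimates `R`, `t`, and the intrinsic values from many such point correspondences.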

The importance of camera calibration becomes evident when considering its impact on the performance of computer vision algorithms. In fields like stereo vision, 3D reconstruction, and object tracking, undistorted images and accurate measurements are essential. Without proper calibration, distortions introduced by camera lenses can hinder the efficacy of these algorithms.

To calibrate a camera, images of a calibration pattern with known dimensions are typically captured. Feature points are extracted from these images and matched with corresponding 3D world points. Solving calibration equations then allows for the estimation of camera parameters. The pinhole camera model, a basic camera model without a lens, serves as a foundation for understanding the imaging process. However, the introduction of lenses brings about distortions that need to be addressed through calibration.
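
The "known dimensions" of the pattern are what supply the 3D world points. As a rough sketch, assuming a flat checkerboard lying in the world plane Z = 0, the coordinates of its inner corners can be generated as follows. The function name, the row-by-row ordering, and the board size are illustrative assumptions; the ordering should match whatever order your corner detector reports.

```python
def checkerboard_object_points(cols, rows, square_size):
    """Return 3D world coordinates of a checkerboard's inner corners.

    Assumes the board lies in the plane Z = 0 and that corners are
    ordered row by row, starting at the world origin.
    """
    return [
        (c * square_size, r * square_size, 0.0)
        for r in range(rows)
        for c in range(cols)
    ]


# Hypothetical board: 9 x 6 inner corners, 25 mm squares
points = checkerboard_object_points(cols=9, rows=6, square_size=25.0)
```

Each detected 2D corner in an image is then paired with the entry at the same index in `points`, giving the 2D-3D correspondences that the calibration equations are solved from.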

The process of camera calibration is not without its challenges. Real-world calibration can be tricky because the models used are often simplified and may not cover all lens types. Additionally, collecting accurate calibration data and solving the optimisation problem require careful consideration to ensure reliable results.

In conclusion, camera calibration is vital for achieving accurate measurements and reliable analysis in computer vision applications. By addressing distortions and estimating camera parameters, it enables applications like 3D reconstruction and object tracking to perform effectively, even with the complexities introduced by different lens types and real-world conditions.

It involves determining parameters like focal length, principal point, and lens distortion coefficients

Camera calibration is a fundamental task in computer vision that involves determining parameters such as focal length, principal point, and lens distortion coefficients. This process is crucial for ensuring accurate measurements and reliable analysis in various applications, including 3D reconstruction, object tracking, and image analysis.

The focal length is a critical parameter in camera calibration, representing the distance between the pinhole and the image plane. In calibration it is typically expressed in pixels, and in an ideal pinhole camera the focal lengths in the x and y directions are equal. In practice, flaws in the digital camera sensor, non-square pixels, post-processing, lens distortion, and other factors can cause the two values to differ.

The principal point, also known as the optical centre, is another important parameter. It is defined as the intersection of the camera's "principal axis" with the image plane. The principal axis is the line perpendicular to the image plane that passes through the pinhole. The principal point offset refers to the location of the principal point relative to the origin of the film or image sensor.
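
The focal length and principal point are commonly collected into a single intrinsic matrix. The notation below is an assumption rather than something defined in this article: $f_x, f_y$ are the focal lengths in pixels, $(c_x, c_y)$ is the principal point in pixels, $s$ is the skew coefficient (zero when the pixel axes are perpendicular), $F$ is the focal length in physical units, and $p_x, p_y$ are the physical width and height of one pixel.

```latex
K =
\begin{bmatrix}
f_x & s   & c_x \\
0   & f_y & c_y \\
0   & 0   & 1
\end{bmatrix},
\qquad
f_x = \frac{F}{p_x},
\quad
f_y = \frac{F}{p_y}
```

Written this way, $f_x$ and $f_y$ differ whenever the pixels are not square, which is one reason calibration estimates them separately.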

Lens distortion coefficients are also crucial in camera calibration. Lenses can introduce radial and tangential distortion, causing straight lines in the real world to appear curved in the image. Radial distortion occurs when light rays bend more at the edges of a lens than at its centre, and it is more pronounced in smaller lenses. Tangential distortion, on the other hand, occurs when the lens and image plane are not parallel. By accounting for these distortion coefficients, camera calibration can correct for lens distortion and improve the accuracy of measurements.
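
To make the role of the coefficients concrete, here is a minimal sketch of the widely used polynomial (Brown-Conrady) distortion model, written with the coefficient naming that OpenCV documents (`k1`, `k2`, `k3` radial; `p1`, `p2` tangential). It operates on normalised image coordinates, not pixels. The function name and the coefficient values are assumptions for illustration only.

```python
def distort(x, y, k1, k2, p1, p2, k3=0.0):
    """Apply radial and tangential distortion to normalised image coordinates."""
    r2 = x * x + y * y
    # Radial term: scales the point along the line through the image centre
    radial = 1.0 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    # Tangential terms: model a lens that is not parallel to the image plane
    x_tan = 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_tan = p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return x * radial + x_tan, y * radial + y_tan


# Hypothetical coefficients: mild barrel distortion, no tangential component
centre = distort(0.0, 0.0, k1=-0.2, k2=0.0, p1=0.0, p2=0.0)
edge = distort(0.5, 0.0, k1=-0.2, k2=0.0, p1=0.0, p2=0.0)
```

The point at the image centre is unchanged, while the point at `x = 0.5` is pulled inward to `x = 0.475`. This shows why distortion is weakest near the centre and grows towards the edges.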

Overall, determining parameters such as focal length, principal point, and lens distortion coefficients is essential for camera calibration in computer vision. This process enables accurate measurements, object recognition, and mapping, enhancing the performance of computer vision algorithms.

Calibration is essential to correct lens distortions and enable precise measurements in computer vision tasks

Camera calibration is a fundamental task in computer vision, with applications in 3D reconstruction, object tracking, augmented reality, and image analysis. It is a process of determining specific camera parameters, such as focal length, principal point, and lens distortion coefficients, to ensure accurate measurements and reliable analysis.

The camera calibration process involves capturing images of a calibration pattern, such as a checkerboard, with known dimensions. These images are used to extract feature points and match them to corresponding 3D world points. By solving the calibration equations, we can estimate the camera parameters, including intrinsic and extrinsic parameters. Intrinsic parameters refer to the characteristics of the camera itself, such as focal length and optical centre, while extrinsic parameters describe the orientation and location of the camera in relation to a world coordinate system.
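
In practice, "solving the calibration equations" usually means finding the parameters that minimise the reprojection error, that is, the distance between where corners were detected and where the current model predicts they should appear. The sketch below computes the root-mean-square version of that error. The function name and sample points are illustrative assumptions.

```python
import math


def rms_reprojection_error(observed, projected):
    """Root-mean-square pixel distance between detected and reprojected points."""
    if len(observed) != len(projected) or not observed:
        raise ValueError("need two non-empty point lists of equal length")
    squared = [
        (u_o - u_p) ** 2 + (v_o - v_p) ** 2
        for (u_o, v_o), (u_p, v_p) in zip(observed, projected)
    ]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical detections and the model's predictions for the same two corners
observed = [(100.0, 100.0), (200.0, 100.0)]
projected = [(103.0, 104.0), (200.0, 100.0)]
error = rms_reprojection_error(observed, projected)
```

A low value after optimisation suggests that the estimated intrinsic and extrinsic parameters explain the observations well. A high value often points to poor corner detection or a camera model that does not fit the lens.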

Accurate camera calibration ensures that the data acquired from the camera is free from distortions, allowing computer vision systems to extract meaningful insights about real-world objects from the images. It helps establish an accurate relationship between 3D points in the real world and their corresponding 2D projections in the captured image. This is particularly important for tasks such as object recognition, accurate mapping, and measurements in computer vision applications.

In addition, camera calibration is crucial when working with multiple cameras from different manufacturers or reusing algorithms trained with data from different cameras. By calibrating each camera, we can account for individual peculiarities, optical defects, and mounting inaccuracies, ensuring consistent performance across different camera setups.

Camera calibration is crucial for robotics applications, where pixels in an image must be mapped to physical geometry

Camera calibration is a fundamental task in computer vision, and it is crucial for robotics applications. It involves determining specific parameters of a camera to enable accurate mapping between the real world and its digital representation. This is essential whenever pixels in an image must be mapped to physical geometry, because the accuracy and reliability of the whole system depend on that mapping.

Cameras are the primary sensors for computer vision, and they need to be calibrated for any type of geometric application. While deep learning has largely replaced classical computer vision for object detection, pixel mapping between images and the physical world still relies on calibrated image sensors for many robotics applications. This is especially important when precise measurements and reliable analysis are required, such as in robotics navigation systems and 3D scene reconstruction.
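
As one illustration of mapping pixels to the physical world, the sketch below intersects the viewing ray of a pixel with a flat ground plane, which is a common simplification in mobile robotics. It assumes an ideal pinhole camera whose image has already been undistorted, extrinsics that map world to camera coordinates as `R X + t`, and a ground plane at world Z = 0. The function name and all values are hypothetical.

```python
def pixel_to_ground(u, v, R, t, fx, fy, cx, cy):
    """Intersect the viewing ray of an undistorted pixel with the world plane Z = 0."""
    # Ray direction in camera coordinates (pinhole model)
    d_cam = [(u - cx) / fx, (v - cy) / fy, 1.0]
    # R maps world -> camera, so its transpose maps camera -> world
    d_world = [sum(R[j][i] * d_cam[j] for j in range(3)) for i in range(3)]
    # Camera centre in world coordinates: C = -R^T t
    centre = [-sum(R[j][i] * t[j] for j in range(3)) for i in range(3)]
    if abs(d_world[2]) < 1e-12:
        raise ValueError("ray is parallel to the ground plane")
    scale = -centre[2] / d_world[2]
    if scale <= 0:
        raise ValueError("ground plane is behind the camera along this ray")
    return centre[0] + scale * d_world[0], centre[1] + scale * d_world[1]


# Hypothetical camera looking along the world Z axis from 2 units away
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 2.0]
x, y = pixel_to_ground(520.0, 140.0, R, t, fx=800.0, fy=800.0, cx=320.0, cy=240.0)
```

Here pixel (520, 140) maps to the plane point (0.5, -0.25). A single camera can only recover such a position because the plane is known in advance; without that constraint a pixel defines a ray, not a point.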

The calibration process involves estimating both intrinsic and extrinsic camera parameters. Intrinsic parameters include optical characteristics such as focal length, principal point, and lens distortion coefficients, which allow for mapping between pixel coordinates and camera coordinates. On the other hand, extrinsic parameters describe the orientation and location of the camera in relation to a world coordinate system, accounting for rotation and translation.

Accurate camera calibration is essential for robotics applications as it enables precise measurements and reliable analysis. It helps robots understand their environment and perform tasks accurately. For example, in a manufacturing setting, a robot with a calibrated camera can detect and measure objects with high accuracy, ensuring that parts are assembled correctly.

One challenge with camera calibration is maintaining consistency over time. Camera parameters can drift because of factors such as temperature changes or mechanical loading, so regular recalibration is often necessary to keep the system accurate. Manual calibration methods exist, but they can be time-consuming and prone to errors. Automated calibration techniques, such as using robotic arms to position calibration targets, have been proposed to improve accuracy and efficiency.

In conclusion, camera calibration plays a crucial role in robotics applications by enabling accurate pixel mapping between images and the physical world. It ensures that robots can perceive and interact with their environment reliably, making it a fundamental aspect of any robotic system that relies on computer vision for navigation and object manipulation tasks.

Calibration helps improve the performance of computer vision algorithms by providing undistorted images and adhering to projective geometry laws

Calibration is a critical step in computer vision that helps algorithms perform well. It involves estimating camera parameters so that images can be undistorted and measurements made precisely.

Undistorted images are crucial for effective computer vision algorithms. Many algorithms in fields like stereo vision, 3D reconstruction, and panorama stitching assume that the input images are free from distortion. When camera calibration is neglected, the performance of these algorithms suffers significantly.

Camera calibration aims to identify the geometric characteristics of image formation. It entails determining intrinsic and extrinsic parameters. Intrinsic parameters include focal length, optical centre, and lens distortion coefficients. These parameters enable mapping between pixel coordinates and camera coordinates. Extrinsic parameters, on the other hand, describe the camera's orientation and position in a given coordinate system.

By calibrating the camera, we can correct for lens distortions. Lenses introduce various types of distortions, such as radial and tangential distortion. Radial distortion arises from light rays bending more at the edges of a lens than at its centre, with smaller lenses exhibiting greater distortion. Tangential distortion occurs when the lens and image plane are not parallel. Calibration helps us quantify these distortions and apply corrective measures.
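
One way to apply such a correction to individual points is to invert the distortion model numerically. The sketch below assumes the common polynomial model with two radial coefficients (`k1`, `k2`) and two tangential coefficients (`p1`, `p2`) acting on normalised coordinates, and uses a simple fixed-point iteration. The function names, coefficient values, and iteration count are assumptions; the iteration converges for mild distortion but is not guaranteed to for extreme lenses.

```python
def distortion_terms(x, y, k1, k2, p1, p2):
    """Return the radial scale and tangential offsets at normalised point (x, y)."""
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    dx = 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    dy = p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return radial, dx, dy


def distort(x, y, **coeffs):
    """Forward model: ideal point -> distorted point."""
    radial, dx, dy = distortion_terms(x, y, **coeffs)
    return x * radial + dx, y * radial + dy


def undistort(xd, yd, iterations=20, **coeffs):
    """Inverse model: distorted point -> ideal point, by fixed-point iteration."""
    x, y = xd, yd  # initial guess: the distorted point itself
    for _ in range(iterations):
        radial, dx, dy = distortion_terms(x, y, **coeffs)
        x, y = (xd - dx) / radial, (yd - dy) / radial
    return x, y


# Hypothetical coefficients, as a calibration routine might report them
coeffs = dict(k1=-0.2, k2=0.05, p1=0.001, p2=-0.001)
xd, yd = distort(0.3, -0.2, **coeffs)
x, y = undistort(xd, yd, **coeffs)
```

Distorting the point (0.3, -0.2) and then undistorting the result recovers the original coordinates to within numerical precision, which is a useful round-trip check for any undistortion routine.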

Additionally, calibration ties the camera to the laws of projective geometry. An ideal pinhole camera maps straight lines in the scene to straight lines in the image, and many algorithms rely on that property. Lens distortion breaks it. Once distortion has been modelled and removed, image features again obey the projective model, which improves the accuracy of the algorithms built on it.

In summary, camera calibration enhances the performance of computer vision algorithms by providing undistorted images and ensuring compliance with projective geometry laws. It enables accurate measurements, reliable analysis, and successful applications in fields like 3D reconstruction, object tracking, and augmented reality.

Frequently asked questions

What is camera calibration?

Camera calibration is the process of determining specific parameters of a camera to enable accurate measurements and analysis in computer vision tasks. It involves estimating both intrinsic and extrinsic parameters to correct for distortions and ensure reliable results.

Why is camera calibration important?

Camera calibration is crucial because it enhances the performance of computer vision algorithms. These algorithms often assume undistorted images as input, and if the camera parameters are inaccurate, the results can be unreliable. By calibrating the camera, we can improve the accuracy of object detection, 3D reconstruction, and other computer vision applications.

What are the advantages of camera calibration?

Camera calibration offers several advantages, including:

- Accurate measurements: Calibration helps correct lens distortions and establish a precise relationship between 3D objects and their 2D projections, enabling accurate measurements in computer vision applications.

- Improved object recognition: By calibrating the camera, algorithms can more effectively identify and track objects, enhancing the overall performance of computer vision systems.

- Enhanced mapping: Calibration assists in accurately mapping image coordinates to real-world measurements, facilitating better spatial understanding for applications like robotics and navigation.